Unless you have been living under a rock for the last six months, you probably know that the new installment in the Star Wars film series is coming up. And it’s going to be huge! The Force awakens in the movie, and it has awakened in our geeky minds too. We at ELEKS spent a lot of time meditating with Yoda and practicing with Obi-Wan to find the Force. But all we really had to do was use Kinect for Windows 2.0 and Sphero 2.0. Ladies and gentlemen, we give you the power to move objects (almost) telepathically.

Why Kinect?

You should know Kinect is very cool. I mean, come on, it can make us a little Darth Vader-ish! But it was quite demanding to work with. Kinect works only over USB 3.0 because it sends more data than USB 2.0 can handle. It also requires DirectX 11 and drivers that support Windows 8 or higher. But when all the requirements are satisfied, you get 120 MB/s of color data, 13 MB/s of depth data, 13 MB/s of infrared data, 32 KB/s of audio and other cool features. Cool, huh? Plus, the Kinect SDK allows skeletal tracking for up to 6 people at the same time. All this makes Kinect the right sensor for building human-technology interaction.

Why Sphero?

Certainly, BB-8 was an inspiration for our team. And since BB-8 is based on Sphero, a device we already had, we chose it for our experiment. We used the unofficial .NET SDK, which allowed us to make a custom control system for Sphero using Kinect and Kinect SDK for Windows.

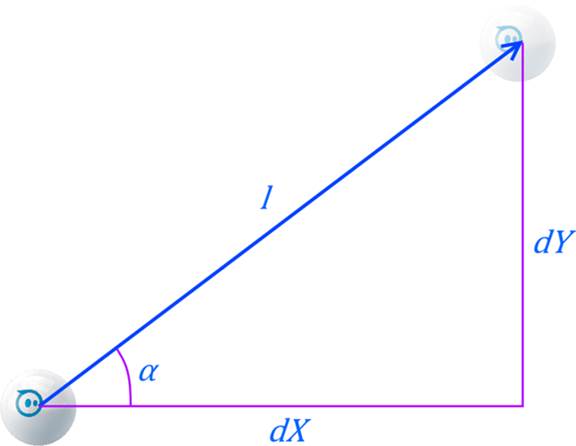

The .NET SDK actually has limited functionality. To move Sphero, you must set an angle and a velocity. But we already had the necessary coordinates thanks to JediGeustureRecognizer, the class we created to detect Sphero’s points of movement. So, how can we move Sphero from its current point to the next one? Let's see:

Configuring Kinect

According to Microsoft’s guidelines on working with Kinect, the first thing you have to do is determine the PHIZ (physical human interaction zone). Usually, Kinect for Windows is used to interact with the screen, so the PHIZ is in front of the person’s body.

At first, the idea was to use the square space in front of the person’s body and project this space onto the ground. So, when a person moved their hand in the air, Sphero would move on the ground. But this concept didn’t feel natural, because when you want to move something (even telepathically), you usually stretch your hand out and point your palm at the thing you want to move.

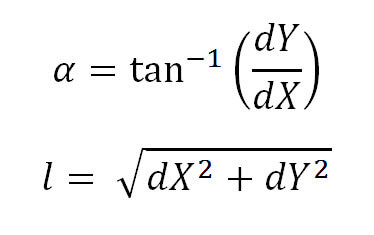

This is when we decided to move our PHIZ to the ground, which turned out to be easier than we thought. We used the person’s head and the active hand as pointers and calculated the point projected on the ground by the line which starts at the head point and goes through the active hand point.

Now it’s all about the math.

Point B (the head) has coordinates (0.26, 1.90, 3.20), and point M (the active hand) has coordinates (0.30, 1.40, 2.80). Kinect sends coordinates in meters; the Y axis points up, and the Z axis points away from Kinect, showing the distance to the objects in front of it. We have to find the projected point C on the floor, so C has Y=0.

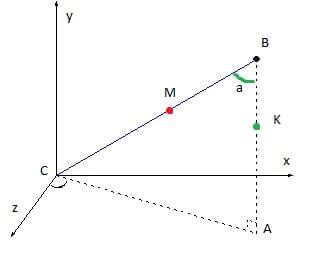

To solve this problem, we will use simple trigonometry:

We used

So, the algorithm is the following:

1. Find the projection point in the ZY plane following the procedure below.

2. Find the projection point in the XZ plane following the procedure below.

Finding projection point from the triangle:

With these results you can easily find the projected point on the floor.
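The formulas from the original figures are not reproduced in the text, so here is one possible way to work the two steps with similar triangles. It assumes that the floor is the plane Y = 0 (the Y axis points up) and that C lies on it; the subscripted symbols are introduced here only for illustration.

$$
Z_C = Z_B - Y_B \cdot \frac{Z_B - Z_M}{Y_B - Y_M} = 3.20 - 1.90 \cdot \frac{3.20 - 2.80}{1.90 - 1.40} = 3.20 - 1.52 = 1.68
$$

$$
X_C = X_B + (X_M - X_B) \cdot \frac{Z_C - Z_B}{Z_M - Z_B} = 0.26 + 0.04 \cdot \frac{1.68 - 3.20}{2.80 - 3.20} = 0.26 + 0.152 = 0.412
$$

Under these assumptions the projected point is roughly C = (0.41, 0, 1.68): about 1.7 m in front of Kinect and slightly to the side.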

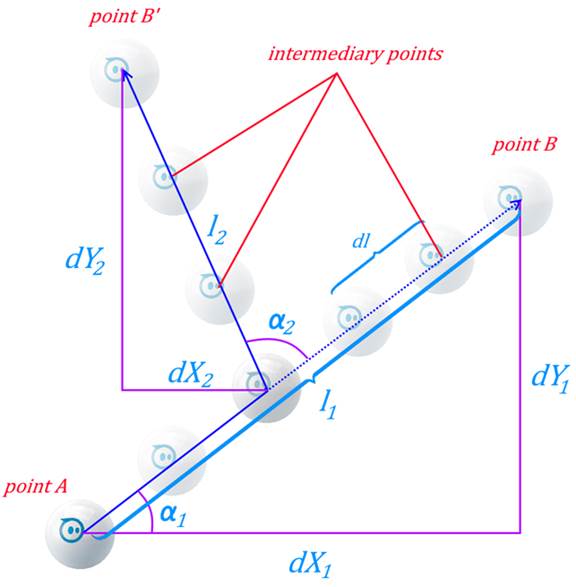
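In code, the same projection can be sketched as a ray from the head through the hand that is intersected with the floor. This is a minimal sketch, not the project's implementation: the class and method names are hypothetical, and it assumes the coordinates are already expressed so that the floor is the plane Y = 0.

```csharp
using System;

// Hypothetical helper; names are illustrative, not from the project.
public static class FloorProjection
{
    // Projects the head-to-hand ray onto the floor (assumed to be Y = 0).
    // Returns false when the ray never reaches the floor.
    public static bool TryProject(
        double headX, double headY, double headZ,
        double handX, double handY, double handZ,
        out double floorX, out double floorZ)
    {
        floorX = 0;
        floorZ = 0;

        // The ray only hits the floor if the hand is below the head.
        double dy = handY - headY;
        if (dy >= 0)
        {
            return false;
        }

        // Solve headY + t * dy = 0 for the ray parameter t.
        double t = -headY / dy;
        floorX = headX + t * (handX - headX);
        floorZ = headZ + t * (handZ - headZ);
        return true;
    }
}
```

Unlike the hand calculation, the sketch also covers the case where the hand is raised to head level or higher: the line then never meets the floor, so there is no point to send to Sphero.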

Next, we need to send this position to Sphero. We have the angle, the distance and Sphero’s speed value of 2 m/s. The speed can be scaled, so if you set the scale to 0.5, the real Sphero speed will be 1 m/s.

The Sphero .NET SDK provides the command “Roll(angle, velocity)”, which makes Sphero roll until you send the command “Roll(angle, 0)”. To manage the moments when Sphero has to stop, we created a timer that sends commands to the gadget at a calculated frequency.

To control Sphero better, we allowed the destination point to change on the move. We did it by dividing the distance (l) from point A to point B into small iteration distances (dl), the distances Sphero covers between two timer ticks without changing direction. On each iteration we recalculated the next intermediary point. So, if the destination point (B) changes while Sphero is moving, the gadget changes its direction towards a new intermediary point on the way to the new destination point (B’).

This is how it looks in code:

    // Sphero is assumed to have reached the previously calculated intermediary point
    Point currentPosition = _nextIntermediaryPoint;
    SetCurrentPosition(currentPosition);
    Point destinationPosition = GetDestinationPosition();

    // Recalculate the Sphero angle (0.0 <= a <= 359.0)
    double spheroAngle = GetSpheroAngle(currentPosition, destinationPosition);

    // Recalculate the remaining distance
    double realDistance = GetDistance(currentPosition, destinationPosition);

    if (realDistance >= _iterationDistance)
    {
        // Save the recalculated Sphero angle (needed for a correct stop)
        _lastAngle = (int)spheroAngle;

        // Move with the recalculated values
        Roll(_lastAngle, (int)_spheroSpeed);

        // Recalculate the real angle (-180.0 < a <= 180.0)
        double realAngle = GetAngle(currentPosition, destinationPosition);

        // Recalculate the next intermediary point
        Point nextPoint = CalculateNextIterationPosition(currentPosition, realAngle,
            _iterationDistance);
        SetNextIterationPosition(nextPoint);
    }
    else
    {
        // Sphero is near or on the destination point: stop
        Roll(_lastAngle, 0);
    }
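The helper methods called in the snippet are not shown in this article. The sketch below is one way they could be written; every body here is an assumption rather than the project's code. It assumes floor coordinates in meters, a hypothetical FloorPoint type standing in for Point, and that Sphero's heading 0 points along +Z (away from Kinect) after calibration. GetTimerIntervalMs is an extra hypothetical helper that derives the timer period from dl and the speed.

```csharp
using System;

// Hypothetical stand-in for the Point type used in the snippet above.
public struct FloorPoint
{
    public readonly double X; // meters, sideways
    public readonly double Z; // meters, away from Kinect
    public FloorPoint(double x, double z) { X = x; Z = z; }
}

// Assumed implementations; only the method names come from the article.
public static class SpheroMath
{
    // Straight-line distance on the floor, in meters.
    public static double GetDistance(FloorPoint from, FloorPoint to)
    {
        double dx = to.X - from.X;
        double dz = to.Z - from.Z;
        return Math.Sqrt(dx * dx + dz * dz);
    }

    // Direction in degrees, -180 < a <= 180, measured from +Z towards +X.
    public static double GetAngle(FloorPoint from, FloorPoint to)
    {
        return Math.Atan2(to.X - from.X, to.Z - from.Z) * 180.0 / Math.PI;
    }

    // The same direction mapped to the 0..360 range used for Roll.
    public static double GetSpheroAngle(FloorPoint from, FloorPoint to)
    {
        double angle = GetAngle(from, to);
        return angle < 0 ? angle + 360.0 : angle;
    }

    // The point reached after rolling iterationDistance along angleDegrees.
    public static FloorPoint CalculateNextIterationPosition(
        FloorPoint current, double angleDegrees, double iterationDistance)
    {
        double radians = angleDegrees * Math.PI / 180.0;
        return new FloorPoint(
            current.X + iterationDistance * Math.Sin(radians),
            current.Z + iterationDistance * Math.Cos(radians));
    }

    // Timer period in milliseconds needed to cover dl at the given speed (m/s).
    public static double GetTimerIntervalMs(double iterationDistance, double speed)
    {
        if (speed <= 0)
        {
            throw new ArgumentOutOfRangeException("speed");
        }
        return iterationDistance / speed * 1000.0;
    }
}
```

With dl = 0.1 m and a real speed of 1 m/s, for example, the timer would fire about every 100 ms, and each tick would move the expected position 10 cm closer to the destination.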

How do we Track the Force?

To recognize hand gestures, we used Body data. Since the algorithms that determine the human skeleton are far from perfect, we had to separate accurate data from noise according to the formula FrameRecived - HandTrackedFrames <= Epsilon (where FrameRecived is the number of frames we covered, HandTrackedFrames is the number of frames in which the hand was open, and Epsilon is a constant that determines noise sensitivity). This formula helps to determine whether a person is holding an open hand in front of themselves. For the research we set Epsilon to 3 and ran the check every 8 frames. This means that if we tracked an open hand at least 5 times out of 8, the Force would be applied.
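The filter can be sketched as a small counter that is evaluated once per window of frames. This is an illustration under stated assumptions, not the project's tracking code: the class and member names are hypothetical, and the defaults (a window of 8 frames, Epsilon of 3) are the values quoted above.

```csharp
using System;

// Hypothetical sketch of the open-hand noise filter; names are illustrative.
public class OpenHandFilter
{
    private readonly int _windowSize;
    private readonly int _epsilon;
    private int _framesReceived;
    private int _handTrackedFrames;

    public OpenHandFilter(int windowSize = 8, int epsilon = 3)
    {
        _windowSize = windowSize;
        _epsilon = epsilon;
    }

    // Feed one frame. Returns null while the window is still filling up;
    // once it is full, returns whether the Force should be applied and
    // starts counting a new window.
    public bool? AddFrame(bool handOpen)
    {
        _framesReceived++;
        if (handOpen)
        {
            _handTrackedFrames++;
        }

        if (_framesReceived < _windowSize)
        {
            return null;
        }

        // FrameRecived - HandTrackedFrames <= Epsilon
        bool forceApplied = _framesReceived - _handTrackedFrames <= _epsilon;
        _framesReceived = 0;
        _handTrackedFrames = 0;
        return forceApplied;
    }
}
```

In a Kinect application the handOpen flag would typically come from the body frame, for instance by comparing the tracked body's HandRightState with HandState.Open; how the original project wires this up is not shown here.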

The tracking logic can be found here.

Behold the Force!

First, set up the scene in the application:

1. Put Sphero on the floor.

2. Set the correct angle (the backlight has to be opposite the Kinect).

3. Set up the speed.

Take your place on the scene like a young padawan. Feel the Force in you. Stretch your hand out and focus your palm (and mind) on Sphero. Now telekinetically move Sphero with your hand. To stop Sphero, just clench your fist. When you feel the Force again, change your position and continue moving Sphero.

Cool! Where can I get the Source Code?

The concept find you can on GitHub.

Any questions or thoughts on the topic? Contact us here.

May the Force be with you!
