The great thing about intelligent apps is that they can be integrated into almost every area of a customer’s life. Over the last few years, more and more smart connected devices have been hitting the market, and these gadgets are usually augmented with digital conversational interfaces. We're living in an age where even fridges can connect to the internet and offer an interactive digital experience on top of their primary function.

While the first internet-enabled refrigerators were introduced years ago, the concept of a smart fridge received another major upgrade last spring, when Samsung launched its Family Hub™. This refrigerator has a Wi-Fi-enabled touchscreen, and aside from various fancy communication and entertainment features, it lets you manage your groceries and even do your food shopping right on the spot. The software behind these features is based on Tizen, an open-source operating system that powers 50 million Samsung gadgets.

Later, in November 2016, Samsung announced its collaboration with Microsoft to bring .NET support to Tizen. The newly released Visual Studio Tools for Tizen preview promised to let .NET developers easily build Tizen applications for Samsung's IoT devices, as well as for smartphones, wearables, and smart TVs.

We at ELEKS were among those eagerly awaiting the toolkit's release. Inspired by our recent successful experiment with a voice-controlled bot for website search, we decided to combine our expertise in the retail domain with our Xamarin development skills to build a custom Tizen application for the Samsung refrigerator: a smart voice assistant for online grocery shopping. Basically, we wanted to let users shop for food right in front of the fridge’s LCD display using voice commands. Interested in how we implemented this functionality and what we got as a result? Read on to find out.

Imagine you can literally ask your smart fridge to do grocery shopping for you

What if you could browse an online grocery store, add products to your shopping cart and complete your order right on your smart fridge’s screen using your voice instead of touch interactions? Does speaking to your fridge sound weird? Not at all. At least when you have a friendly, voice-powered shopping assistant integrated with your refrigerator.

From a usability point of view, we wanted our application to support the basic online shopping process. It should retrieve product information from an API (for our demo, we used Amazon.com) and display it on the screen, enabling the user to browse the store and search for specific products using voice commands. The app should allow adding products to the cart and removing them when needed, just as in a conventional online shop, but with no touch interaction required. The assistant would recognize human speech and respond, displaying lists of the requested products, confirming the number of items needed, and adding them to the cart. The user would then be able to make a purchase and complete the checkout right away.
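The cart behavior described above (adding and removing products, tracking quantities, checking out) fits in a small data model. The sketch below is in Python purely for illustration; the actual app was written in C# with Xamarin.Forms, and the `Product`/`Cart` names, SKU keys, and integer-cent prices are our assumptions, not the app's real classes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Product:
    sku: str
    name: str
    price_cents: int  # integer cents avoid floating-point rounding in totals


@dataclass
class Cart:
    # SKU -> (product, quantity)
    lines: Dict[str, Tuple[Product, int]] = field(default_factory=dict)

    def add(self, product: Product, quantity: int = 1) -> None:
        """Add units of a product, merging with an existing line for the same SKU."""
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        _, current = self.lines.get(product.sku, (product, 0))
        self.lines[product.sku] = (product, current + quantity)

    def remove(self, sku: str, quantity: Optional[int] = None) -> None:
        """Remove some units, or the whole line when quantity is None."""
        if quantity is not None and quantity <= 0:
            raise ValueError("quantity must be positive")
        if sku not in self.lines:
            return
        product, current = self.lines[sku]
        if quantity is None or quantity >= current:
            del self.lines[sku]
        else:
            self.lines[sku] = (product, current - quantity)

    def total_cents(self) -> int:
        return sum(p.price_cents * q for p, q in self.lines.values())

    def checkout(self) -> List[Tuple[str, int]]:
        """Return the order as (sku, quantity) pairs and empty the cart."""
        order = [(sku, q) for sku, (_, q) in self.lines.items()]
        self.lines.clear()
        return order
```

A voice command such as "remove the milk" would map to `remove(sku)` with no quantity, while "remove one milk" would pass `quantity=1`.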

What we learned developing for Tizen, and the pitfalls to watch for

Here is the technology stack we chose:

- Tizen platform-specific .NET API, exposing Tizen features

- Xamarin.Forms as a UI framework

- Microsoft Bot Framework (Microsoft.Bot), which powers our bot: it recognizes text with the help of a LUIS model and returns product-related data, such as category, description, price, quantity, etc.

- Microsoft LUIS (Language Understanding Intelligent Service) to power language recognition and allow our bot to respond with a relevant action.

As a first step, we needed to set up the development environment, which included Xamarin, the Visual Studio Tools for Tizen preview, the Tizen emulator, and the Tizen MI-Based Debugger. Note that the emulator has specific hardware requirements: make sure you have at least a 2nd-generation Intel HD Graphics processor or a discrete video card. In our case, we used a GeForce 8400 GS.

Another pitfall: we could not run our app in debug mode. It turned out that debug mode would be fully implemented only starting with the Tizen 4.0 beta, so we had to create a custom logger to trace bugs in our code.
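Without a working debugger, the usual fallback is to log every call and every exception. A minimal sketch of such a trace logger follows, written in Python with the standard `logging` module for illustration only; the article does not describe how the authors' logger was built, and the `traced` decorator, the logger name, and the log file path are hypothetical.

```python
import functools
import logging
from typing import Any, Callable, TypeVar

F = TypeVar("F", bound=Callable[..., Any])


def make_trace_logger(path: str, name: str = "ShoppingAssistant") -> logging.Logger:
    """Create (or reset) a logger that appends timestamped entries to a file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False  # keep trace output out of the root logger
    for old in list(logger.handlers):  # avoid duplicate handlers on repeated setup
        logger.removeHandler(old)
        old.close()
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s"))
    logger.addHandler(handler)
    return logger


def traced(logger: logging.Logger) -> Callable[[F], F]:
    """Decorator that logs each call, its result, and any exception it raises."""
    def decorator(func: F) -> F:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            logger.debug("enter %s args=%r kwargs=%r", func.__qualname__, args, kwargs)
            try:
                result = func(*args, **kwargs)
            except Exception:
                # logger.exception also records the full traceback
                logger.exception("error in %s", func.__qualname__)
                raise
            logger.debug("exit %s -> %r", func.__qualname__, result)
            return result
        return wrapper  # type: ignore[return-value]
    return decorator
```

Decorating the bot's handlers and service calls this way gives a readable call trace to inspect after a failed run.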

One more important issue we faced with Tizen was that the project couldn’t detect NuGet packages at runtime, so we had to add them to the Tizen lib folder manually. Only after all these workarounds were in place could we start developing our app.
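If you hit the same problem, the manual copy can at least be scripted. The Python sketch below assumes the standard NuGet layout, where each package keeps its assemblies under `lib/<target-framework>/`; the destination folder and the framework moniker you pass in are assumptions you would adapt to your own Tizen project.

```python
import shutil
from pathlib import Path
from typing import List


def copy_package_assemblies(packages_dir: Path, lib_dir: Path, framework: str) -> List[Path]:
    """Copy the DLLs built for one target framework from a NuGet packages folder into lib_dir.

    Assumes assemblies live under .../lib/<framework>/ inside each package.
    Files already present with the same or a newer timestamp are skipped.
    """
    lib_dir.mkdir(parents=True, exist_ok=True)
    copied: List[Path] = []
    for dll in sorted(packages_dir.rglob("*.dll")):
        if dll.parent.name != framework or dll.parent.parent.name != "lib":
            continue  # skip other target frameworks and non-lib assets
        target = lib_dir / dll.name
        if target.exists() and target.stat().st_mtime >= dll.stat().st_mtime:
            continue
        shutil.copy2(dll, target)  # copy2 preserves the timestamp used in the check above
        copied.append(target)
    return copied
```

Filtering on the framework folder matters because many packages ship several builds of the same DLL, and copying them all into one flat folder would let the wrong one win.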

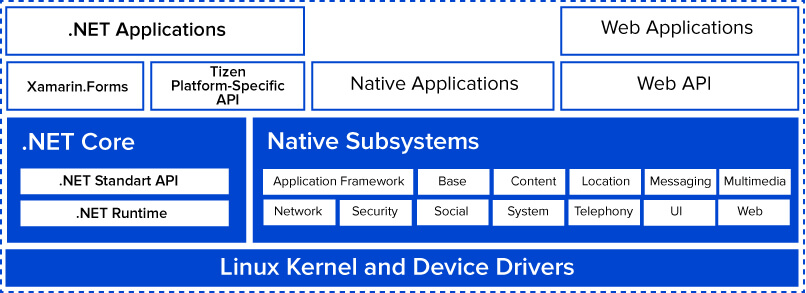

Below is the basic Tizen .NET architecture in more detail.

Tizen .NET architecture

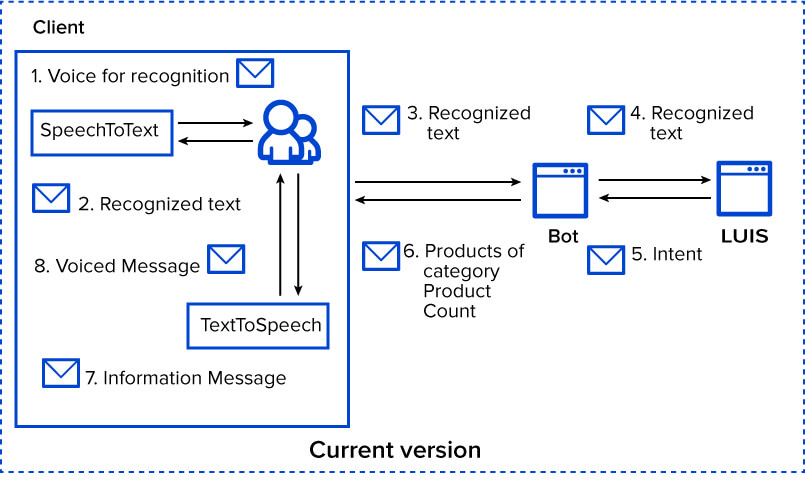

By default, Tizen mobile apps use the native SpeechToText library to transform speech into text. In our case, we used a custom LUIS model to provide language recognition. In general, the process works like this: the assistant takes the user's text and passes it to LUIS, so the model can analyze it and trigger the bot to perform a certain action. Using the Microsoft Bot Framework, we enabled our bot to interact with LUIS, recognize text, and return various sets of data, such as the list of products in a specific category, a certain product from that list, or the quantity of products requested by the user.
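To make the LUIS step more concrete, here is a minimal Python sketch that reads a LUIS v2 endpoint response (its `topScoringIntent` and `entities` fields) and dispatches to a handler. This is not the authors' code, which went through the Microsoft Bot Framework in C#; the intent names such as `ShowCategory`, the `Category` entity type, and the 0.5 score threshold are assumptions for illustration.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Recognition:
    intent: str
    score: float
    entities: Dict[str, List[str]]  # entity type -> recognized values


def parse_luis_v2(payload: str, min_score: float = 0.5) -> Recognition:
    """Extract the top intent and the entities from a LUIS v2 endpoint response."""
    data = json.loads(payload)
    top = data.get("topScoringIntent") or {"intent": "None", "score": 0.0}
    score = float(top.get("score", 0.0))
    # Low-confidence matches are treated as LUIS's built-in "None" intent.
    intent = top["intent"] if score >= min_score else "None"
    entities: Dict[str, List[str]] = {}
    for entity in data.get("entities", []):
        entities.setdefault(entity["type"], []).append(entity["entity"])
    return Recognition(intent, score, entities)


Handler = Callable[[Dict[str, List[str]]], str]


def dispatch(recognition: Recognition, handlers: Dict[str, Handler]) -> str:
    """Route the recognized intent to its handler, falling back to the "None" handler."""
    handler = handlers.get(recognition.intent, handlers["None"])
    return handler(recognition.entities)
```

Keeping the threshold check in one place means a mumbled or off-topic phrase always ends up in the fallback handler instead of triggering a random action.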

Basically, for language recognition we followed the same pattern as we did for our website search assistant. You can find the details in our article about the smart assistant for website search, under the "Enabling language recognition" section. Should you have any questions, just drop us a line or leave a comment below this post.

To emulate an online shopping experience, we needed to create a product database and build a user interface replicating the functionality of an online shop. First, we made up some fake data for the products we wanted to have in stock and developed a simple store UI.
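A fake catalog like this can be generated deterministically so the demo looks the same on every run. The Python sketch below illustrates the idea; the categories, product names, price range, and the simple keyword `search` helper are all hypothetical stand-ins for the demo's real data.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Product:
    sku: str
    name: str
    category: str
    price_cents: int
    in_stock: int


# Hypothetical seed data; the demo's real categories and products are not described.
CATEGORIES: Dict[str, List[str]] = {
    "dairy": ["Whole milk", "Greek yogurt", "Cheddar cheese"],
    "bakery": ["Rye bread", "Croissant"],
    "fruit": ["Bananas", "Green apples"],
}


def make_fake_catalog(seed: int = 42) -> List[Product]:
    """Build a fake catalog; the same seed always yields the same prices and stock levels."""
    rng = random.Random(seed)
    catalog = []
    for category, names in CATEGORIES.items():
        for i, name in enumerate(names):
            catalog.append(Product(
                sku=f"{category}-{i + 1:03d}",
                name=name,
                category=category,
                price_cents=rng.randint(99, 999),
                in_stock=rng.randint(0, 50),
            ))
    return catalog


def search(catalog: List[Product], query: str) -> List[Product]:
    """Case-insensitive match of any query word against product names or category names."""
    words = query.lower().split()
    return [p for p in catalog if any(w in p.name.lower() or w == p.category for w in words)]
```

Using a private `random.Random(seed)` instance, rather than the module-level generator, keeps the catalog reproducible even if other code draws random numbers.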

Our next step was to implement the services for converting speech to text and vice versa. In our first demo, we used online speech services such as the Google Speech API and Microsoft Cognitive Services. Later, in January 2017, the second Tizen preview was released with additional API packages. This let us use the native Tizen SpeechToText and TextToSpeech libraries instead of the online services mentioned above, which improved the performance of our application drastically.
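Swapping online services for native libraries is easier when the rest of the app reaches speech recognition through a small interface. The Python sketch below shows one way to structure that: engines are tried in priority order, so a native engine can come first and an online service can act as a backup. The `SpeechToText` protocol and engine names are hypothetical, and the article does not say whether the app kept the online services as a fallback; text-to-speech could be abstracted the same way.

```python
from typing import List, Protocol, Sequence, Tuple


class SpeechToText(Protocol):
    """Anything that turns recorded audio into text, native or online."""

    name: str

    def is_available(self) -> bool: ...

    def transcribe(self, audio: bytes) -> str: ...


def transcribe_with_fallback(engines: Sequence[SpeechToText], audio: bytes) -> Tuple[str, str]:
    """Try engines in priority order and return (engine name, text) from the first success."""
    errors: List[str] = []
    for engine in engines:
        if not engine.is_available():
            errors.append(f"{engine.name}: unavailable")
            continue
        try:
            return engine.name, engine.transcribe(audio)
        except Exception as exc:  # e.g. network errors, timeouts, recognizer failures
            errors.append(f"{engine.name}: {exc}")
    raise RuntimeError("all speech-to-text engines failed: " + "; ".join(errors))
```

Because the bot only sees the returned text, replacing one engine with another does not touch the language recognition or shopping logic.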

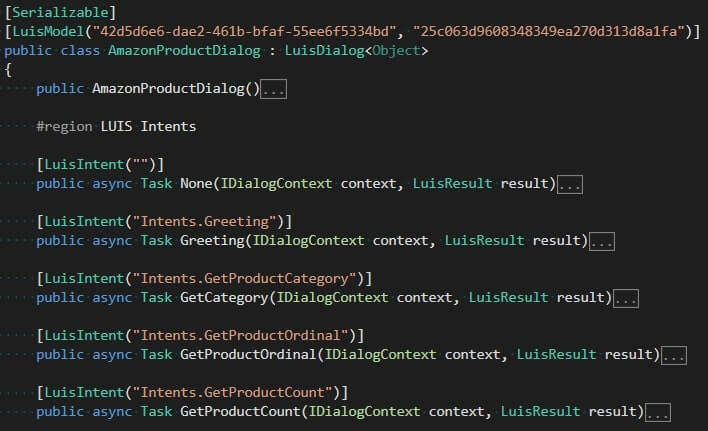

Then we needed to enable our bot to parse request messages and return the products in a certain category, a count of products, or any specific item selected by the user. The image below shows the code. And again, all questions are welcome.
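Since the code itself is only available as an image, here is a rough Python sketch of the three kinds of requests listed above: listing a category, picking a specific item, and reading a count (interpreted here as the quantity the user asks for). The catalog contents and the word-to-number tables are hypothetical; in a LUIS-based bot, quantities would more likely come from LUIS's prebuilt number entity than from hand-written tables.

```python
from typing import Dict, List, Optional

# Hypothetical catalog: category -> product names in the order shown on screen.
CATALOG: Dict[str, List[str]] = {
    "dairy": ["Whole milk", "Greek yogurt", "Cheddar cheese"],
    "fruit": ["Bananas", "Green apples"],
}

# Small illustrative word-to-number tables.
CARDINALS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}
ORDINALS = {"first": 1, "second": 2, "third": 3, "fourth": 4, "fifth": 5}


def products_in(category: str) -> List[str]:
    """Return the products listed under a category (empty if unknown)."""
    return CATALOG.get(category.strip().lower(), [])


def pick_item(category: str, position: str) -> Optional[str]:
    """Resolve 'second' or '2' to a product in the category list; None if out of range."""
    token = position.strip().lower()
    index = ORDINALS.get(token) or (int(token) if token.isdigit() else None)
    items = products_in(category)
    if index is None or not 1 <= index <= len(items):
        return None
    return items[index - 1]


def parse_quantity(text: str, default: int = 1) -> int:
    """Take the first digit string or number word in the utterance as the requested quantity."""
    for word in text.lower().split():
        if word.isdigit():
            return int(word)
        if word in CARDINALS:
            return CARDINALS[word]
    return default
```

Positions are resolved against the list currently on screen, which is what makes a phrase like "the second one" meaningful to the bot.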

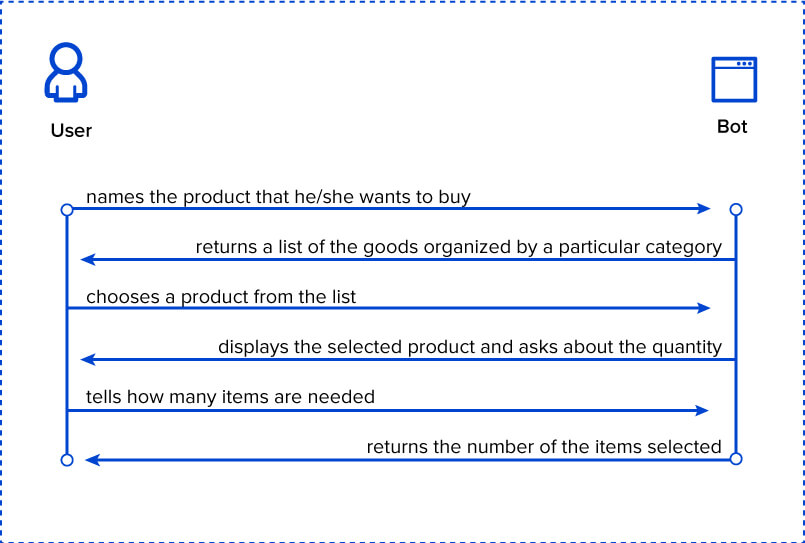

This scheme shows how the user and the bot interact. We tried to follow a simple, straightforward pattern.
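The user-bot exchange can be modeled as a tiny state machine: the bot either waits for a product choice or waits for the quantity of the product just chosen. The Python sketch below illustrates that pattern; the intent names (`SelectProduct`, `Quantity`, `Cancel`, `Checkout`), the reply texts, and the two-state design are our assumptions, not a transcription of the authors' scheme.

```python
from enum import Enum, auto
from typing import Dict, Optional


class State(Enum):
    BROWSING = auto()
    AWAITING_QUANTITY = auto()


class ShoppingDialog:
    """One user's conversation: pick a product, say how many, then check out."""

    def __init__(self) -> None:
        self.state = State.BROWSING
        self.pending: Optional[str] = None  # product waiting for a quantity
        self.cart: Dict[str, int] = {}

    def handle(self, intent: str, value: str = "") -> str:
        """Consume one recognized intent and return the text to speak back."""
        if self.state is State.AWAITING_QUANTITY:
            return self._handle_quantity(intent, value)
        if intent == "SelectProduct":
            self.pending = value
            self.state = State.AWAITING_QUANTITY
            return f"How many {value} would you like?"
        if intent == "Checkout":
            if not self.cart:
                return "Your cart is empty."
            summary = ", ".join(f"{qty} x {name}" for name, qty in self.cart.items())
            self.cart.clear()
            return f"Order placed: {summary}."
        return "Name a product, or say checkout."

    def _handle_quantity(self, intent: str, value: str) -> str:
        if intent == "Cancel":
            self.state, self.pending = State.BROWSING, None
            return "OK, cancelled."
        if intent == "Quantity" and value.isdigit() and int(value) > 0:
            product = self.pending or ""
            self.cart[product] = self.cart.get(product, 0) + int(value)
            self.state, self.pending = State.BROWSING, None
            return f"Added {value} {product} to your cart."
        # Anything else re-prompts, so the bot never adds an item without a quantity.
        return f"Please tell me how many {self.pending} you need."
```

Keeping the state explicit makes it easy to see which replies are possible at each step, which helps when the only debugging tool is a log.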

It's worth mentioning the Prism framework, which we also used while building our app. We opted for Prism because it helps build maintainable and testable XAML applications with Xamarin.Forms. The framework implements a set of design patterns that help create well-structured XAML applications, including MVVM, dependency injection, commands, EventAggregator, and others. We also used the Unity container for dependency injection.
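Prism's EventAggregator lets view models communicate through events instead of direct references. The Python sketch below reproduces the core publish/subscribe idea in simplified form; Prism's real C# API (`GetEvent<TEvent>().Subscribe(...)` and `.Publish(...)`) also offers thread-dispatch options and subscription filters, and the `ProductAddedEvent` and `CartBadgeViewModel` names here are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List, Type

Handler = Callable[[Any], None]


class PubSubEvent:
    """Base class for event types; each subclass acts as a separate channel."""


class EventAggregator:
    """Minimal publish/subscribe hub so view models never reference each other directly."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[Type[PubSubEvent], List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: Type[PubSubEvent], handler: Handler) -> Callable[[], None]:
        """Register a handler and return a function that unsubscribes it."""
        self._handlers[event_type].append(handler)

        def unsubscribe() -> None:
            if handler in self._handlers[event_type]:
                self._handlers[event_type].remove(handler)

        return unsubscribe

    def publish(self, event_type: Type[PubSubEvent], payload: Any) -> None:
        # Iterate over a copy so handlers may unsubscribe while being notified.
        for handler in list(self._handlers[event_type]):
            handler(payload)


class ProductAddedEvent(PubSubEvent):
    """Hypothetical event: the bot added a product to the cart (payload: product name)."""


class CartBadgeViewModel:
    """Hypothetical view model that keeps an on-screen cart counter in sync."""

    def __init__(self, events: EventAggregator) -> None:
        self.count = 0
        events.subscribe(ProductAddedEvent, self._on_product_added)

    def _on_product_added(self, product: str) -> None:
        self.count += 1
```

The voice-handling code only publishes `ProductAddedEvent`; it never needs to know which screens react to it.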

The scheme below shows the interaction our application provides in more detail.

Voice-controlled shopping assistant application interaction

Wrapping up the experiment: a voice-controlled shopping assistant

Curious how things looked by the end of our experiment? Here is a video showing how our bot actually works.

As you can see, by using Tizen and the other tools mentioned above, we managed to provide the interaction we aimed for. For now, our assistant is just a raw demo, but we are looking forward to improving and extending it. It would be great to enable the assistant to confirm online purchase orders, so that no additional manual interaction from the user is required. We would also like to integrate it with instant messengers. Plenty of cool stuff ahead, especially since the first official version of Tizen .NET is scheduled for release in September 2017 as part of Tizen 4.0. Version 4.0 is intended to support a variety of IoT devices (the full list is here, in case you are interested).

As for our impressions of developing for Tizen, the .NET tools preview is pretty good in terms of usability. Sure, there were some pitfalls, which we touched upon above, but overall the experience was useful and definitely worth the time and effort we invested. What we like about Tizen is that it lets you work with multiple devices: wearables, TVs, smart refrigerators, and many others. So it looks like more interactive use cases featuring smart gadgets are yet to come.

Personally, we believe that smart assistants have a fair chance of becoming part of our daily routines in multiple areas. Why not? They can help us perform time-consuming tasks faster and more easily, provided, of course, that they really work the way we need them to. But that is just another challenge for us as developers, and we love challenges. What do you think?
