Common media such as pictures, audio and video are often no longer enough to instantly engage and thrill ever more demanding audiences. In search of ways to deliver an exceptional digital user experience, businesses turn to technically advanced methods such as 3D, virtual reality and augmented reality (AR). AR is a versatile technology that combines the real world with digital data, allowing various types of content (images, 3D models or video) to be displayed on top of markers placed in our physical environment.

Basically, there are two types of commercial AR. The first one requires bulky custom equipment: for example, check out this Pepsi Max campaign. The second type requires the user to install a native app before plunging into an AR experience (here is how Vespa and Lexus did it).

Both types are fine if you are a famous brand with millions of fans all over the world. But when encountering a new company or technology, the average user is hardly inclined to install a native app, while a business might not be ready to spend millions on custom hardware.

Meanwhile, more and more services are migrating to the web to provide quick access from a wide range of devices without any installation. Having found no examples of commercial projects using web-based augmented reality, we decided to experiment with this technology and check whether it is ready for wide adoption.

The Concept

We challenged ourselves with the following questions:

- What are the capabilities and limitations of Augmented Reality with regard to the web?

- What can be produced out of the available instruments?

- Can a web app built with ready-made web libraries compete with a native application in terms of performance?

- Is the technology ready for commercial use on mobile devices?

Over the course of the project, we created a demo web application with augmented reality and tested its performance on several devices:

| | PC | Tablet: Asus Transformer Pad TF103C | Smartphone: LG Optimus L5 II E450 |
|---|---|---|---|
| OS | Windows 8 | Android 5.0.1 | Android 4.1 |
| Browser | Opera | Firefox | Firefox |
| CPU | Intel i5-3570, 4 × 3.4 GHz | 4 × 1.33 GHz | 1 × 1 GHz |

The AR marker types we experimented with included:

- QR code, inverted QR code;

- Three-color sequences;

- Images;

- Hybrid marker – an image in a black rectangular frame.

Let’s now have a closer look at how we actually did our experiment and the results we obtained.

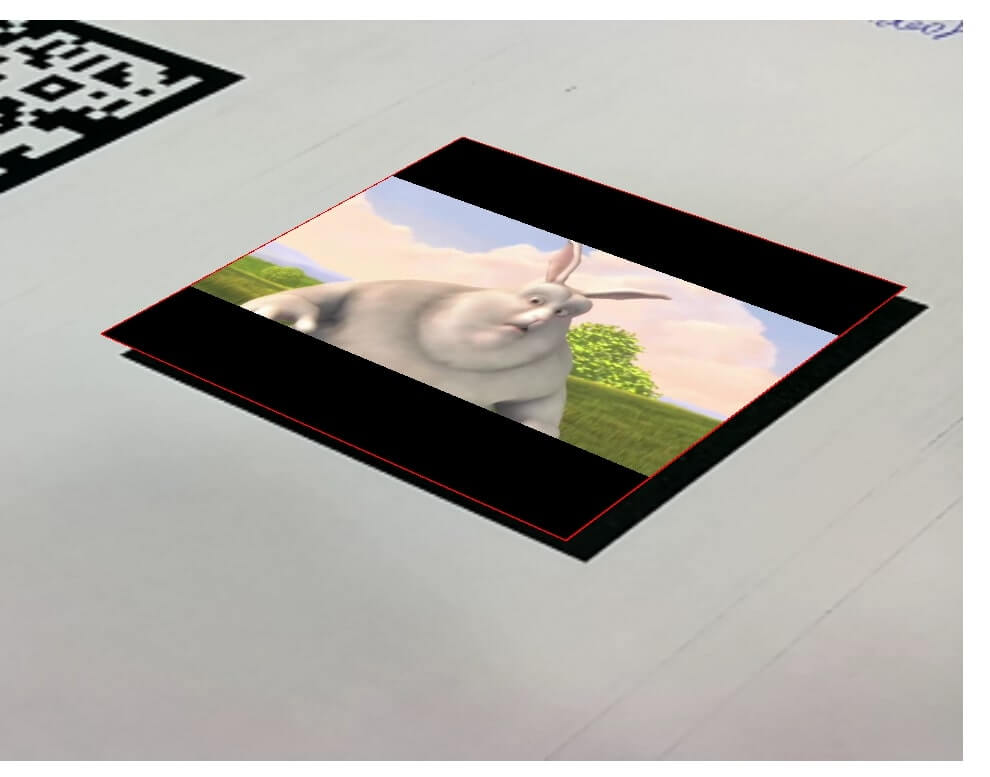

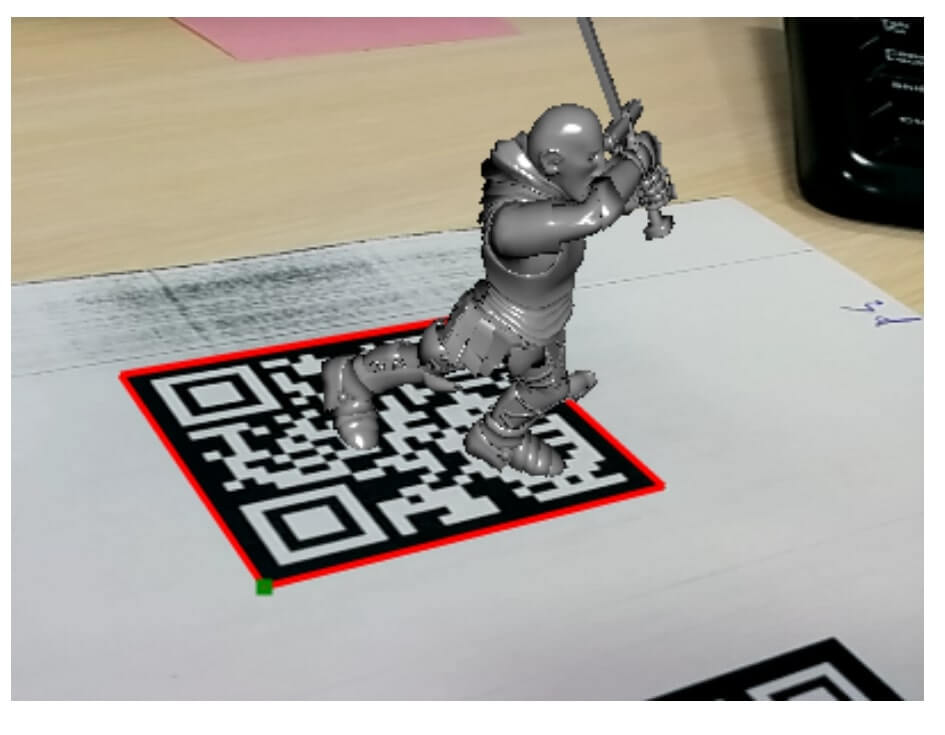

QR Tracking

To create an AR experience with a QR code as a marker, we used js-aruco, a JavaScript port of the OpenCV-based ArUco library. At first, we developed simple demos to check the performance and then used the library to create augmented reality with QR codes as markers.

The demos showed quite good results – an average of 40 FPS (Frames Per Second) on the PC, 8 FPS on the tablet and 6 FPS on the phone. js-aruco allowed easy detection of the marker’s orientation in space, so we used static and animated 3D models rendered with three.js as our displayable content.

Since js-aruco is quick and precise at finding rectangles, we decided to invert the QR code so that it had an easily detectable rectangular shape.

Our recognition algorithm was the following:

- We sorted all the rectangles from the biggest to the smallest. First, the app detected the biggest rectangle, the frame around the marker. Then, inside this rectangle, it located three smaller rectangles of roughly equal area (within an empirically selected tolerance). This way, we tried to locate the mandatory rectangular elements in the corners of the QR code.

- Once the corner rectangles were located, the app continued the recognition by checking that all three of them were inside the frame.

- Next, the app had to determine the marker's orientation within the successfully recognized frame. To do this, we calculated the angles of the triangle formed by the three rectangles of the marker. The vertex with the biggest angle was considered the top left corner of the marker.

- With the top left corner of the marker detected, we searched for the frame vertex closest to it. Because js-aruco returns the frame vertices in clockwise order, finding this single vertex gives the app enough information to determine the orientation of the marker.
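The steps above can be sketched in TypeScript. This is a minimal illustration under stated assumptions, not the code of our demo: every detected rectangle is assumed to arrive as four corner points in clockwise order (as js-aruco provides them), and the `Point`/`Quad` types, `orientMarker` and its `tolerance` parameter (the allowed relative spread of the three corner-rectangle areas) are hypothetical names chosen for the example.

```typescript
// Sketch (not the demo's code): orient an inverted-QR marker from detected rectangles.
// Assumes every rectangle is given as 4 corners in clockwise order.
type Point = { x: number; y: number };
type Quad = Point[]; // exactly 4 corners, clockwise

// Shoelace formula: absolute area of a polygon.
function area(q: Quad): number {
  let s = 0;
  for (let i = 0; i < q.length; i++) {
    const a = q[i], b = q[(i + 1) % q.length];
    s += a.x * b.y - b.x * a.y;
  }
  return Math.abs(s) / 2;
}

// Mean of the corners; for a rectangle this is its center.
function center(q: Quad): Point {
  const n = q.length;
  return {
    x: q.reduce((s, p) => s + p.x, 0) / n,
    y: q.reduce((s, p) => s + p.y, 0) / n,
  };
}

// A point lies inside a convex quad if it is on the same side of every edge.
function insideConvex(p: Point, q: Quad): boolean {
  let sign = 0;
  for (let i = 0; i < q.length; i++) {
    const a = q[i], b = q[(i + 1) % q.length];
    const cross = (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
    if (cross === 0) continue; // on the edge line: accept
    if (sign === 0) sign = Math.sign(cross);
    else if (Math.sign(cross) !== sign) return false;
  }
  return true;
}

// Angle (radians) at vertex v between the directions towards a and b.
function angleAt(v: Point, a: Point, b: Point): number {
  const ux = a.x - v.x, uy = a.y - v.y, wx = b.x - v.x, wy = b.y - v.y;
  const cos = (ux * wx + uy * wy) / (Math.hypot(ux, uy) * Math.hypot(wx, wy));
  return Math.acos(Math.min(1, Math.max(-1, cos))); // clamp rounding errors
}

// Returns the frame corners rotated so that index 0 is the marker's top-left
// corner, or null if no frame with three similar corner rectangles is found.
function orientMarker(rects: Quad[], tolerance = 0.25): Quad | null {
  const sorted = [...rects].sort((a, b) => area(b) - area(a)); // biggest first
  if (sorted.length < 4) return null;
  const frame = sorted[0]; // the biggest rectangle is the frame
  // Only rectangles lying completely inside the frame can be corner elements.
  const inner = sorted.slice(1).filter((r) => r.every((p) => insideConvex(p, frame)));
  // Look for three neighbours (by area) whose areas differ by at most `tolerance`.
  for (let i = 0; i + 2 < inner.length; i++) {
    const biggest = area(inner[i]), smallest = area(inner[i + 2]);
    if (smallest === 0 || biggest / smallest > 1 + tolerance) continue;
    const c = [center(inner[i]), center(inner[i + 1]), center(inner[i + 2])];
    // The triangle vertex with the biggest angle marks the top-left corner.
    const angles = c.map((v, k) => angleAt(v, c[(k + 1) % 3], c[(k + 2) % 3]));
    const topLeft = c[angles.indexOf(Math.max(...angles))];
    // The frame vertex closest to it is the marker's top-left vertex.
    const d = frame.map((p) => Math.hypot(p.x - topLeft.x, p.y - topLeft.y));
    const start = d.indexOf(Math.min(...d));
    // Corners are clockwise, so rotating the array fixes the whole orientation.
    return [...frame.slice(start), ...frame.slice(0, start)];
  }
  return null;
}
```

Because the corners are already ordered clockwise, rotating the array is the entire orientation step: downstream code (for example, whatever positions the three.js content) can rely on index 0 being the marker's top-left vertex.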

Below are the results of the QR marker tests with different types of AR content.

| | PC | Asus Transformer | LG Optimus L5 |
|---|---|---|---|
| QR | 35-60 FPS | 7-15 FPS | 1-4 FPS |
| QR + image | 30-60 FPS | 7-15 FPS | 1-3 FPS |
| QR + video | 30-60 FPS | 7-15 FPS | 1-3 FPS |
| QR + 3D model | 30-60 FPS | 7-13 FPS | 1-2 FPS |

We also introduced an optimization that reduced the number of calculations and improved performance: after the marker is found, the application memorizes its position and area. In the next frame, the largest of all detected rectangles is compared with the memorized position and area of the marker. If they coincide (within a given error), the rectangle is considered to be the previously found marker, and its new position and area are memorized again. Otherwise, the marker is considered lost and the application has to search for it again. We also used this approach for hybrid markers.
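A minimal sketch of this optimization, assuming each detected rectangle is summarized by its center and area. The `MarkerMemory` class and its two tolerances (`maxShift` in pixels and `maxAreaChange` as a fraction of the previous area) are hypothetical; our actual error thresholds were chosen empirically and are not listed here.

```typescript
// Sketch: remember the marker between frames instead of searching from scratch.
// Tolerance defaults are illustrative, not the values used in the demo.
type TrackedRect = { cx: number; cy: number; area: number }; // center and area, px

class MarkerMemory {
  private last: TrackedRect | null = null;
  private readonly maxShift: number; // allowed movement of the center, px
  private readonly maxAreaChange: number; // allowed relative change of the area

  constructor(maxShift = 20, maxAreaChange = 0.2) {
    this.maxShift = maxShift;
    this.maxAreaChange = maxAreaChange;
  }

  // Call after a full search has located the marker.
  remember(marker: TrackedRect): void {
    this.last = marker;
  }

  // Returns true if the largest rectangle of this frame is still the marker;
  // false means the marker is lost and a full search is needed.
  track(rects: TrackedRect[]): boolean {
    if (this.last === null || rects.length === 0) {
      this.last = null;
      return false;
    }
    const largest = rects.reduce((a, b) => (b.area > a.area ? b : a));
    const shift = Math.hypot(largest.cx - this.last.cx, largest.cy - this.last.cy);
    const areaChange = Math.abs(largest.area - this.last.area) / this.last.area;
    if (shift <= this.maxShift && areaChange <= this.maxAreaChange) {
      this.last = largest; // memorize the new position and area
      return true;
    }
    this.last = null; // marker lost
    return false;
  }
}
```

Only one comparison per frame is needed while tracking succeeds, which is where the savings come from; the full rectangle analysis runs again only after `track` returns false.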

Find the demo here https://github.com/eleks/rnd-ar-web (run index.html)

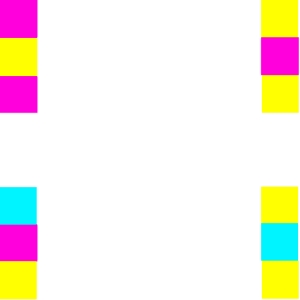

Color Tracking

The tracking.js library can detect elements of predefined colors in a video sequence. We decided to use this feature to create an AR marker. However, we immediately realized that an object of a single color cannot serve as a marker, because there might be other objects of that color around, and that can be neither predicted nor controlled. Our idea was to track three-color sequences instead of separate colors.

In tracking.js, each detected color spot is enclosed in a rectangle defined by the position of its top left vertex together with its width and height. This rectangle also carries information about the color. In our case, the marker (a big rectangle) was built from sequences of three rectangles of predetermined colors, and a sequence was accepted only if it met the following criteria:

- the distance between the first and second rectangle in the sequence is shorter than the diagonal of the first rectangle;

- the distance between the second and third rectangle is shorter than the diagonal of the second rectangle;

- the distance between the first and third rectangle is longer than the diagonal of the first rectangle.
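The three criteria translate almost directly into code. In this sketch, the rectangle fields (`x`, `y`, `width`, `height`, `color`) mirror what the tracking.js `ColorTracker` reports for each blob, while measuring the distance between two rectangles from center to center is our assumption; all function names are hypothetical. The point of an accepted sequence is the center of its second rectangle.

```typescript
// Sketch: checking the three-rectangle color sequence criteria.
// Fields mirror the rectangles the tracking.js ColorTracker reports for each blob.
type ColorRect = { x: number; y: number; width: number; height: number; color: string };

function centerOf(r: ColorRect): [number, number] {
  return [r.x + r.width / 2, r.y + r.height / 2];
}

// Assumption: "distance between rectangles" is measured between their centers.
function dist(a: ColorRect, b: ColorRect): number {
  const [ax, ay] = centerOf(a);
  const [bx, by] = centerOf(b);
  return Math.hypot(ax - bx, ay - by);
}

function diagonal(r: ColorRect): number {
  return Math.hypot(r.width, r.height);
}

// The three criteria for an ordered triple (first, second, third).
function isSequence(first: ColorRect, second: ColorRect, third: ColorRect): boolean {
  return (
    dist(first, second) < diagonal(first) &&
    dist(second, third) < diagonal(second) &&
    dist(first, third) > diagonal(first)
  );
}

// Tries every combination of blobs with the expected colors and returns the
// point of each valid sequence: the center of its second rectangle.
function findSequencePoints(rects: ColorRect[], order: [string, string, string]): [number, number][] {
  const ofColor = (c: string) => rects.filter((r) => r.color === c);
  const points: [number, number][] = [];
  for (const a of ofColor(order[0]))
    for (const b of ofColor(order[1]))
      for (const c of ofColor(order[2]))
        if (isSequence(a, b, c)) points.push(centerOf(b));
  return points;
}
```

The brute-force triple loop is acceptable only while the number of detected blobs per color stays small; with many same-colored objects in view, the number of combinations to check grows quickly.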

We tested a sequence of three contrasting colors (cyan, magenta and yellow), but any three colors can be used. Overall, our marker had four points (the vertices of the large rectangle), each marked by its own color sequence, and the point of each sequence was the center of its second rectangle. Once all four points were located, the marker was considered found and the content was displayed (we rendered video over the marker using the corner pinning technique).
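Corner pinning maps the four corners of the video onto the four located points with a projective transform (a homography). The sketch below uses the standard square-to-quadrilateral formulas (Heckbert) and, as one possible rendering path that we do not claim our demo used, converts the result into a CSS `matrix3d()` transform for a `<video>` element with `transform-origin: 0 0`. The function names are hypothetical, and the points are expected in the order top-left, top-right, bottom-right, bottom-left.

```typescript
// Sketch: corner pinning with a square-to-quad homography (Heckbert's formulas).
type Pt = { x: number; y: number };

// Homography [a, b, c, d, e, f, g, h] mapping (0,0), (1,0), (1,1), (0,1)
// to q[0], q[1], q[2], q[3] (top-left, top-right, bottom-right, bottom-left).
function squareToQuad(q: Pt[]): number[] {
  const [p0, p1, p2, p3] = q;
  const dx1 = p1.x - p2.x, dx2 = p3.x - p2.x, dx3 = p0.x - p1.x + p2.x - p3.x;
  const dy1 = p1.y - p2.y, dy2 = p3.y - p2.y, dy3 = p0.y - p1.y + p2.y - p3.y;
  const den = dx1 * dy2 - dx2 * dy1;
  if (den === 0) throw new Error("degenerate quadrilateral");
  const g = (dx3 * dy2 - dx2 * dy3) / den;
  const h = (dx1 * dy3 - dx3 * dy1) / den;
  return [
    p1.x - p0.x + g * p1.x, p3.x - p0.x + h * p3.x, p0.x,
    p1.y - p0.y + g * p1.y, p3.y - p0.y + h * p3.y, p0.y,
    g, h,
  ];
}

// Applies the homography to a point (u, v) of the unit square.
function mapPoint(H: number[], u: number, v: number): Pt {
  const [a, b, c, d, e, f, g, h] = H;
  const w = g * u + h * v + 1; // projective divisor
  return { x: (a * u + b * v + c) / w, y: (d * u + e * v + f) / w };
}

// CSS transform for an element of size width x height (transform-origin: 0 0).
// matrix3d() lists the 4x4 matrix column by column; dividing by width and
// height first maps the element's pixel box onto the unit square.
function toCssMatrix3d(H: number[], width: number, height: number): string {
  const [a, b, c, d, e, f, g, h] = H;
  const m = [
    a / width, d / width, 0, g / width,
    b / height, e / height, 0, h / height,
    0, 0, 1, 0,
    c, f, 0, 1,
  ];
  return `matrix3d(${m.join(", ")})`;
}
```

A projective map (rather than an affine one) is what makes the pinned video follow the marker when it is viewed at an angle: the center of the video lands on the intersection of the quad's diagonals, not on the average of its corners.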

Predictably, the better the camera and lighting, the better the color recognition. On the desktop the marker was found quite quickly (~24 FPS), but on the tablet performance dropped dramatically (down to 3 FPS). Color markers thus turned out to be slower than QR markers. They are also just as conspicuous and hardly blend into the environment, which cancels out any advantage they might have had over QR markers.

The figures we obtained are the following:

| | PC | Asus Transformer | LG Optimus L5 |
|---|---|---|---|
| Tracking, FPS | 15-50 | 3-6 | 1-3 |

Find the demo here https://github.com/eleks/rnd-ar-web (run index.html)

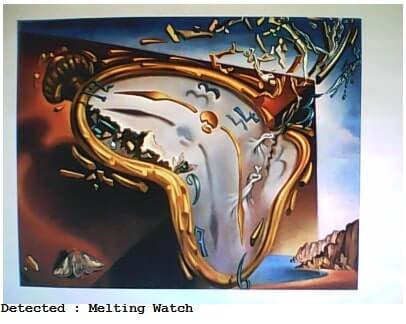

Image Tracking

We used the FAST corner detection algorithm and the BRIEF feature descriptors from tracking.js for image tracking. These algorithms detect distinctive points in an image and compute their descriptors, which are then compared with the points and descriptors of another image; if they match, the latter is considered the sought-after marker. To eliminate false matches, we added an extra verification to the algorithm: the app chooses a sequence of matched points that form a polygon and then, for both images, checks how the sides of that polygon rotate in one direction. If the rotation angles coincide, the points are considered verified.
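A sketch of the matching and verification steps under stated assumptions: descriptors are binary strings packed into 32-bit words (BRIEF descriptors are bit strings, so Hamming distance is the natural way to compare them), matches are found by brute force, and the polygon check is our reading of the verification described above, comparing the turning angle between consecutive polygon sides in both images. All names and thresholds (`maxDist`, `tol`) are hypothetical, and this is not the tracking.js matcher itself.

```typescript
// Sketch: brute-force binary-descriptor matching plus a polygon consistency check.
type Pt = { x: number; y: number };

// Number of set bits in a 32-bit word (classic SWAR popcount).
function popcount32(v: number): number {
  v = v - ((v >>> 1) & 0x55555555);
  v = (v & 0x33333333) + ((v >>> 2) & 0x33333333);
  return Math.imul((v + (v >>> 4)) & 0x0f0f0f0f, 0x01010101) >>> 24;
}

// Hamming distance between two descriptors stored as arrays of 32-bit words.
function hamming(a: Uint32Array, b: Uint32Array): number {
  let d = 0;
  for (let i = 0; i < a.length; i++) d += popcount32(a[i] ^ b[i]);
  return d;
}

// For each marker descriptor, take the closest frame descriptor and keep the
// pair [markerIndex, frameIndex] only if the distance is at most maxDist.
function matchDescriptors(marker: Uint32Array[], frame: Uint32Array[], maxDist: number): [number, number][] {
  const matches: [number, number][] = [];
  marker.forEach((m, i) => {
    let best = -1;
    let bestDist = Infinity;
    frame.forEach((f, j) => {
      const d = hamming(m, f);
      if (d < bestDist) { bestDist = d; best = j; }
    });
    if (best >= 0 && bestDist <= maxDist) matches.push([i, best]);
  });
  return matches;
}

// Signed turning angle at every vertex of a closed polygon.
function turnAngles(poly: Pt[]): number[] {
  const n = poly.length;
  return poly.map((p, i) => {
    const prev = poly[(i + n - 1) % n], next = poly[(i + 1) % n];
    const ux = p.x - prev.x, uy = p.y - prev.y;
    const vx = next.x - p.x, vy = next.y - p.y;
    return Math.atan2(ux * vy - uy * vx, ux * vx + uy * vy);
  });
}

// Matched points a[i] <-> b[i] pass if both polygons turn by the same angles.
function polygonsAgree(a: Pt[], b: Pt[], tol = 0.1): boolean {
  if (a.length !== b.length) return false;
  const ta = turnAngles(a), tb = turnAngles(b);
  return ta.every((t, i) => {
    const diff = t - tb[i];
    return Math.abs(Math.atan2(Math.sin(diff), Math.cos(diff))) <= tol; // wrap to (-pi, pi]
  });
}
```

Turning angles do not change under rotation, uniform scaling or translation, so a correct set of matches passes the check even when the marker appears rotated or closer to the camera; strong perspective distortion would need a looser tolerance.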

Native libraries use algorithms similar to SURF or SIFT to search for markers. These perform many more calculations, but they are noise-resistant and recognize rotated and resized markers. Such calculations can be up to 13 times slower than FAST and BRIEF, which makes them too slow for real-time processing in JavaScript.
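A back-of-the-envelope check of why a 13-fold slowdown rules out real-time use, taking the 25-35 FPS that image tracking reached on our PC and assuming, for simplicity, that feature detection and description take up the whole frame time (so the resulting frame rates are pessimistic):

$$
\text{FPS}_{\text{SIFT/SURF}} \approx \frac{\text{FPS}_{\text{FAST+BRIEF}}}{13}, \qquad \frac{25}{13} \approx 1.9, \qquad \frac{35}{13} \approx 2.7
$$

Even on the PC this would leave roughly 2-3 FPS, and the mobile devices, which already managed only a few frames per second, would fall well below 1 FPS.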

The results shown by different devices with one image marker:

| | PC | Asus Transformer | LG Optimus L5 |
|---|---|---|---|
| Searching, FPS | 50 | 4 | 2 |
| Tracking, FPS | 25-35 | 2-3 | 0-1 |

With two image markers, we observed a minor decrease in performance on the PC, while the tablet and smartphone results remained just as disappointing:

| | PC | Asus Transformer | LG Optimus L5 |
|---|---|---|---|
| Searching, FPS | 45 | 4 | 2 |
| Tracking, FPS | 10-25 | 2-3 | 0-1 |

Find the demos here https://github.com/eleks/rnd-ar-web (run index.html)

Hybrid Tracking

We decided to combine the capabilities of js-aruco and tracking.js for this type of marker. As a marker, we selected an arbitrary image inside a thick black rectangular frame. We then used tracking.js to calculate the descriptors of the image, and js-aruco to detect the rectangle. Calculating descriptors in every frame required a lot of resources and made marker detection unacceptably slow. To avoid this, we searched for the black rectangle first and then compared the descriptors within the rectangle with those of the marker. If they matched, the app continued to track only the rectangle as the marker.
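The search-then-track logic can be sketched as a two-state pipeline. The `HybridHooks` interface is hypothetical: `findRectangle` stands in for the js-aruco rectangle search and `matchesMarker` for the tracking.js descriptor comparison, so neither reflects those libraries' real APIs. The point of the structure is that the expensive descriptor check runs only while the marker has not yet been confirmed.

```typescript
// Sketch: hybrid marker pipeline (cheap rectangle search every frame,
// expensive descriptor check only until the marker is confirmed).
type Point = { x: number; y: number };
type Quad = Point[];

// Hypothetical hooks, not js-aruco or tracking.js APIs.
interface HybridHooks<F> {
  findRectangle(frame: F): Quad | null; // e.g. rectangle detection
  matchesMarker(frame: F, rect: Quad): boolean; // e.g. descriptor comparison
}

type State = "searching" | "tracking";

class HybridTracker<F> {
  state: State = "searching";
  private hooks: HybridHooks<F>;

  constructor(hooks: HybridHooks<F>) {
    this.hooks = hooks;
  }

  // Returns the marker's corners for this frame, or null if it is not visible.
  process(frame: F): Quad | null {
    const rect = this.hooks.findRectangle(frame); // cheap: runs every frame
    if (rect === null) {
      this.state = "searching"; // frame lost: the image must be verified again
      return null;
    }
    if (this.state === "searching") {
      if (!this.hooks.matchesMarker(frame, rect)) return null; // expensive step
      this.state = "tracking";
    }
    return rect; // while tracking, only the black frame is followed
  }
}
```

As mentioned in the QR section, the position-and-area memorization was also applied to hybrid markers; in this structure it would refine the tracking state so that a different black rectangle is not mistaken for the confirmed one.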

While testing the app, we discovered that the marker had to occupy the entire screen for the descriptor search to be effective, so in practice it made no difference whether we searched for descriptors within the marker's rectangle or across the whole camera frame.

As a result, the demo with a single marker worked relatively fast (30 FPS on a desktop), but performance dropped dramatically (down to 6 FPS) whenever the descriptors were calculated. The time spent comparing descriptors grew with the number of compared images, and even with 10 pictures it took too long. Accuracy was also a problem: we used pencil sketches, and the algorithm occasionally mistook the wrong pictures for markers.
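Why the comparison time grows with the number of images can be seen in a minimal identification sketch: each reference image is compared in turn, so the cost is linear in the number of references. The `identify` function, the `countMatches` callback (a stand-in for a descriptor matcher such as BRIEF with Hamming distance) and the `minRatio` acceptance threshold are hypothetical.

```typescript
// Sketch: identifying which reference image (if any) is in the frame.
interface Reference<D> {
  name: string;
  descriptors: D[];
}

// Compares the frame with every reference in turn (cost grows linearly with
// the number of references) and returns the best one whose share of matched
// descriptors reaches minRatio, or null if none does.
function identify<D>(
  frame: D[],
  refs: Reference<D>[],
  countMatches: (ref: D[], frame: D[]) => number,
  minRatio = 0.3,
): string | null {
  let best: string | null = null;
  let bestRatio = 0;
  for (const ref of refs) {
    if (ref.descriptors.length === 0) continue;
    const ratio = countMatches(ref.descriptors, frame) / ref.descriptors.length;
    if (ratio >= minRatio && ratio > bestRatio) {
      best = ref.name;
      bestRatio = ratio;
    }
  }
  return best;
}
```

Raising `minRatio` reduces false positives of the kind we saw with similar-looking pencil sketches, at the price of more missed detections; it does nothing for the linear cost, which only shrinks if fewer references are compared per frame.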

Results shown by different devices with one hybrid marker:

| | PC | Asus Transformer | LG Optimus L5 |
|---|---|---|---|
| Searching rectangle, FPS | 30-60 | 15 | 3 |
| Searching image, FPS | 20 | 2-3 | 0-1 |
| Tracking rectangle, FPS | 45-60 | 15 | 3 |

With two hybrid markers, the results on our test devices were almost the same:

| | PC | Asus Transformer | LG Optimus L5 |
|---|---|---|---|
| Searching rectangle, FPS | 30-60 | 15 | 3 |
| Searching image, FPS | 15 | 2-3 | 0-1 |
| Tracking rectangle, FPS | 45-60 | 15 | 3 |

Find the demos here https://github.com/eleks/rnd-ar-web (run index.html)

Short (and somewhat frustrating) Summary

Our first and main impression: the demo is desperately slow. The web tools we tested cannot deliver the performance a viable product requires.

Still, we continue to experiment with AR technologies and hope that in the near future we will be able to build a web-based augmented reality demo app that delivers decent results.

If you would like to discuss your VR product ideas with our team of experts, we will be happy to hear from you. Contact us.
