A data mesh is a decentralised architecture devised by Zhamak Dehghani, director of Next Tech Incubation, principal consultant at Thoughtworks and a member of its technology Advisory Board.

According to Thoughtworks, a data mesh is intended to; “address[es] the common failure modes of the traditional centralised data lake or data platform architecture”, hinging on modern distributed architecture and “self-serve data infrastructure”.

Key uses for a data mesh

Data mesh's key aim is to enable you to get value from your analytical data and historical facts at scale. You can apply this approach in the case of frequent data landscape change, the proliferation of data sources, and various data transformation and processing cases. It can also be adapted depending on the speed of response to change.

There are a plethora of use cases for it, including:

- Building virtual data catalogues from dispersed sources

- Enabling a straightforward way for developers and DevOps team to run data queries from a wide variety of sources

- Allowing data teams to introduce a universal, domain-agnostic, automated approach to data standardization thanks to data meshes’ self-serve infrastructure-as-a-platform.

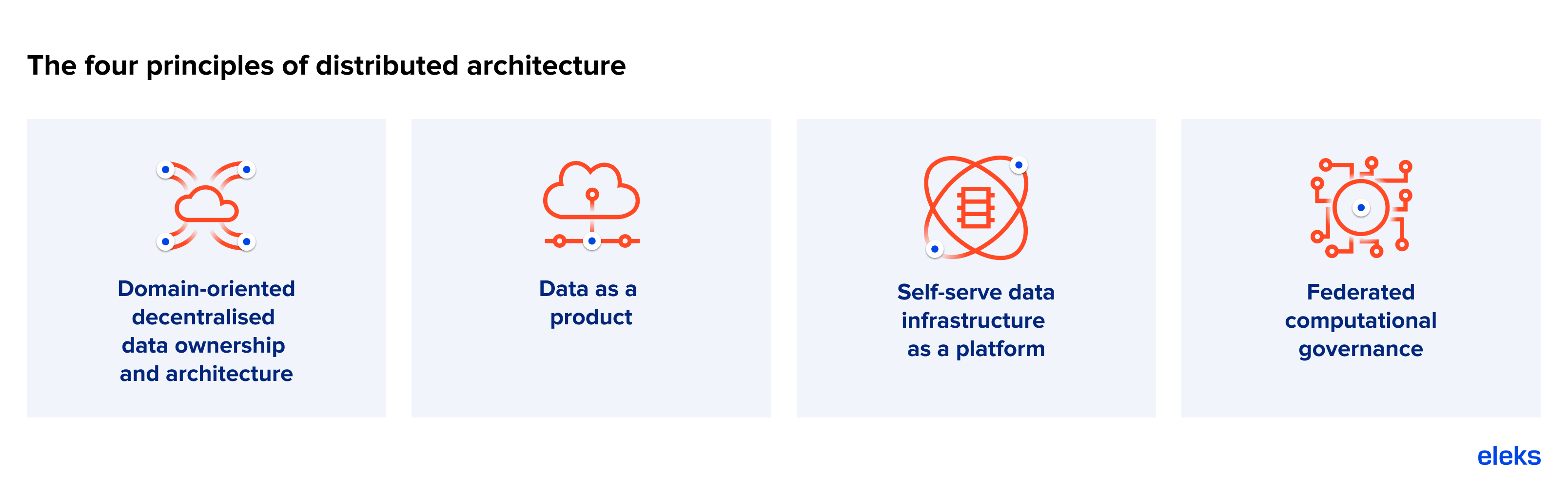

There are four key principles of distributed architecture. Let’s take a look at these in more detail.

Four core principles underpinning distributed architecture

The principles themselves aren’t new. They’ve been used in one form or another for quite some time, and, indeed, ELEKS has used them in various ways for a number of years. However, when applied together, what we get is, as Datameer describes it: “a new architectural paradigm for connecting distributed data sets to enable data analytics at scale”. It allows different business domains to host, share and access datasets in a user-friendly way.

1. Domain-oriented decentralised data ownership and architecture

The trend towards a decentralised architecture started decades ago—driven by the advent of service-oriented architecture and then — by microservices. It provides more flexibility, is easier to scale, easier to work on in parallel and allows for the reuse of functionality. Compared with old-fashioned monolithic data lakes and data warehouses (DWH), data meshes offer a far more limber approach to data management.

Embracing decentralisation of data has its own history. Various approaches have been documented in the past, including decentralised DWH, federated DWHs, and even Kimball's data marts (the heart of his DWH) are domain-oriented, supported and implemented by separate departments. Here at ELEKS, we apply this approach in situations whereby multiple software engineering teams are working collaboratively, and the overall complexity is high.

During one of our financial consulting projects, our client’s analytical department was split into teams based on the finance area they covered. This meant that most of the decision-making and analytical dataset creation could be done within the team, while team members could still read global datasets, use common toolsets and follow the same data quality, presentation and release best practices.

2. Data as a product

This simply means applying widely used product thinking to data and, in doing so, making data a first-class citizen: supporting operations with its owner and development team behind it.

Creating a dataset and guaranteeing its quality isn’t enough to produce a data product. It also needs to be easy for the user to locate, read and understand. It should conform to global rules too, in relation to things like versioning, monitoring and security.

3. Self-serve data infrastructure as a platform

A data platform is really an extension of the platform businesses use to run, maintain and monitor their services, but it uses a vastly different technology stack. The principle of creating a self-serve infrastructure is to provide tools and user-friendly interfaces so that generalist developers can develop analytical data products where, previously, the sheer range of operational platforms made this incredibly difficult.

ELEKS has implemented self-service architecture for both analytical end-users and development teams—self-service BI solutions using Power BI or Tableau—and power users. This has included the self-service creation of different types of cloud resources.

4. Federated computational governance

This is an inevitable consequence of the first principle. Wherever you deploy decentralised services—microservices, for example—it’s essential to introduce overarching rules and regulations to govern their operation. As Dehghani puts it, it’s crucial to "maintain an equilibrium between centralisation and decentralisation".

In essence, this means that there’s a “common ground” for the whole platform where all data products conform to a shared set of rules, where necessary while leaving enough space for autonomous decision-making. It’s this last point which is the key difference between decentralised and centralised approaches.

The challenges of data mesh

While it allows much more room to flex and scale, data mesh, as every other paradigm, shouldn’t be considered as a perfect-fit solution for every single scenario. As with all decentralised data architectures, there are a few common challenges, including:

- Ensuring that toolsets and approaches are unified (where applicable) across teams.

- Minimise the duplication of workload and data between different teams; centralised data management is often incredibly hard to implement company-wide.

- Harmonising data and unifying presentation. A user that reads interconnected data across several data products should be able to map it correctly.

- Making data products easy to find and understand, through a comprehensive documentation process.

- Establishing consistent monitoring, alerting and logging practices.

- Safeguarding data access controls, especially where a many-to-many relationship exists between data products.

Summary

As analytics becomes increasingly instrumental to how society operates day-to-day, organisations must look beyond monolithic data architectures and adopt principles that promote a truly data-driven approach. Data lakes and warehouses are not always flexible enough to meet modern needs.

Data meshes make data more available and discoverable by those that need to work with it, while making sure it remains secure and interoperable.

Related Insights

The breadth of knowledge and understanding that ELEKS has within its walls allows us to leverage that expertise to make superior deliverables for our customers. When you work with ELEKS, you are working with the top 1% of the aptitude and engineering excellence of the whole country.

Right from the start, we really liked ELEKS’ commitment and engagement. They came to us with their best people to try to understand our context, our business idea, and developed the first prototype with us. They were very professional and very customer oriented. I think, without ELEKS it probably would not have been possible to have such a successful product in such a short period of time.

ELEKS has been involved in the development of a number of our consumer-facing websites and mobile applications that allow our customers to easily track their shipments, get the information they need as well as stay in touch with us. We’ve appreciated the level of ELEKS’ expertise, responsiveness and attention to details.