Artificial intelligence (AI) mimics human thinking and has grown rapidly thanks to powerful computers and massive datasets. But this progress comes at a cost: every AI request travels to energy-hungry data centres, generating a significant environmental footprint.

We explore the environmental impact of artificial intelligence with Nazar Ivantsiv, Senior Data Scientist at ELEKS.

Is AI a significant contributor to energy consumption?

By 2030, global data centre electricity use is projected to more than double to ~945 TWh, exceeding Japan's current total electricity usage.

The mathematical foundation of modern AI consists of massive-scale matrix and vector calculations. These operations are highly parallelisable, meaning they can be broken down into millions of independent calculations and executed simultaneously.

This parallel nature makes standard CPUs, which are optimised for running a small number of tasks largely sequentially, inefficient for AI workloads. Instead, the industry uses GPUs (Graphics Processing Units) and specialised hardware like TPUs (Tensor Processing Units), which contain thousands of simpler cores designed to execute a massive number of parallel calculations at once.

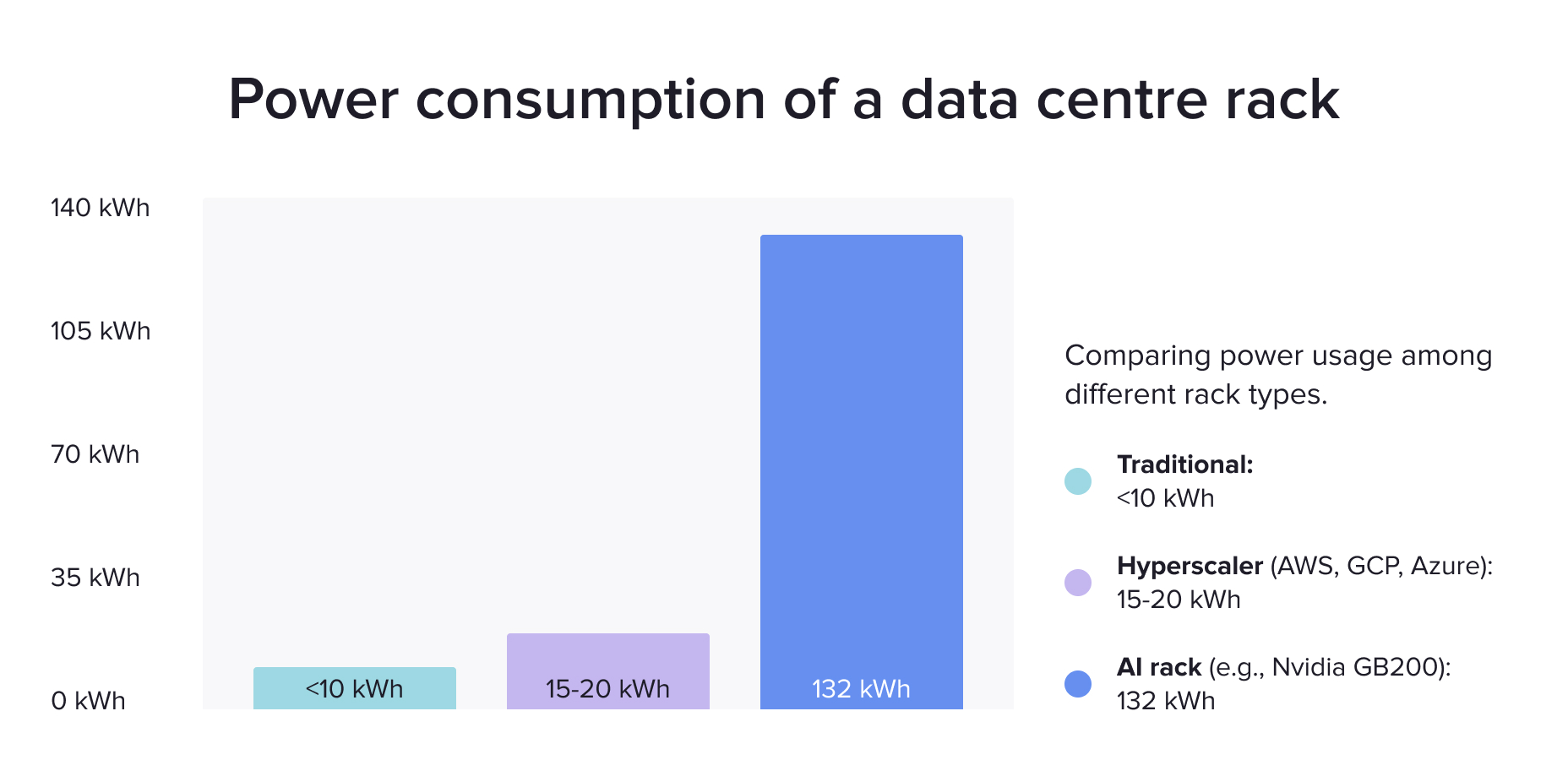
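To make the idea of parallelisable matrix calculations concrete, here is a minimal Python sketch. It assumes nothing beyond the standard library, and the function names (`dot`, `matmul_parallel`) are illustrative only. Each cell of the output matrix is an independent dot product, so all cells can be handed to separate workers. Python threads will not make this faster in practice; the point is only that no cell depends on any other, which is the property GPUs and TPUs exploit in hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of one row of A with one column of B."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(A, B, workers=4):
    """Multiply A by B, computing every output cell as an independent task."""
    cols = list(zip(*B))  # columns of B
    n_cols = len(cols)
    # One task per output cell: none of them depends on another.
    cells = [(i, j) for i in range(len(A)) for j in range(n_cols)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), cells))
    # Reassemble the flat list of results into rows.
    return [values[r * n_cols:(r + 1) * n_cols] for r in range(len(A))]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
result = matmul_parallel(A, B)
print(result)  # [[19, 22], [43, 50]]
```

A 2x2 product has only four independent cells; the matrices inside a large language model have millions, which is why hardware with thousands of simple cores suits the job.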

Given this hardware requirement, traditional CPU-based data centres are not suitable for training large-scale foundation models, like LLMs. The task requires specialised AI data centres equipped with high-density computer hardware and advanced cooling to operate efficiently.

While effective, these systems are incredibly power-hungry. Operating thousands of GPUs 24/7 results in a massive energy footprint, with AI data centres estimated to contribute up to 35-40% of global data centre energy consumption by 2030.

Many assume energy usage drops after training is complete. Is this accurate?

No, not once we factor in time. Historically, the main focus has been on the LLM training phase. But the cumulative cost of inference, where the model processes user prompts and answers questions, is significantly higher.

Training a large model consumes electricity on the scale of tens of gigawatt-hours. Then inference comes into play, and energy consumption per request drops by many orders of magnitude, to the order of watt-hours per prompt. But given billions of user requests, this leaves a significant footprint.

Although AI companies don't release official figures, estimates show that the cumulative electricity needed to serve a state-of-the-art model to its users can match the energy cost of its entire training within a few weeks to a couple of months.

Another comparison helps put this in perspective: an LLM request requires 5 to 10 times more energy than a simple web search.
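The break-even point between training and inference can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope calculation only. Every input (training energy, energy per prompt, prompts per day) is a hypothetical placeholder rather than a published figure, and the function name is illustrative.

```python
def days_to_match_training(training_mwh, wh_per_prompt, prompts_per_day):
    """Days of inference needed for cumulative energy to equal training energy."""
    # Convert daily inference energy from watt-hours to megawatt-hours.
    daily_inference_mwh = wh_per_prompt * prompts_per_day / 1_000_000
    return training_mwh / daily_inference_mwh


# Hypothetical inputs, chosen only to illustrate the calculation.
days = days_to_match_training(
    training_mwh=50_000,            # assumed: 50 GWh for one training run
    wh_per_prompt=1.0,              # assumed: 1 Wh per prompt
    prompts_per_day=1_000_000_000,  # assumed: one billion prompts per day
)
print(days)  # 50.0
```

With these assumed inputs, inference matches the training cost in 50 days. Changing any input shifts the result proportionally, which is why published estimates span a range.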

Are there other environmental costs aside from energy usage?

For sure. For every unit of energy used for computing, a data centre draws an additional 9% to 35% for cooling, power distribution and other support systems.

In turn, every kilowatt-hour of electricity results in CO2 emissions, the amount of which depends heavily on the local energy grid's mix of fossil fuels and renewables. The global average is around 450 grams of CO2 equivalent per kWh, meaning a data centre consuming thousands of megawatt-hours daily has a substantial carbon footprint from energy alone.
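The overhead and emission figures above combine into a simple estimate. The following sketch assumes the 9% to 35% overhead applies on top of compute energy and uses the global average of 450 g CO2e per kWh quoted above. The daily compute load of 1,000 MWh is a hypothetical value, and the function names are illustrative.

```python
GLOBAL_AVG_G_CO2E_PER_KWH = 450  # global average quoted in the text


def facility_energy_kwh(compute_kwh, overhead_fraction):
    """Total facility energy: compute plus cooling and other support systems."""
    return compute_kwh * (1 + overhead_fraction)


def emissions_tonnes(total_kwh, grid_g_per_kwh=GLOBAL_AVG_G_CO2E_PER_KWH):
    """CO2-equivalent emissions in tonnes for a given energy use and grid mix."""
    return total_kwh * grid_g_per_kwh / 1_000_000  # grams to tonnes


compute_kwh = 1_000_000  # assumed: 1,000 MWh of compute per day

low = emissions_tonnes(facility_energy_kwh(compute_kwh, 0.09))
high = emissions_tonnes(facility_energy_kwh(compute_kwh, 0.35))
print(f"{low:.1f} to {high:.1f} tonnes CO2e per day")  # 490.5 to 607.5
```

On a cleaner or dirtier grid, the result scales directly with the `grid_g_per_kwh` value, which is why the location of a data centre matters as much as its efficiency.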

In addition, data centres consume enormous amounts of water for cooling, with a single facility's daily usage equalling that of a small city. The constant demand for technological upgrades means servers and other components become hazardous e-waste in just 3-5 years. A data centre's full environmental footprint also includes the manufacturing of every component, from concrete to microchips.

Finally, constructing these massive facilities occupies vast areas of land that alter local landscapes and ecosystems.

Can this negative impact be mitigated?

During the “AI gold rush” happening now, the most profitable business is in selling “shovels” - providing enormous amounts of efficient compute.

In the medium term, this shifts the focus away from sustainable development. Although the major global industry players (Amazon, Alphabet, Microsoft, Meta, Oracle) are significant consumers of green energy, electricity demand from data centres is growing faster than new green energy supply is being added, leading to increased carbon emissions.

In the long run, the industry needs appropriate legal and economic incentives and regulation, as well as continued interest, research and investment from the global players.

For example, to keep its carbon-free pledge, Google has been investing heavily in a range of energy sources, including geothermal, both flavours of nuclear power, and renewables. It has contracted enough renewables to match its total consumption, though those sources don’t always deliver electricity when and where the company needs it. This is why Google is also investing in stable, carbon-free sources like nuclear fission and fusion.

Ways to reduce AI's environmental impact

1. Hardware and data centres

- Hardware and data centre improvements: specialised energy-efficient processing units such as TPUs and FPGAs, LLM-optimised hardware (e.g. Rubin CPX GPU for the LLM prefill phase), and alternative low-latency, high-throughput connectivity (e.g. next-generation hollow-core fibre optic cable)

- Ensuring usage of available low-carbon footprint energy sources (solar, wind, geothermal, nuclear)

- Considering power availability and cost, which vary by time of day and geographic location

- Researching efficient alternative sources of low-carbon energy, such as advanced nuclear reactors and nuclear fusion

2. Model and software

- Optimisation of AI model architecture (model pruning, quantisation, knowledge distillation)

- Using specialised ML models (aka Narrow AI) instead of general-purpose ones, where applicable

3. Leveraging AI for environmental benefits

- Using AI to optimise resource management

- AI for climate research and environment monitoring

4. General awareness

- Encouraging mindful use of AI tools

- Addressing the Jevons paradox in the long run: acknowledging that efficiency gains tend to increase overall demand, so AI energy consumption is expected to keep growing with technological progress.
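The point in the list above about power availability by time of day and geography can be illustrated with a small scheduling sketch. It assumes a forecast of grid carbon intensity per region and hour is available. The forecast values, region names and function names below are all hypothetical, and a real scheduler would also weigh cost, latency and data residency.

```python
def pick_greenest_slot(forecast):
    """Return the (region, hour) key with the lowest forecast carbon intensity."""
    return min(forecast, key=forecast.get)


def job_emissions_kg(job_kwh, g_per_kwh):
    """Emissions in kg CO2e for a job of the given size on the given grid."""
    return job_kwh * g_per_kwh / 1000


# Hypothetical forecast: (region, hour of day) -> g CO2e per kWh.
forecast = {
    ("north", 3): 120,
    ("north", 14): 310,
    ("south", 3): 420,
    ("south", 14): 90,
}

best = pick_greenest_slot(forecast)
job_kwh = 500  # assumed size of a deferrable batch job

print(best)                                            # ('south', 14)
print(job_emissions_kg(job_kwh, forecast[best]))       # 45.0
print(job_emissions_kg(job_kwh, max(forecast.values())))  # 210.0
```

This approach only suits workloads that can wait or move, such as batch training or evaluation jobs. Interactive inference usually has to run where and when users need it.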

Of these points, I want to emphasise model optimisation, the use of specialised ML models, and mindful use of AI-powered tools and APIs in general. This is especially relevant for scalable, business-specific applications.

This is where AI consulting and the involvement of data science specialists help in choosing the right tools and solutions, whether they are based on generative AI or on so-called Narrow AI/ML tools, which are also powerful but can be an order of magnitude more energy-efficient.
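As one example of model optimisation, quantisation stores model weights at lower numerical precision. The sketch below is a minimal illustration of symmetric 8-bit quantisation in plain Python, not how any particular framework implements it. The function names and sample weights are made up for the example.

```python
def quantise_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q, scale):
    """Recover approximate float weights from the quantised integers."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.03, 1.0]  # illustrative values only
q, scale = quantise_int8(weights)
restored = dequantise(q, scale)

print(q)  # [50, -127, 3, 100]
# Each restored weight is within half a quantisation step of the original.
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-12)
```

An 8-bit integer takes a quarter of the memory of a 32-bit float, so less data has to be moved and processed per prediction, at the cost of a small rounding error in each weight.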

We've talked about AI's environmental challenges. Are there ways AI benefits the environment?

We already have an established framework for evaluating such questions: the UN Sustainable Development Goals (SDG 2030). Let’s look at this from an SDG perspective. How can the AI revolution help us achieve these goals?

- SDG 2: Zero hunger — AI is being instrumental in transforming food systems to be more efficient and sustainable, which is crucial for ending hunger.

- SDG 3: Good health and well-being — The healthcare sector is being revolutionised by AI, leading to more accurate diagnoses, personalised treatments, and accelerated medical research.

- SDG 4: Quality education — AI is helping to create more personalised and accessible learning experiences for students around the globe.

- SDG 7: Affordable and clean energy — AI is playing a vital role in optimising the generation, distribution, and consumption of clean and renewable energy.

- SDG 13: Climate action — Addressing climate change requires a deep understanding of complex environmental systems, and AI is a powerful tool for this purpose.

On the industry side, a remarkable example is Google's AlphaEvolve, an LLM-powered coding agent that improved Google's own algorithms and infrastructure.

AlphaEvolve enhanced the efficiency of Google's data centres, chip design and AI training processes — including training the large language models underlying AlphaEvolve itself. It has also helped design faster matrix multiplication algorithms and find new solutions to open mathematical problems, showing incredible promise for applications across many areas.

Diagram showing how AlphaEvolve helps Google deliver a more efficient digital ecosystem

- Data centre efficiency: AlphaEvolve developed a more efficient and human-readable heuristic for Borg, Google's data centre orchestrator. This resulted in a continuous 0.7% recovery of global compute resources and provided code that is interpretable, predictable, and easy to deploy.

- TPU hardware design: It proposed a verified Verilog rewrite that optimised a key matrix multiplication circuit in Google's TPUs by removing unnecessary bits. This collaboration with hardware engineers helps accelerate the design of future specialised chips.

- Accelerated model training: It sped up model training by optimising matrix multiplication, which accelerated a crucial kernel in Gemini's architecture by 23% (reducing overall training time by 1%). It also optimised low-level GPU instructions, achieving up to a 32.5% speedup for the FlashAttention kernel in Transformer models, saving significant time, compute, and energy.
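The gap between a 23% kernel speedup and a 1% reduction in overall training time follows from Amdahl's law: speeding up one part of a job only helps in proportion to that part's share of the total time. The sketch below is a rough illustration, assuming "23%" means the kernel runs 1.23 times faster. The implied share it computes is an inference under that assumption, not a figure from Google, and the function names are illustrative.

```python
def overall_time_saving(kernel_share, kernel_speedup):
    """Fraction of total time saved when a part that takes `kernel_share`
    of the run becomes `kernel_speedup` times faster (Amdahl's law)."""
    return kernel_share * (1 - 1 / kernel_speedup)


def implied_kernel_share(overall_saving, kernel_speedup):
    """Share of total time the kernel must occupy to yield the given saving."""
    return overall_saving / (1 - 1 / kernel_speedup)


# Assumption: the kernel became 1.23x faster and total time fell by 1%.
share = implied_kernel_share(overall_saving=0.01, kernel_speedup=1.23)
print(f"{share:.1%}")  # about 5.3% of training time
```

Under this reading, the optimised kernel accounts for roughly one twentieth of training time. At the scale of training a frontier model, even a 1% saving translates into a meaningful amount of compute and energy.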

What's your outlook for the future?

I am sure society will benefit in the long run from the wise, efficient, and sustainable adoption of AI tools. It will improve many areas of our lives:

- Automation and productivity

- Robotics and physical systems

- Enhanced decision-making

- Improved safety and risk management

- Accessible healthcare and longevity

- Scientific discoveries

- Personalised education

- An efficient and equitable society

- Climate change and environmental sustainability

The question is, will this progress take into account and minimise potential risks?

This is the responsibility of governments and corporations, and also ours as engineers and everyday users.

AI literacy is a crucial element of our sustainable future. Given the abundance of tools at our fingertips, including AI-powered ones, we must evaluate and choose wisely what to use for each problem we aim to solve.

Next time you are about to prompt an LLM for a recipe, or for a definition you could get from Wikipedia, consider using a regular web search instead. Deploying smaller, faster, and more energy-efficient models, or using so-called Narrow AI/ML instead of complex agentic systems where applicable, will also make a difference in the long run.

FAQs

Cloud computing can be sustainable for AI when providers use renewable energy sources and efficient infrastructure. While it offers flexible resource allocation, sustainability depends on the provider's energy optimisation capabilities and commitment to environmental responsibility.

Edge computing can be more sustainable than centralised cloud AI because it reduces data transmission and distributes computational performance closer to users. However, sustainability depends on local energy efficiency and the environmental impact of distributed renewable energy sources.

Sustainable machine learning is the practice of designing, developing, and deploying machine learning models with environmental responsibility in mind. It focuses on reducing the ecological impact of computational processes while maintaining model performance, promoting a balance between technological advancement and sustainability.
