Data science and machine learning are on hand to make sense and use of your vast reams of information. However, the success of your next intelligent solution depends largely on the quality of the machine learning data. If the quality of your information isn’t up to the mark, you are likely not to obtain any reliable results from all the intelligent tools that pass through your organisation.

It's all about data quality

Many are lead to believe that data quality should be a secondary consideration in the pursuit of machine learning, yet the figures don’t lie. IBM estimates that poor quality data costs US organisations $3.1 trillion every single year; the sum is deriving from large-scale errors and the workarounds undertaken by people in control of it.

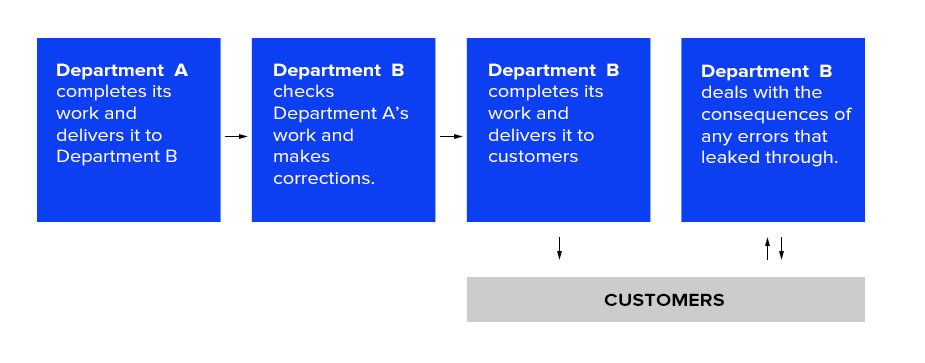

This huge figure is made to look even more significant on the backdrop of IDC’s $136 billion valuation of the big data market. The Harvard Business Review provides a graph which sums up the domino effect of bad data quality, which goes some way to explaining how this has been allowed to happen.

The Hidden Data Factory

Lots of reasons go into why data is found to be of poor quality. One of the more obvious is the need for companies to play the volume game, but the quality of your data should be of more importance than its quantity. ELEKS completed over 20 machine learning projects last year and around half of the cases demanded a data-cleansing effort before the modelling could start.

Use bad data and your machine-learning model will yield bad results, so any successful implementation of a machine-learning algorithm should require some form of data cleansing.

How can you tell good data from bad data?

Data quality is imperative, but how are you to know if your information really isn’t up to the required standard? Here are some of the ‘red flags’ for you to watch:

- It has missing variables and cannot be normalised to a unique basis.

- The data has been collected from lots of very different sources. Information from third parties may come under this banner.

- The data is not relevant to the subject of the algorithm. It might be useful, but not in this instance.

- The data contains contradicting values. This could see the same values for opposing classes or a very broad variation inside one class.

Upon your meeting of any one of these points, there’s a chance that your data will need to be cleaned prior to your implementation of a machine-learning algorithm.

Cleansing, rather than replacing, is likely the action you’re looking for here. Like with point three, it might be that your data is fit for use, just not for the purpose outlined. From our experience, you may need to allocate around 70-80% of your overall modelling time on things like data cleansing or the replacement of missing and contradicting data samples. Discovering poor data triggers actions like the merging of information into one database, the adding of new data or the refining of existing sources.

This approach is one of the main principles within an LLMOps workflow, where this data preparation phase becomes even more crucial, since the accuracy of embeddings, retrieval pipelines, and downstream model monitoring all depend on the consistency and cleanliness of the underlying datasets.

Conclusion

It’s possible to turn a poor database into one that’s ready for the transformation of a business. Actions like focusing on quality over volume and the uniformity of your information can go a long way to ensuring a seamless implementation of machine-learning algorithms.

The big point is to conduct this before commissioning any serious work on big data projects. In our own experience, the allocation of resource towards these actions often means that only 20-30% of our time is dedicated to actually modelling an algorithm.

Are you getting the most out of your data? Contact us to get expert assistance with your data-driven digital transformation.

Related Insights

The breadth of knowledge and understanding that ELEKS has within its walls allows us to leverage that expertise to make superior deliverables for our customers. When you work with ELEKS, you are working with the top 1% of the aptitude and engineering excellence of the whole country.

Right from the start, we really liked ELEKS’ commitment and engagement. They came to us with their best people to try to understand our context, our business idea, and developed the first prototype with us. They were very professional and very customer oriented. I think, without ELEKS it probably would not have been possible to have such a successful product in such a short period of time.

ELEKS has been involved in the development of a number of our consumer-facing websites and mobile applications that allow our customers to easily track their shipments, get the information they need as well as stay in touch with us. We’ve appreciated the level of ELEKS’ expertise, responsiveness and attention to details.