AI is becoming integral to modern software development, impacting every step of the process—from deploying and testing to maintenance. In general, AI helps optimise routine tasks, freeing up developers to focus on more creative and complex work. Think of it as a super-smart assistant who never gets tired and catches the smallest errors one might miss.

One important area where AI solutions help is automating and improving code reviews. These reviews, which can be time-consuming when done manually, can be accelerated using AI technologies like machine learning and natural language processing. These technologies quickly spot issues and suggest fixes, making the process faster and more efficient.

In this article, we’ll explore AI code review and whether this task can be handled effectively without human help. Keep reading to find out more.

Understanding how AI code review tools work

AI-powered code review tools can seamlessly integrate with different development environments and version control systems. They provide real-time feedback during the coding process, enabling the identification and resolution of issues immediately. Whether through IDE plugins, continuous integration pipelines, or direct integration with platforms such as GitHub or GitLab, these tools are designed to make code reviews as natural as possible.

Key steps in AI-powered code review:

- Initial data gathering. The tool first gathers all relevant information about the pull request (PR), including the title, description, related files, and commit history. This helps it understand the scope and intent of the changes.

- Breaking down the code into chunks. Instead of splitting the code arbitrarily, the tool divides it into logical units such as functions or classes, ensuring each chunk is meaningful and includes necessary surrounding context. This approach helps the AI model understand the code better without losing important details.

- Dependency analysis. To build a thorough understanding, the tool also examines connections between files and within the code itself. This involves checking imports and related functions and making sure any referenced documentation or comments are included. The aim is to give the AI as much relevant context as possible, improving the accuracy and helpfulness of its analysis.

- Code normalisation. Before sending the code chunks to the AI for review, the tool standardises the code by cleaning up formatting inconsistencies and tagging metadata such as file paths and line numbers. This preparation ensures that the AI can focus on the logic and structure of the code rather than being distracted by superficial issues.
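
The chunking and normalisation steps above can be sketched roughly as follows. This is a minimal TypeScript illustration under stated assumptions, not how any particular tool implements them: the `CodeChunk` shape and the `chunkByTopLevelBlocks` function are hypothetical, and the brace counting deliberately ignores braces inside strings and comments.

```typescript
// Hypothetical shape of one chunk sent for review; field names are assumptions.
interface CodeChunk {
  filePath: string;
  startLine: number; // 1-based, inclusive
  endLine: number; // 1-based, inclusive
  text: string;
}

// Split a file into top-level blocks by tracking brace depth.
// Naive on purpose: braces inside strings or comments are not handled.
function chunkByTopLevelBlocks(filePath: string, source: string): CodeChunk[] {
  // Normalisation: strip trailing whitespace from every line.
  const lines = source.split("\n").map((l) => l.replace(/\s+$/, ""));
  const chunks: CodeChunk[] = [];
  let depth = 0;
  let start = -1; // index of the first line of the open chunk, -1 if none

  const pushChunk = (from: number, to: number): void => {
    chunks.push({
      filePath: filePath,
      startLine: from + 1,
      endLine: to + 1,
      text: lines.slice(from, to + 1).join("\n"),
    });
  };

  for (let i = 0; i < lines.length; i++) {
    const line = lines[i];
    if (start === -1) {
      if (line === "") continue; // skip blank lines between chunks
      start = i;
    }
    for (let j = 0; j < line.length; j++) {
      const ch = line.charAt(j);
      if (ch === "{") depth++;
      if (ch === "}") depth--;
    }
    // A chunk closes when a statement or block ends at depth 0, so a
    // leading comment stays attached to the function that follows it.
    const last = line.charAt(line.length - 1);
    if (depth === 0 && (last === "}" || last === ";")) {
      pushChunk(start, i);
      start = -1;
    }
  }
  if (start !== -1) pushChunk(start, lines.length - 1); // unterminated tail
  return chunks;
}

const sample = [
  "// Adds two numbers",
  "function add(a, b) {",
  "  return a + b;   ",
  "}",
  "",
  "function sub(a, b) {",
  "  if (a > b) {",
  "    return a - b;",
  "  }",
  "  return 0;",
  "}",
].join("\n");

const chunks = chunkByTopLevelBlocks("src/math.js", sample);
```

Here `chunks` holds two entries, one per function, each tagged with its file path and line range so that review comments can be mapped back to the right lines.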

It's important to note that everything mentioned above is theoretical, based on the descriptions of how certain tools work. But do they function successfully in practice? How well do they gather context for submission to the AI for review, and do they correctly divide it into chunks? Let's find out.

Unveiling the process: ELEKS’ investigation of AI code review tools for pull requests

Our main priority in this investigation was to explore the benefits and limitations of AI-powered code review tools for reviewing pull requests (PRs) and whether developers can rely on AI reviews.

In the current market, numerous code review tools claim to offer extensive capabilities, but many fall short in practice. Our goal was to find a tool with positive reviews that focuses specifically on reviewing PRs.

The main features we looked for in the tool were:

- Pull request summary: a high-level description that makes it quick to understand the changes and their impact on the product.

- Code walkthrough: a detailed, file-by-file explanation of the changes in the pull request.

- Code suggestions: feedback posted as review comments, with suggested fixes, on the changed lines of each file.

- The ability to learn to improve the review experience over time.

Given the evolving nature of AI-powered code review tools, obtaining comprehensive information was challenging. We curated a list of popular tools to identify the most promising options and chose three of them for a trial:

- Bito.io

- Codium

- CodeRabbit

After carefully evaluating these three tools, we chose CodeRabbit because of its strict focus on PR reviews and its support for all necessary features. It uses OpenAI's large language models, specifically GPT-3.5-turbo and GPT-4. GPT-3.5-turbo handles simpler tasks, such as summarising code differences and filtering trivial changes, while GPT-4 is used for detailed and comprehensive code reviews due to its advanced understanding. CodeRabbit doesn't support proprietary or custom AI models, but it allows customisation through YAML files or its UI.

It integrates seamlessly with GitHub and GitLab via webhooks, monitoring PR/MR events and performing thorough reviews on creation, incremental commits, and bot-directed comments.
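
As a rough illustration of how a webhook-driven reviewer might decide what to do with an incoming event, here is a minimal TypeScript sketch. It assumes GitHub-style deliveries (the `pull_request` event with `opened` and `synchronize` actions, and `issue_comment` with `created`); the `WebhookDelivery` shape, the `classifyDelivery` function, and the `@review-bot` handle are hypothetical and do not describe CodeRabbit's internals.

```typescript
// Minimal subset of a webhook delivery; real payloads carry many more fields.
interface WebhookDelivery {
  event: string; // value of GitHub's X-GitHub-Event header
  action: string; // the payload's "action" field
  commentBody?: string; // only present for comment events
}

type ReviewKind = "full" | "incremental" | "reply" | "none";

const BOT_MENTION = "@review-bot"; // hypothetical bot handle

function classifyDelivery(d: WebhookDelivery): ReviewKind {
  if (d.event === "pull_request") {
    if (d.action === "opened") return "full"; // PR was just created
    if (d.action === "synchronize") return "incremental"; // new commits pushed
    return "none";
  }
  if (d.event === "issue_comment" && d.action === "created") {
    // Only react to comments that address the bot directly.
    const body = d.commentBody || "";
    return body.indexOf(BOT_MENTION) !== -1 ? "reply" : "none";
  }
  return "none";
}

const onCreate = classifyDelivery({ event: "pull_request", action: "opened" });
const onPush = classifyDelivery({ event: "pull_request", action: "synchronize" });
const onMention = classifyDelivery({
  event: "issue_comment",
  action: "created",
  commentBody: "@review-bot please check the mapping function",
});
```

Separating the three triggers this way keeps incremental reviews limited to the new commits rather than re-reviewing the whole PR on every push.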

We chose the Pro plan, which cost $12 per month at the time of the investigation. It allows an unlimited number of pull request reviews and also enables line-by-line code review and chat with the CodeRabbit bot.

Setting up the test playground for the investigation

We decided to use an e-shop pet project consisting of two repositories for the test environment.

- The front-end is a class-based single-page application written in vanilla JavaScript, which doesn't rely on any frameworks. Instead, it uses custom solutions for routing and state management. Styling is handled with SCSS, providing more flexibility and features than regular CSS. For testing, we use Jest for unit tests and Cypress for end-to-end testing. Webpack is used to bundle the project. The application includes a variety of features such as an authentication system, a product catalog, a shopping cart, a checkout process, an order list, and product creation tools. Every page and component is thoroughly tested with unit tests to ensure they work as expected. This custom approach allows for a highly tailored and efficient front-end experience.

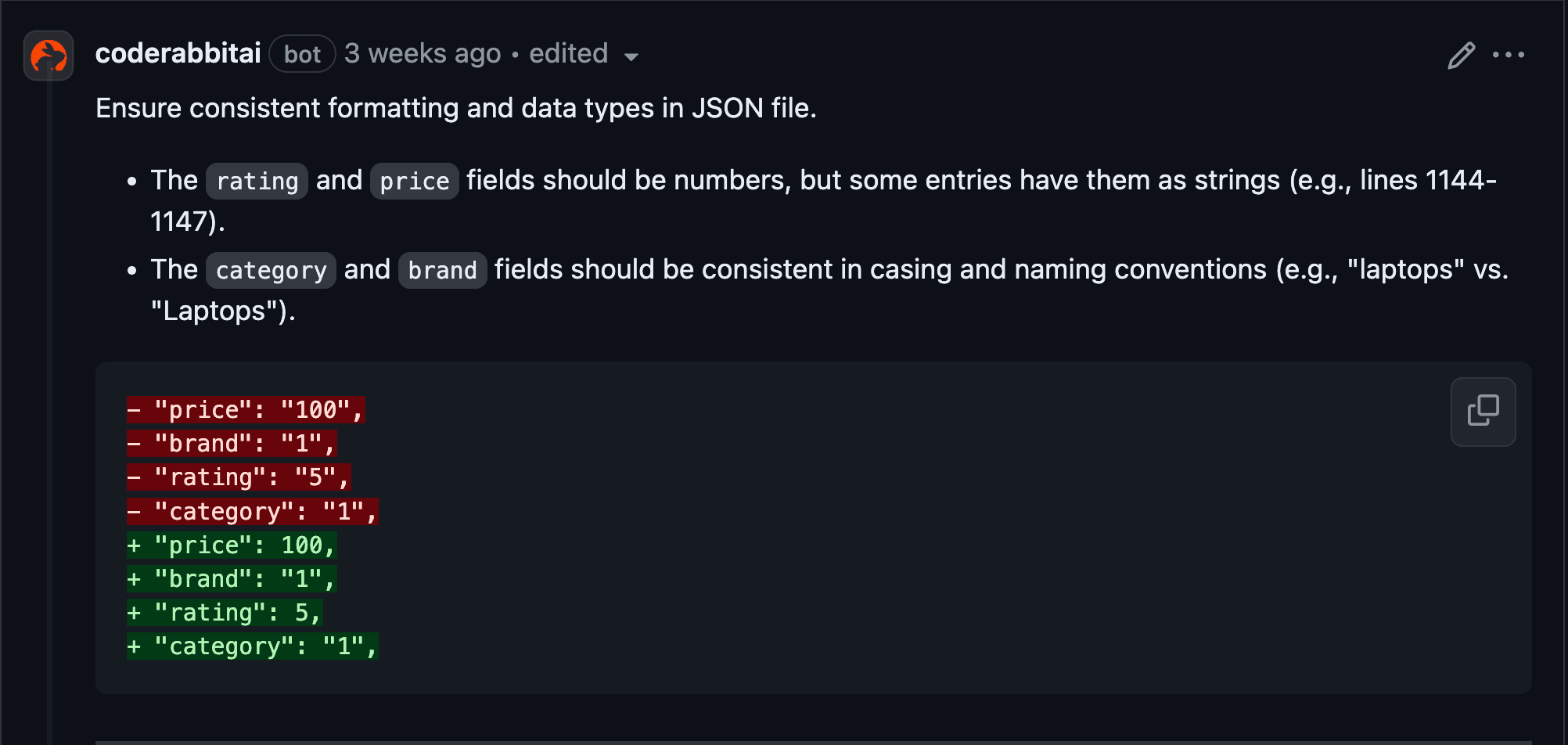

- The back-end is built using a microservices architecture organised within a single monorepo. It consists of three microservices, an API gateway, and a shared npm module that holds common logic to reduce code duplication across microservices. The technology stack includes Express for the server framework, TypeScript for typing and modern JavaScript features, Stripe for payment processing, and Jest as a testing framework. Instead of a traditional database, we use a JSON file to simulate database operations. To manage interactions with this "database," we've developed a custom mini ORM (Object-Relational Mapping) tool. All the code is thoroughly tested with comprehensive unit and integration tests to ensure reliability and functionality.

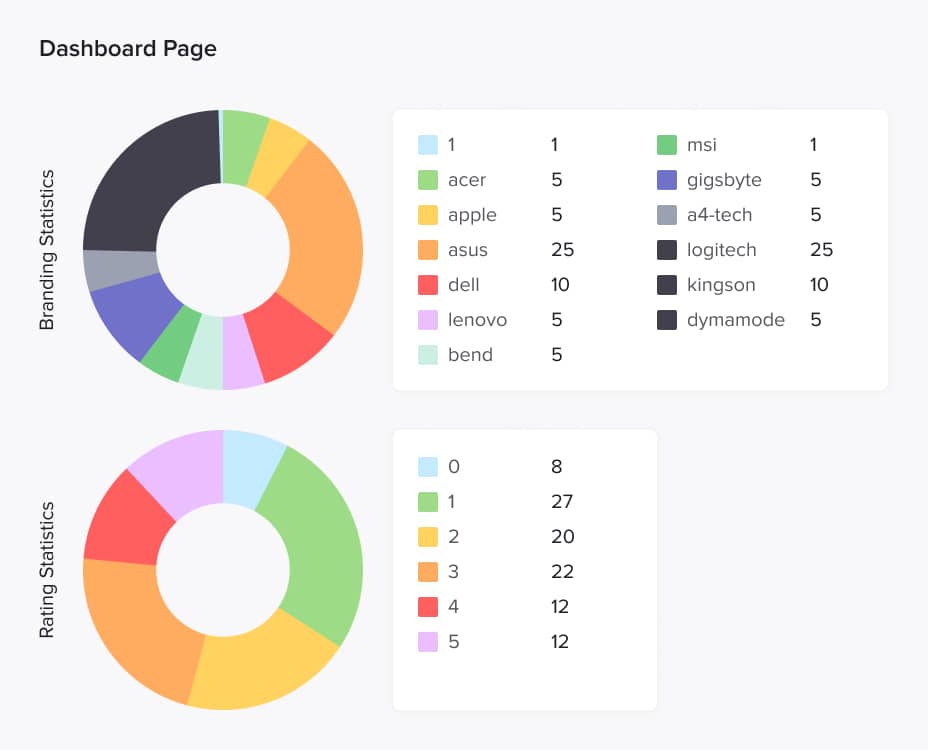

To test the code reviews, we evaluated them on the front-end and back-end repositories to see how the AI reviewer performs on different types of code and languages. On the front-end, we developed a new page, the dashboard, consisting of several charts that display various product statistics fetched from the back-end. We created the charts manually using JavaScript, HTML and SCSS, without any libraries, to see how the AI would respond to custom implementations. On the back-end, we developed a new microservice called "dashboard" with several endpoints that validate the information retrieved from the database and remap data into different formats.

The branding statistics pie chart illustrates the proportion of various brands in the product catalogue. Each segment represents a specific brand, highlighting its presence in the entire range of products. The rating statistics pie chart showcases the distribution of product ratings from 0 to 5 stars given by users. Each segment represents a specific rating category, illustrating customer satisfaction and feedback.

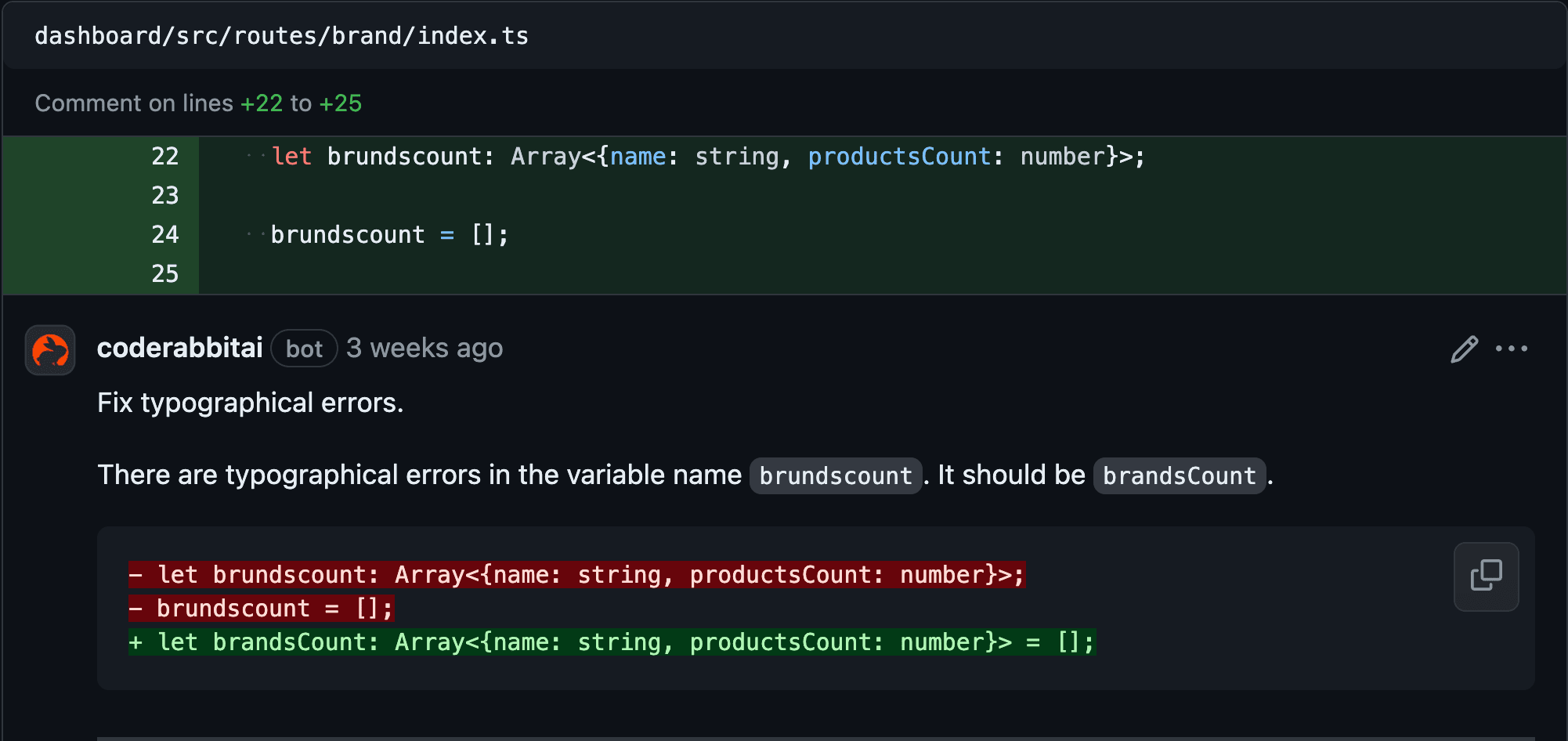

To thoroughly test the AI reviewer, we intentionally wrote poor-quality code with the following issues:

- Bad mapping functions

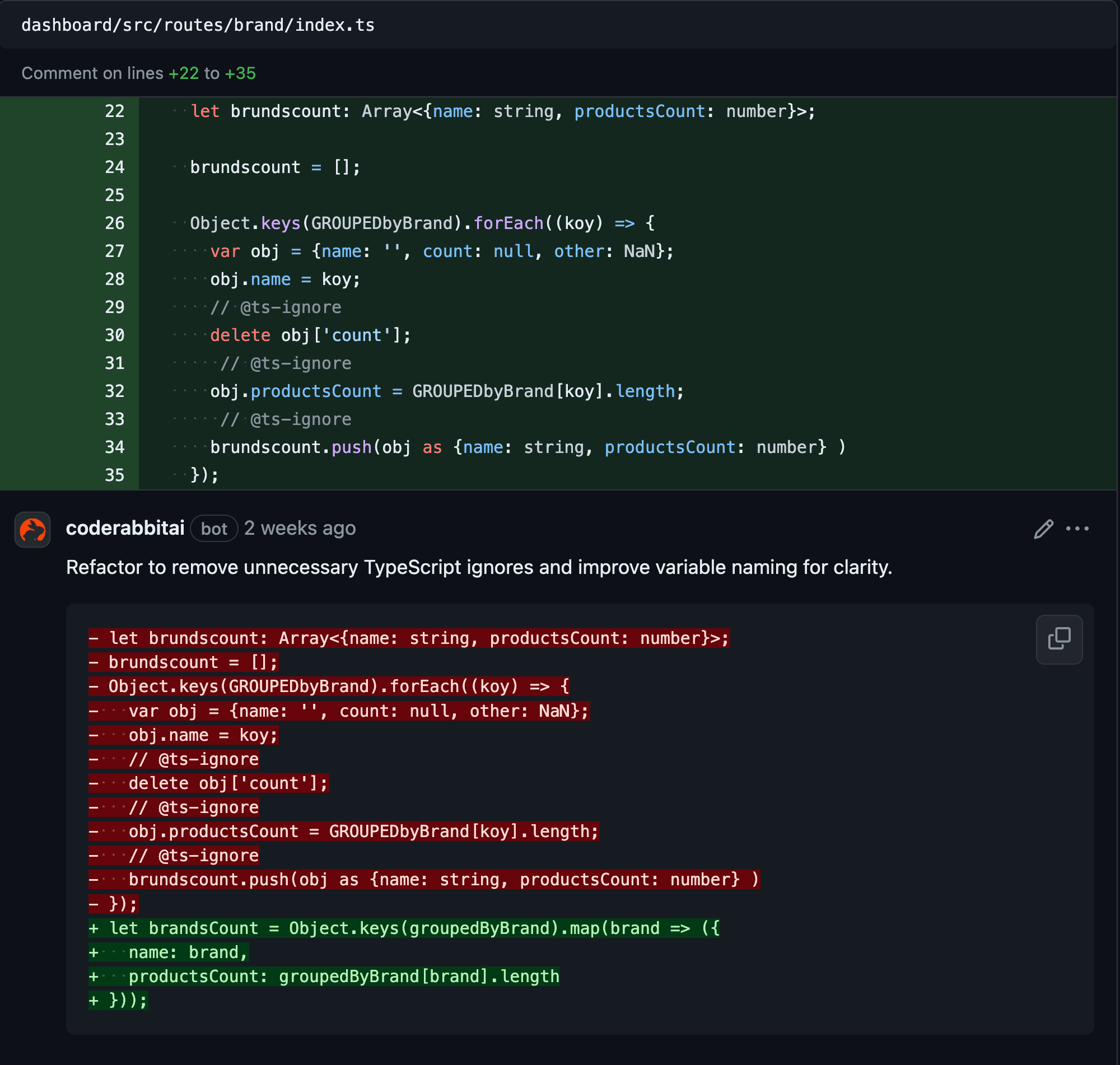

- Incorrect typing and use of @ts-ignore and any

- Code duplications and file duplications

- Typos everywhere, even in file names

- Poor formatting

- Missing route registrations in the application

- Inadequate and missing tests

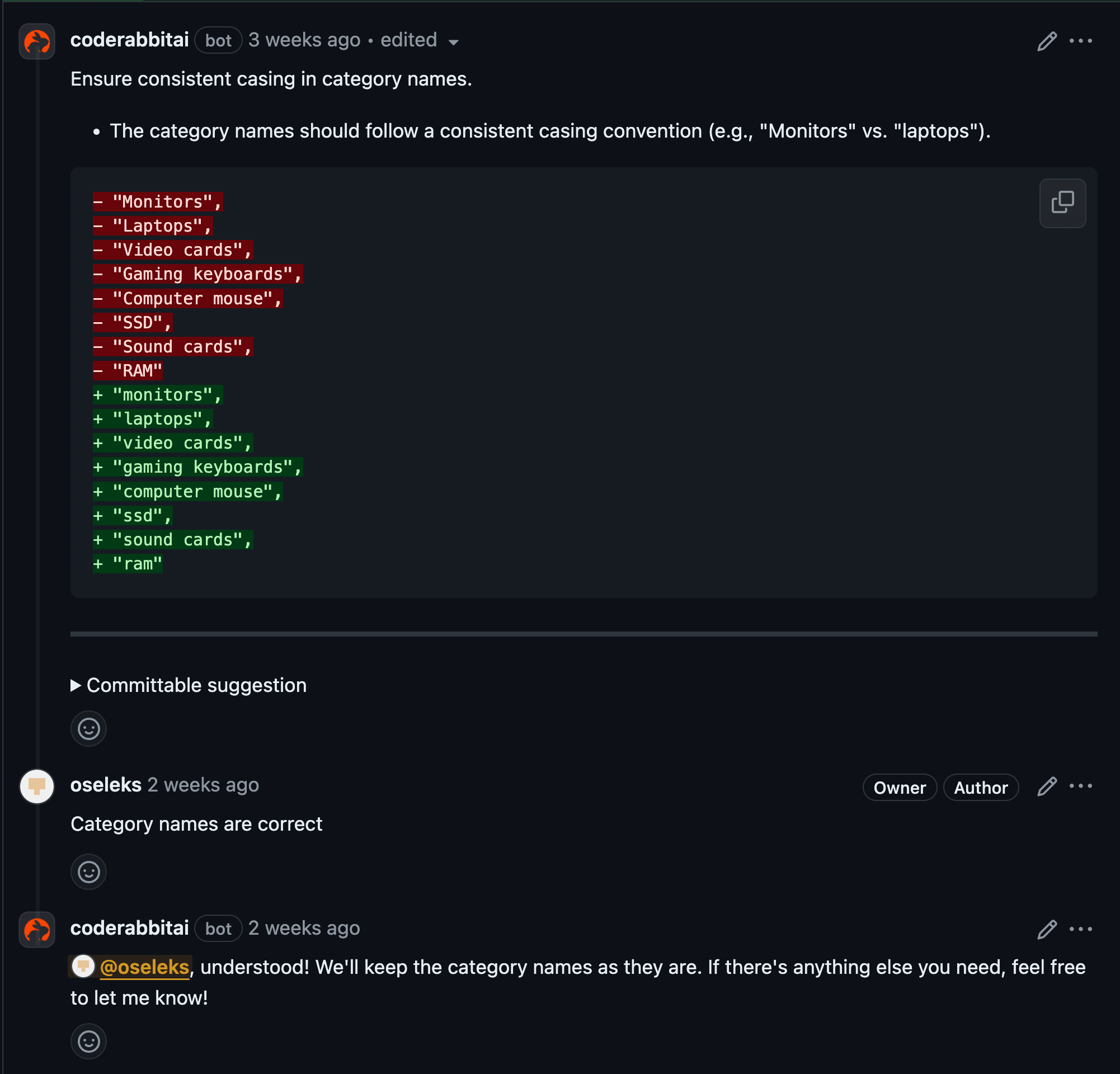
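
To make two of the listed issues concrete, here is a hypothetical TypeScript sketch of a poorly typed mapping function next to a typed alternative. It is not the code from the screenshots: the `Product` and `BrandShare` types and both function names are invented for illustration.

```typescript
interface Product {
  id: number;
  brand: string;
  rating: number;
}

interface BrandShare {
  brand: string;
  count: number;
}

// Problematic style: `any` and @ts-ignore silence the compiler,
// so the misspelt property only shows up as wrong data at runtime.
function countBrandsUnsafe(products: Product[]): any {
  const counts: any = {};
  for (const p of products) {
    // @ts-ignore hides the typo below instead of fixing it
    const key = p.brnad;
    counts[key] = (counts[key] || 0) + 1;
  }
  return counts;
}

// Typed alternative: the same typo would be rejected at compile time.
function countBrands(products: Product[]): BrandShare[] {
  const counts: { [brand: string]: number } = {};
  for (const p of products) {
    counts[p.brand] = (counts[p.brand] || 0) + 1;
  }
  return Object.keys(counts).map((brand) => ({ brand: brand, count: counts[brand] }));
}

const products: Product[] = [
  { id: 1, brand: "Acme", rating: 4 },
  { id: 2, brand: "Acme", rating: 5 },
  { id: 3, brand: "Globex", rating: 3 },
];

const unsafeResult = countBrandsUnsafe(products); // every product lands under the key "undefined"
const typedResult = countBrands(products); // one entry per brand
```

The unsafe version compiles and runs without complaint, which is exactly why this kind of issue is a useful test for a reviewer, human or AI.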

Once these preparations were completed, we created several PRs for both the front-end and back-end branches for the AI tool to review. This led to numerous discussions in the threads regarding the comments left by the tool.

Evaluating the AI code review effectiveness

Pull request summaries and file walkthroughs

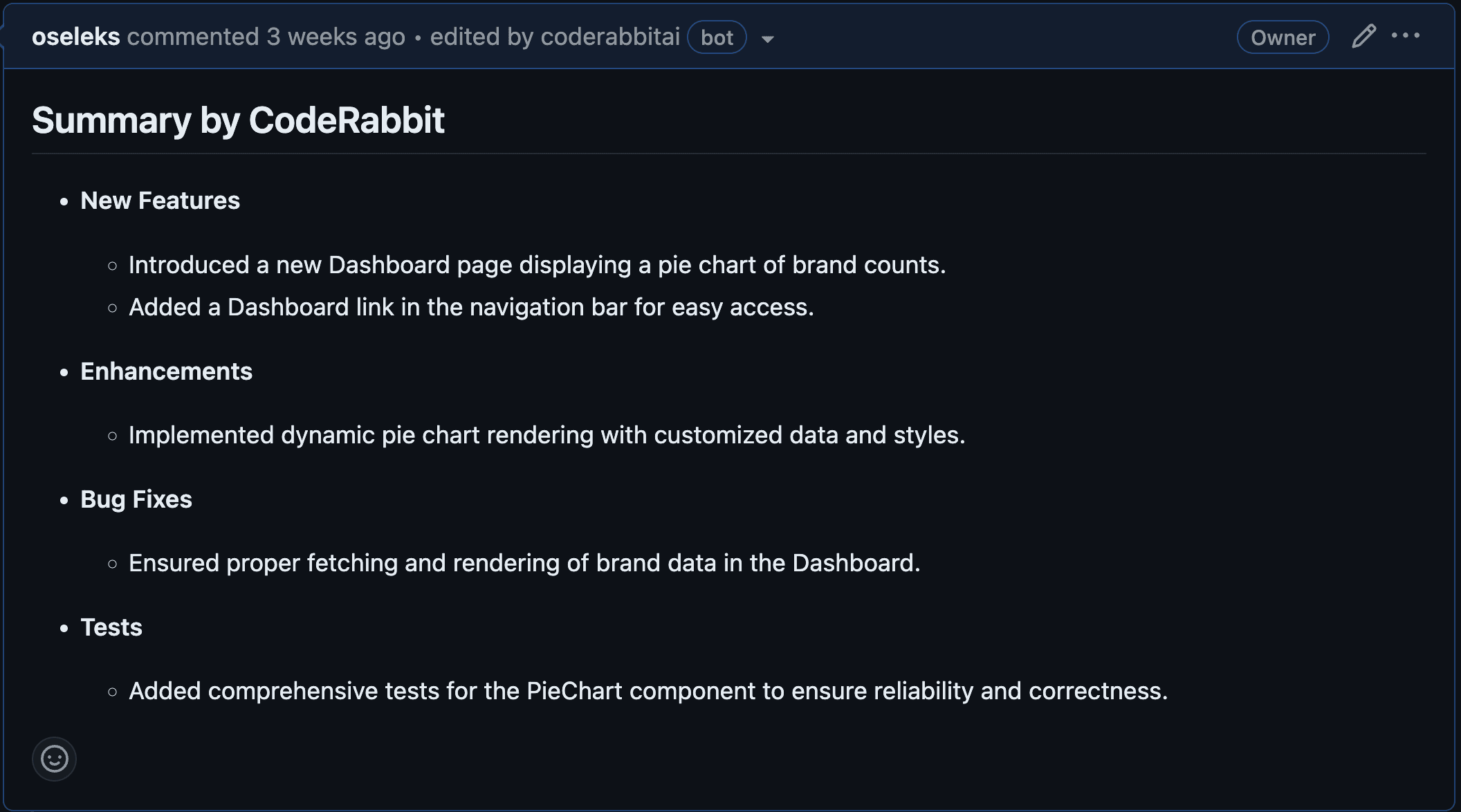

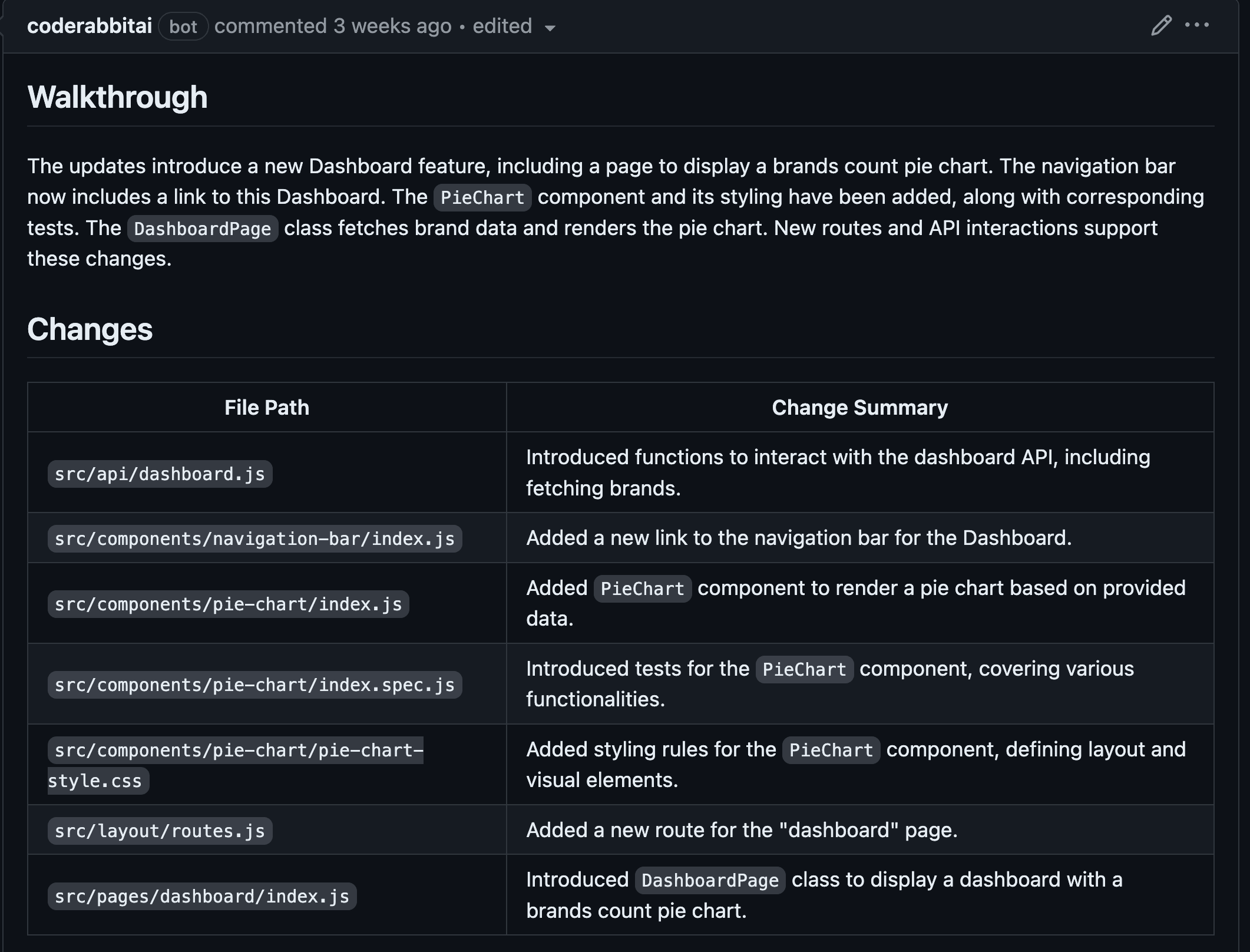

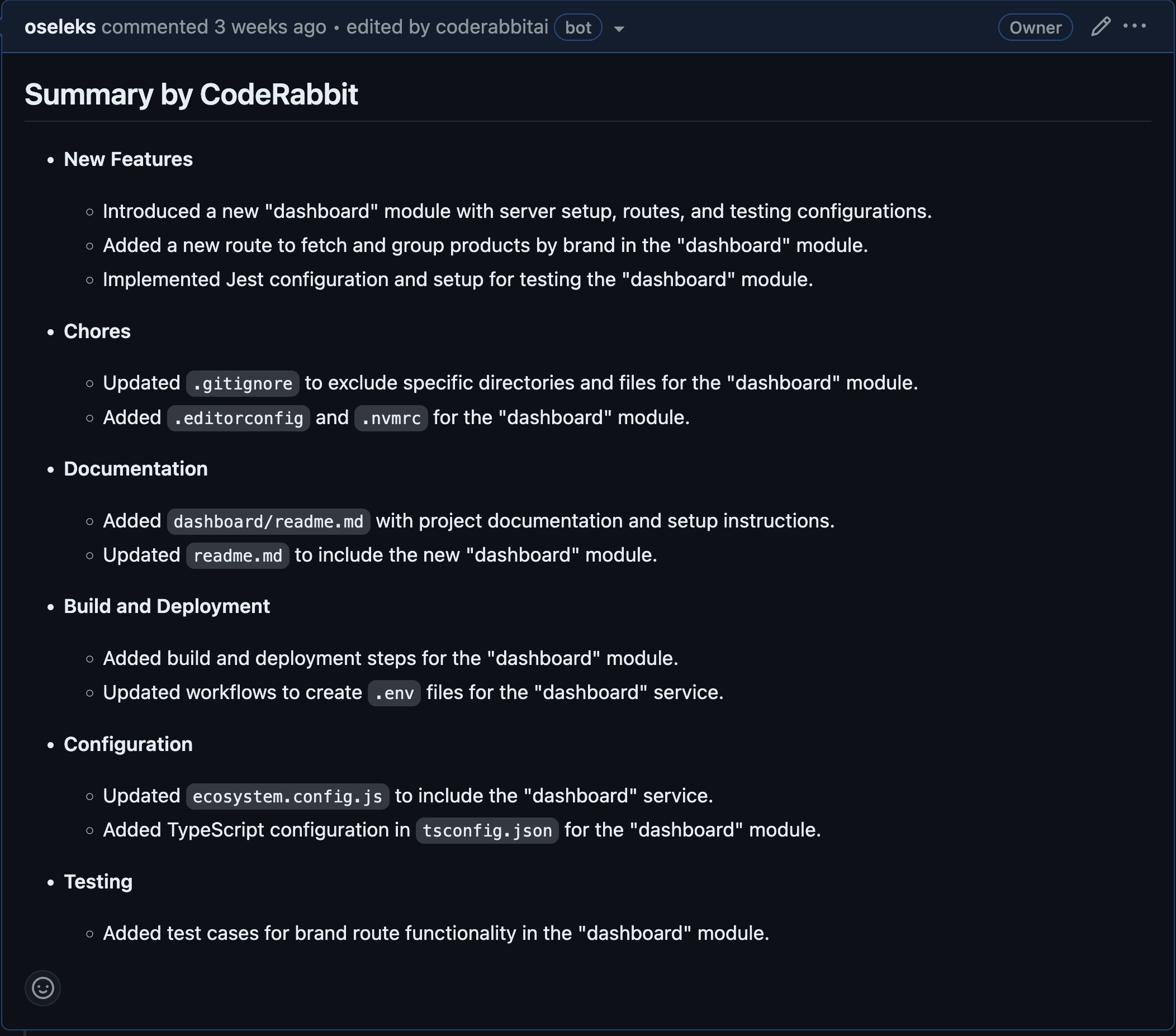

We evaluated the performance of the AI-powered code review tool by examining how it generates pull request summaries and file walkthroughs. As mentioned, establishing context is essential before submitting code for AI analysis. We were pleasantly surprised by how well CodeRabbit handled summary creation and changes walkthrough generation. In 9 out of 10 cases, the tool provided accurate summaries and walkthroughs that effectively captured the changes in each file and the pull request as a whole. This functionality alone is highly valuable.

In a project involving multiple development teams, it is crucial to understand whether the PRs affect specific areas of responsibility. Detailed summaries and file-specific walkthroughs would be immensely beneficial in this context. While the PR owner can manually create a summary, it is time-consuming, and having an assistant to do this, allowing the developer to review and edit as needed, is highly desirable. However, the question remains: can developers fully rely on such an assistant?

Context limitations and business logic

It is important to note that AI-powered code reviewers only analyse the code added or edited in the PR under review. This means the AI's understanding is limited and might miss important aspects. This limitation was evident in our case. When creating a new microservice, a significant amount of code was copied from an existing one, resulting in considerable duplicate code that the AI reviewer failed to identify.

The size of the change also plays a crucial role. Because only a limited amount of information can be passed to the AI model, the larger the PR, the less useful context reaches the model for each part of it. It is hard to pinpoint the exact number of changed lines beyond which the context becomes inadequate for understanding the PR, but past a certain point it is no longer usable. The line count alone is also a poor measure, as a comment line and a module import line carry very different amounts of useful information.
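
The effect of a fixed context budget can be illustrated with a small TypeScript sketch. Everything in it is an assumption made for illustration: the four-characters-per-token estimate is only a rough rule of thumb, and `DiffChunk`, `estimateTokens`, and `fitToBudget` are hypothetical names rather than part of any tool we tested.

```typescript
interface DiffChunk {
  file: string;
  text: string;
}

// Rough heuristic (an assumption): about four characters per token.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Greedily include chunks in order until the budget is exhausted;
// chunks that do not fit never reach the model.
function fitToBudget(chunks: DiffChunk[], budget: number) {
  const included: DiffChunk[] = [];
  const dropped: DiffChunk[] = [];
  let used = 0;
  for (const c of chunks) {
    const cost = estimateTokens(c.text);
    if (used + cost <= budget) {
      included.push(c);
      used += cost;
    } else {
      dropped.push(c);
    }
  }
  return { included: included, dropped: dropped, used: used };
}

// Helper that builds placeholder text of a given length.
function filler(length: number): string {
  return new Array(length + 1).join("x");
}

const budgetResult = fitToBudget(
  [
    { file: "dashboard.controller.ts", text: filler(400) }, // ~100 tokens
    { file: "orders.service.ts", text: filler(800) }, // ~200 tokens
    { file: "routes.ts", text: filler(200) }, // ~50 tokens
  ],
  160
);
```

With a budget of 160 estimated tokens, the second file is left out entirely, which mirrors how a large PR can leave the reviewer with an incomplete picture of the change.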

Best practices for AI-assisted code review:

- To improve the effectiveness of code review using the AI tool, it's important to logically group changes into commits, give commits appropriate names, and include documentation in the code, especially in areas of business logic that require clarification. Pull requests should be kept as small as possible to enable better AI-assisted review.

- It would also be beneficial to set up the AI code review tool from the beginning, as most of these tools can learn over time, and CodeRabbit is no exception. At least, CodeRabbit developers claim that their tool possesses this capability, although we haven't independently tested it.

Unfortunately, the AI reviewer doesn't understand the connection between front-end and back-end repositories, and there appear to be no settings available to configure this aspect. For instance, it would be beneficial if the AI reviewer could verify whether a back-end endpoint returns what the front-end expects according to validation and type definitions.
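
Until a reviewer can check this across repositories, one way to guard the contract is a runtime check on the front-end side. The TypeScript sketch below is a minimal example under stated assumptions: the `BrandStat` shape and the guard functions are hypothetical and are not taken from the project.

```typescript
// Shape the front-end expects from the statistics endpoint (hypothetical).
interface BrandStat {
  brand: string;
  count: number;
}

// Type guard for a single item: checks the fields the charts rely on.
function isBrandStat(value: unknown): value is BrandStat {
  if (typeof value !== "object" || value === null) return false;
  const v = value as { [key: string]: unknown };
  return typeof v["brand"] === "string" && typeof v["count"] === "number";
}

// Type guard for the whole response body.
function isBrandStatList(value: unknown): value is BrandStat[] {
  return Array.isArray(value) && value.every(isBrandStat);
}

// Simulated response bodies: the second one renames "count" to "total".
const matching: unknown = JSON.parse('[{"brand":"Acme","count":2}]');
const mismatched: unknown = JSON.parse('[{"brand":"Acme","total":2}]');

const matchingOk = isBrandStatList(matching);
const mismatchedOk = isBrandStatList(mismatched);
```

A check like this turns a silent mismatch between the two repositories into an explicit failure that a test or an error boundary can report.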

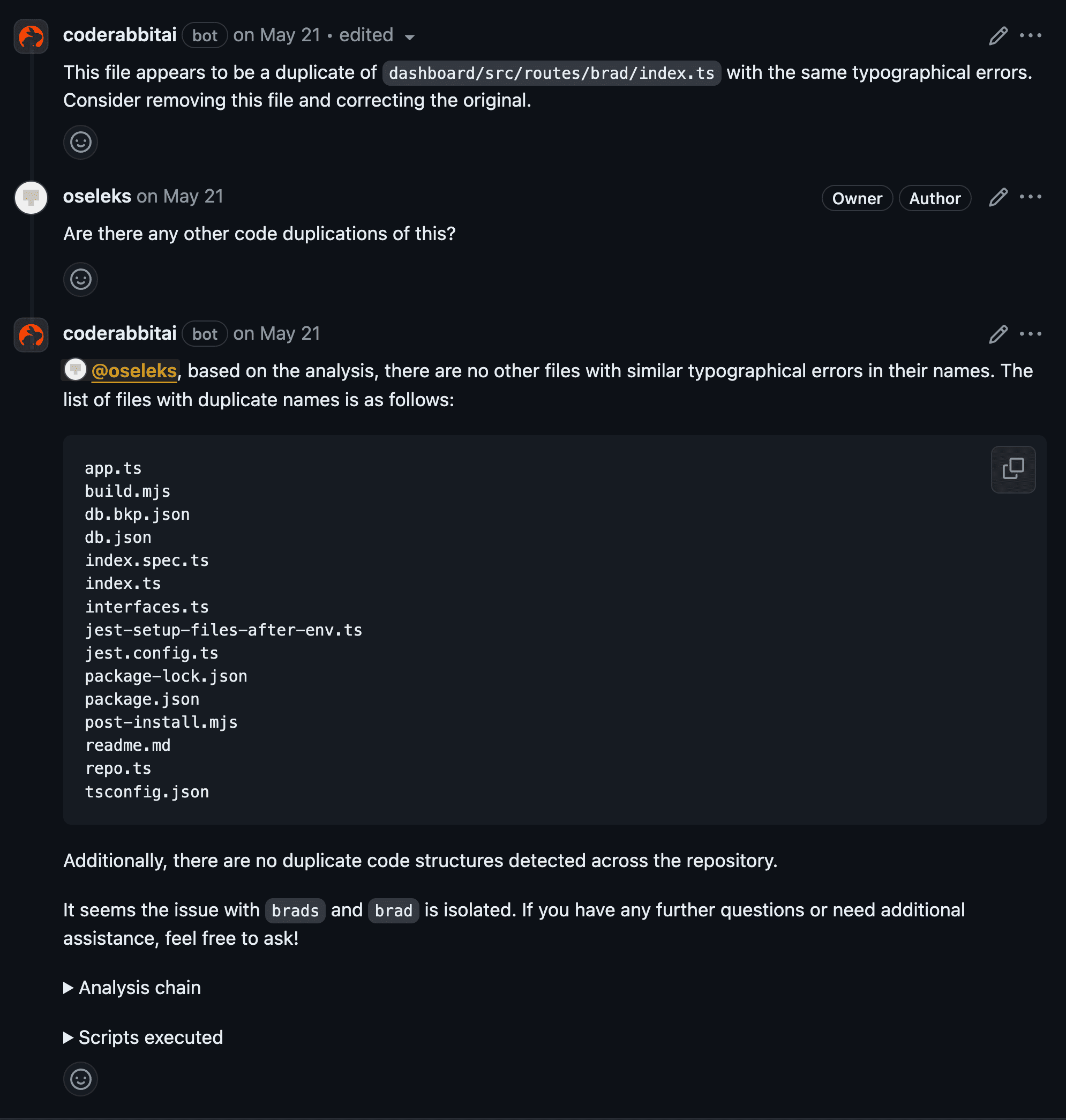

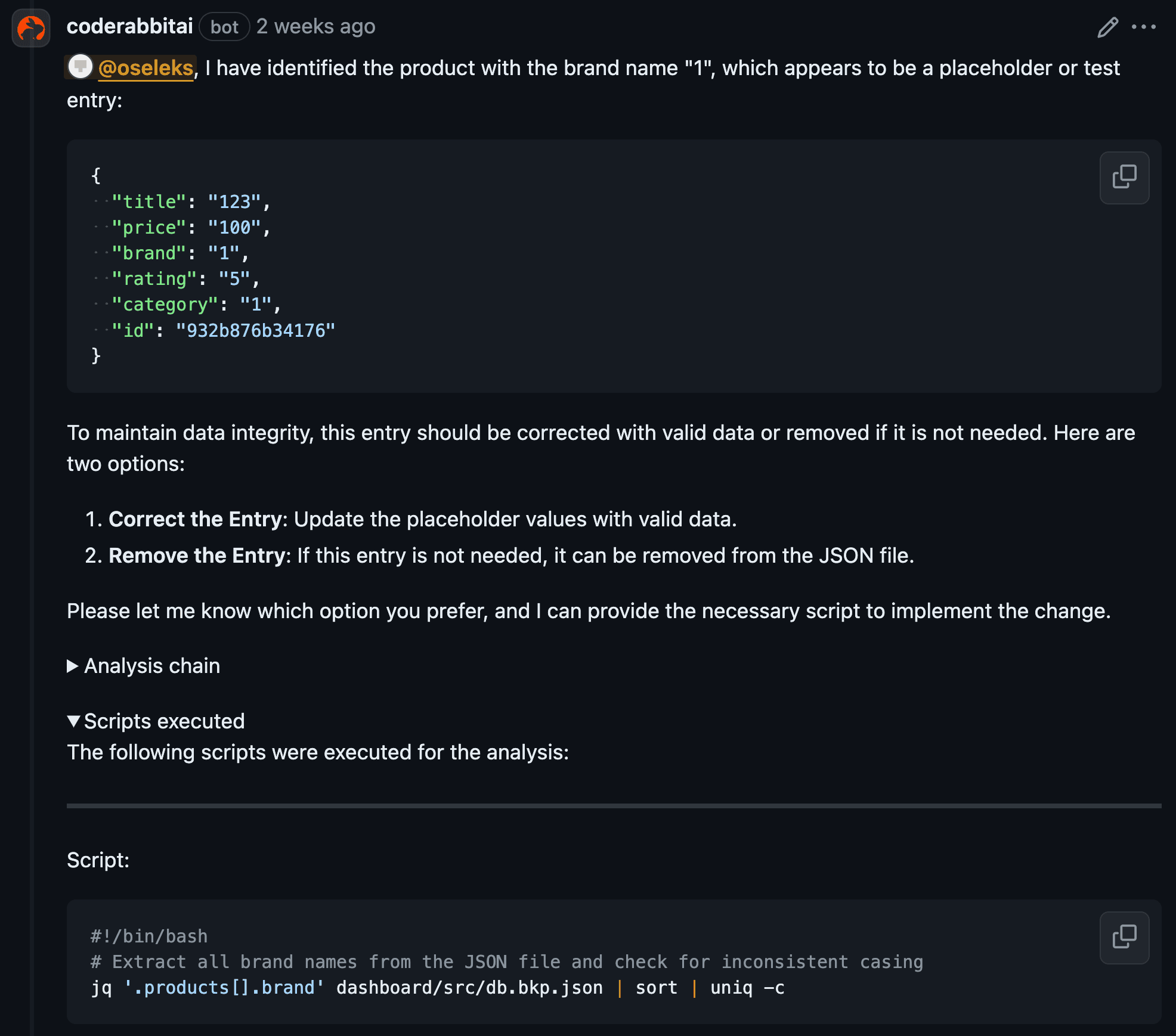

Another example of poor contextual understanding is the AI's inconsistent detection of identical code duplicates within the PR changes. The AI reviewer independently identified and flagged identical code in different files in only 1 out of 10 cases (see the screenshot). In the other cases, it either failed to detect the duplication or reviewed each copy separately, producing nearly identical feedback each time. We also tried giving it direct instructions to search for code duplicates, but the results varied and success could not be guaranteed. This inconsistency highlights the need for human intervention to make sure duplicated code is detected reliably.
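
For comparison, exact duplicates of this kind can be found deterministically without AI. The following TypeScript sketch groups code units by their whitespace-normalised text; it is a naive illustration, the `SourceUnit` shape and the file paths are hypothetical, and it would not catch copies with renamed variables.

```typescript
interface SourceUnit {
  file: string;
  name: string;
  body: string;
}

// Collapse all whitespace so formatting differences do not hide copies.
function normalise(body: string): string {
  return body.replace(/\s+/g, " ").trim();
}

// Group units whose normalised bodies are identical.
function findDuplicates(units: SourceUnit[]): SourceUnit[][] {
  // Prototype-free object, so keys never clash with built-ins like "toString".
  const groups: { [key: string]: SourceUnit[] } = Object.create(null);
  for (const u of units) {
    const key = normalise(u.body);
    if (!groups[key]) groups[key] = [];
    groups[key].push(u);
  }
  return Object.keys(groups)
    .map((k) => groups[k])
    .filter((g) => g.length > 1);
}

const duplicates = findDuplicates([
  {
    file: "services/catalog/validate.ts",
    name: "validateId",
    body: "if (!id) {\n  throw new Error('id required');\n}",
  },
  {
    file: "services/dashboard/validate.ts",
    name: "validateId",
    body: "if (!id) { throw new Error('id required'); }",
  },
  {
    file: "services/dashboard/remap.ts",
    name: "toChartData",
    body: "return rows.map(toPoint);",
  },
]);
```

Running a check like this in the pipeline alongside the AI reviewer covers the copy-and-paste case that the AI flagged only occasionally.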

We noticed that the AI performed well within a single file but struggled with context across different yet related files. For example, we intentionally left some new back-end endpoints unregistered, so they were not bound to any URL and could not be reached, and the AI reviewer did not catch this.

AI reviewers have a major weakness in their lack of contextual awareness and nuanced understanding. Unlike human reviewers, AI may miss the context in which a piece of code operates, leading to technically correct but contextually inappropriate suggestions. The larger the number of files and code in a PR, the worse the context for the AI.

Code review efficiency within a file or function

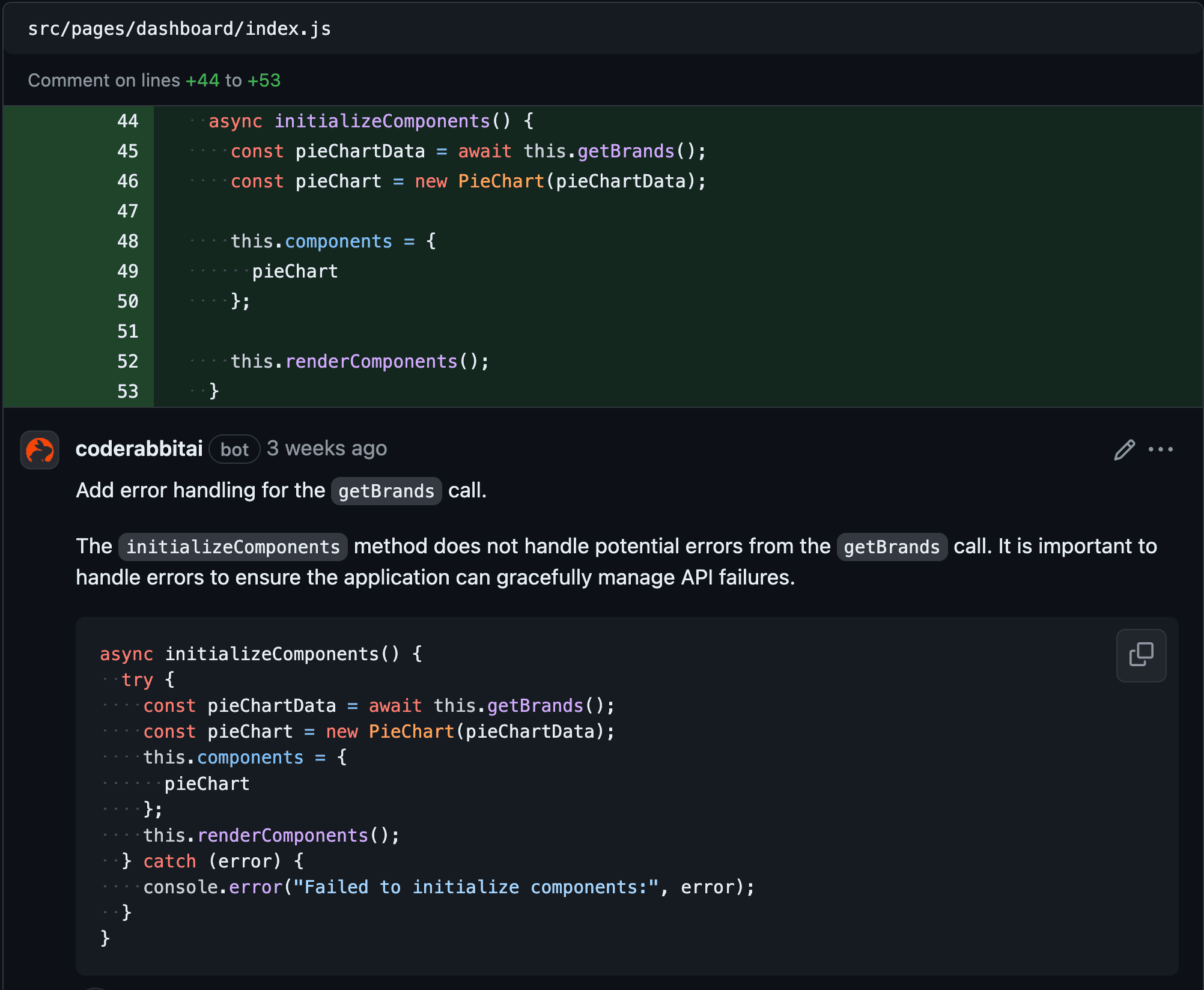

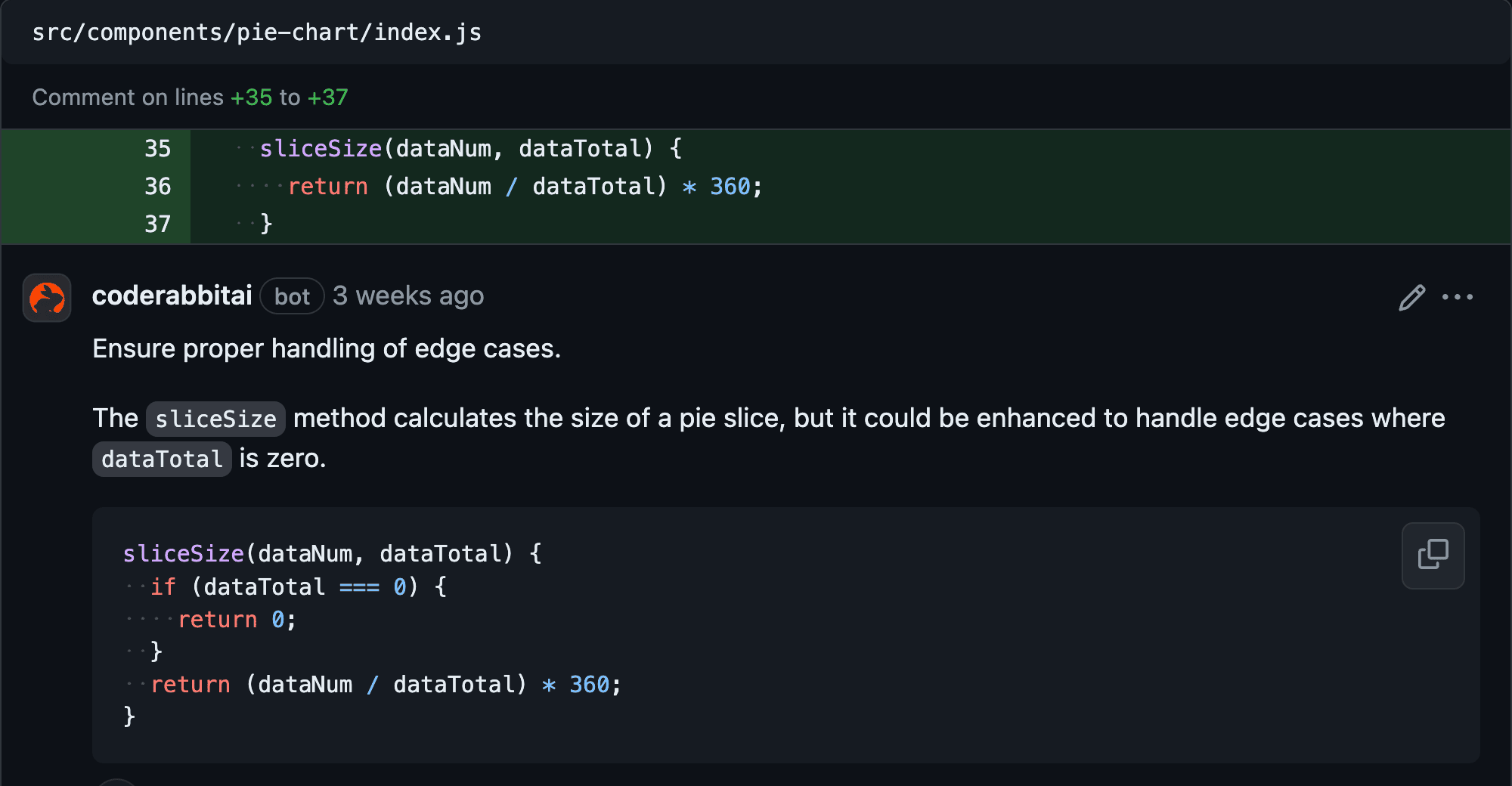

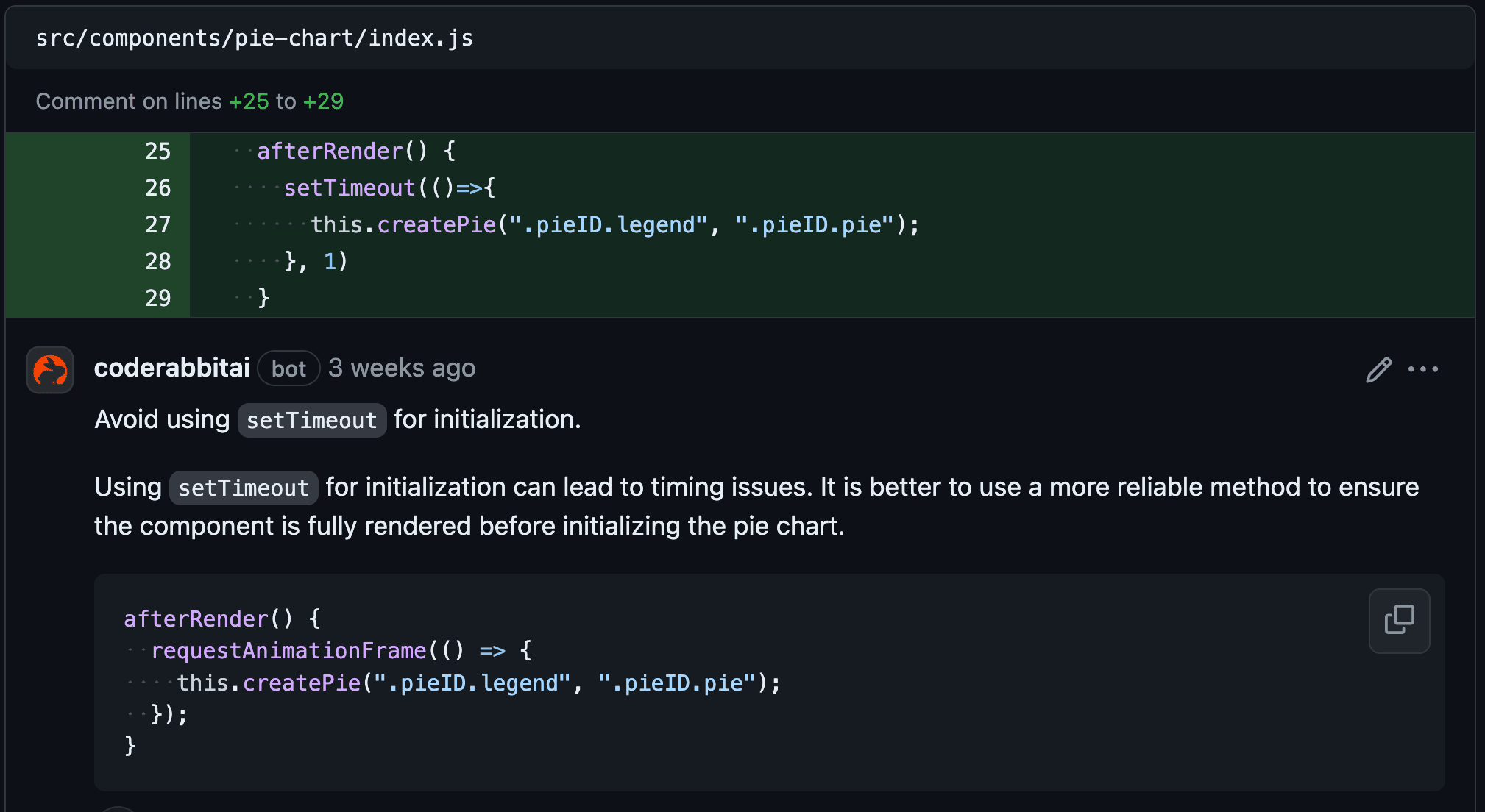

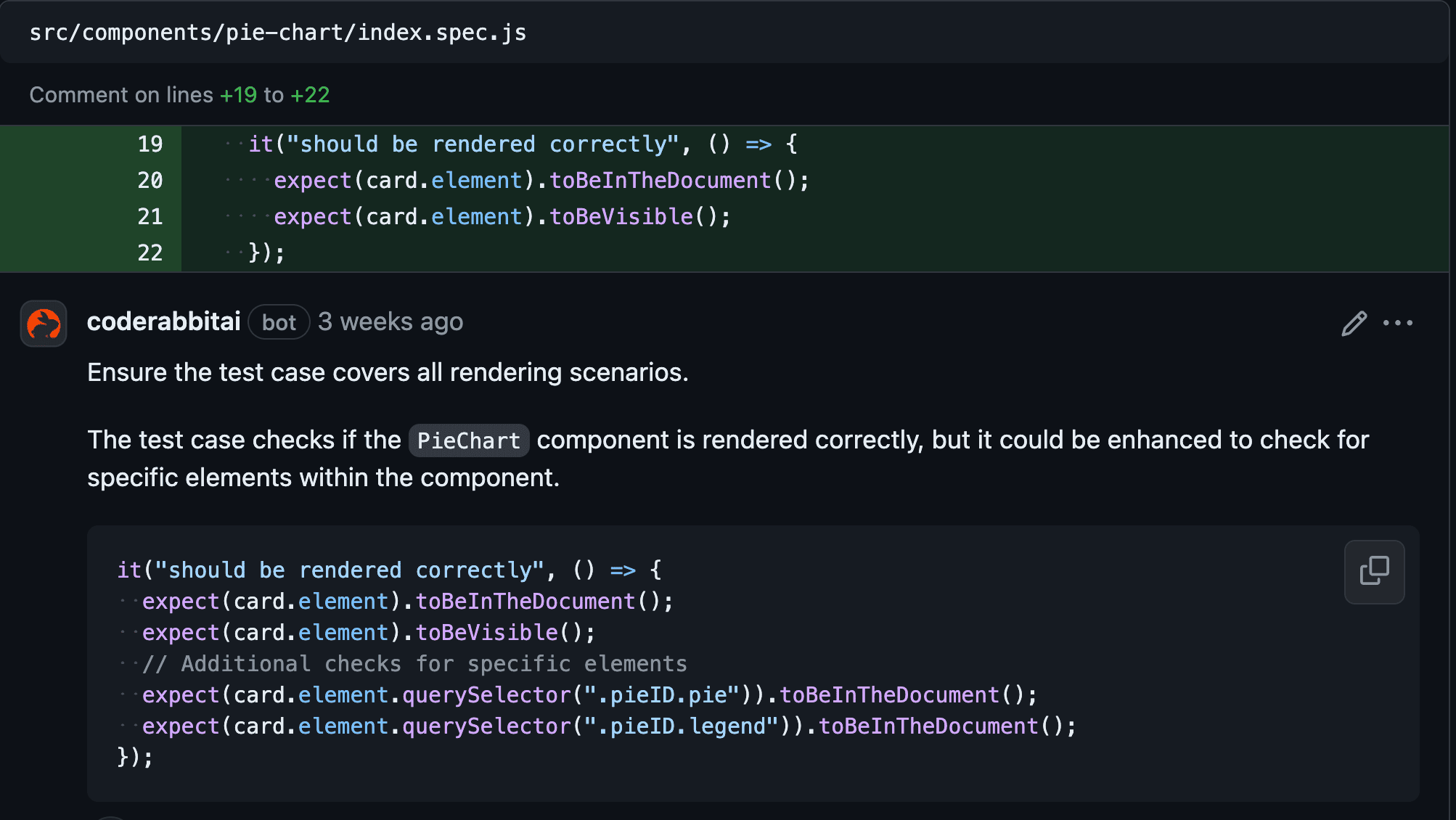

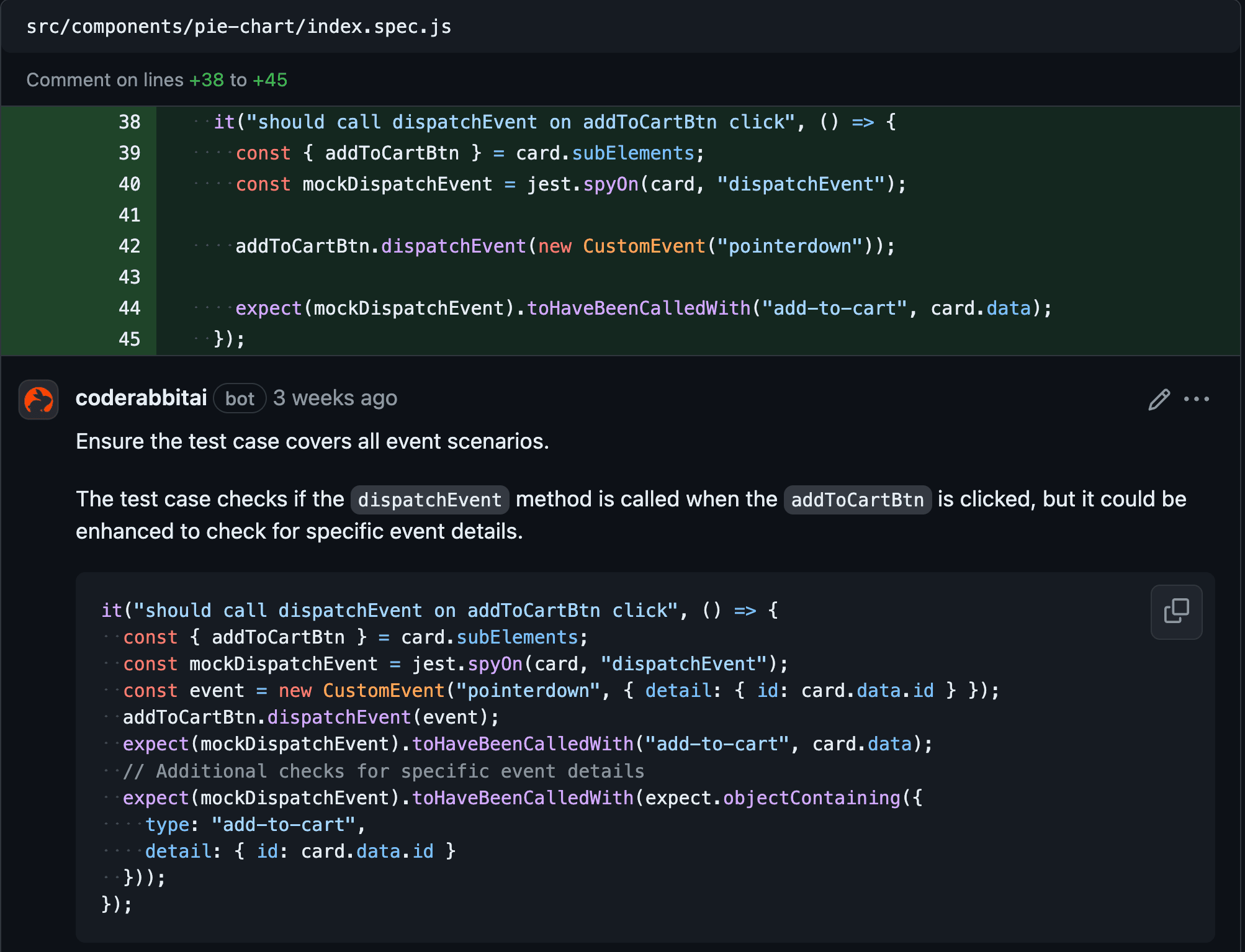

On the other hand, regarding small code snippets within a single file, CodeRabbit offered valid suggestions, such as adding validation, error handling, or more readable code variants. However, these suggestions often resembled an advanced version of ESLint+Prettier, capable of comprehensively reformatting and refactoring a function with comments.

CodeRabbit's ability to show the scripts it executed for different analyses is admirable. A review of several cases indicates that the effectiveness of the AI assistant is significantly influenced by its configuration. Most AI tools for code review allow writing additional instructions for reviewing specific file formats, which is useful for focusing on code duplicates or reviewing code according to specific rules.

Reviewing unit tests

We were quite surprised by how the AI reviewed the tests. The suggestions for missing test cases and how to expand existing tests were quite good on the front-end. However, the situation was quite the opposite on the back-end. The test file on the back-end was 340 lines (11 test cases), and it seemed that the AI struggled to review it correctly due to context limitations. We received some localised comments on certain test cases, but overall, the AI did not recognise code duplicates that should be extracted into beforeEach and beforeAll methods.

Conclusions

AI enhances productivity and code quality by automating routine tasks, identifying common errors, and enforcing coding standards. However, AI code reviewers still struggle with understanding business logic and handling complex codebases due to context limitations.

For future research, one can integrate AI tools from the start to observe the review quality with small, incremental commits. Configuring the AI agent to ignore specific files and providing detailed instructions can improve overall results. Pairing AI code reviewers with tools that catch issues during development is also beneficial, as some issues can be addressed in the early stage.

The future of AI in code review is promising, with advances in machine learning expected to improve AI's understanding of code context and semantics. Innovations might include AI tools suggesting optimisations and predicting scalability issues. As AI evolves, it will handle more complex tasks and integrate further into development. However, a balanced approach is crucial: AI boosts productivity, but human reviewers provide essential domain knowledge and critical thinking. Continuous learning and collaboration will ensure the effective use of AI tools, optimising the code review process for superior outcomes.

FAQs

While AI-powered code review tools significantly enhance efficiency by automating routine tasks and catching common errors, they cannot fully replace human reviewers. A hybrid approach, where AI handles routine checks and humans focus on complex, context-dependent issues, is currently the most effective way to ensure high code quality.

Most AI-powered code review tools can seamlessly integrate with popular development environments and version control systems like GitHub, GitLab, and Bitbucket. They often offer plugins for IDEs or integrate into continuous integration pipelines, providing real-time feedback during the coding process.

AI code reviewers have several limitations, including a limited understanding of business logic, challenges with handling complex or large codebases, and potential difficulties in recognising issues that span multiple files or repositories. Additionally, AI may generate false positives or negatives, requiring human oversight to validate its findings.
