Frankly speaking, greeting cards are boring. You can hardly impress anybody by simply sending one out, and during the holiday season no one is really in the mood to work: people want to be entertained, relax and have fun. So we came up with the idea of applying our VR app development expertise to build an entertaining holiday VR game. Within eight weeks, our R&D team developed a 3D mobile game for Google Cardboard, which we called Back2Pack. Instead of greeting cards, we sent our customers an email with a link to b2p.eleks.com, offering them an entertaining virtual reality experience.

But creating a game was not our only goal. We also wanted to try out multi-device interaction with virtual reality on a real project.

In this article, we share how we developed the game and which challenges we had to overcome along the way. You will also find practical tips and tricks for creating an award-winning interactive virtual experience.

Gesture recognition

By design, our game required two mobile phones: one served as a game controller and the other as the VR screen inside a Cardboard viewer. We decided to connect the two phones using WebSockets. Our initial idea was to use the controller as a sword: the player would ride a deer through the forest, cutting off trolls' heads to save Christmas.

(Holiday VR game: first prototype)

Unfortunately, we ran into problems with tracking gestures through the controller right from the start. Phone accelerometers are often of mediocre quality: the data we received varied from device to device and was very noisy. So we had to stick to a single gesture that was easy to track: a hand swing.

This made us change the game concept and rename the game Back2Pack. Wondering where the name comes from? In the new version, the player had to throw elves at runaway toys so that the elves could quickly pack them and send them to kids as Christmas presents.

(Holiday VR game: second prototype)

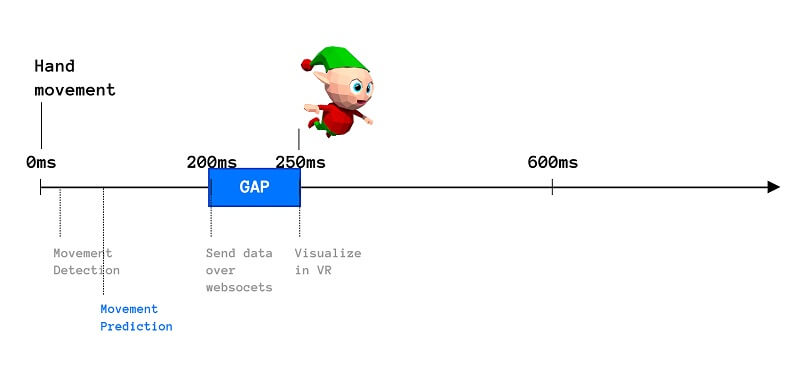

But the result was still far from what we expected. There was a noticeable delay between swinging the controller and the elf being thrown in the game, because we tracked the full gesture from beginning to end and only then sent a message about the event via WebSockets.

(Holiday VR game: latency)

Often, though, the simplest solutions are the best. Instead of tracking the full gesture, we simply tracked the acceleration of the phone swing. As soon as the acceleration on any of the three axes exceeded a threshold (we picked the thresholds by experimenting with different phones), the system recognized that the user had swung an arm to throw an elf and sent the event message right away, before the gesture was complete. That is how we achieved smooth gameplay and got rid of the laggy feeling between swinging the controller and throwing an elf in the game.
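Below is a minimal TypeScript sketch of this early-trigger approach. The class name `SwingDetector`, the threshold and cooldown values, and the `{ type: "throw" }` message shape are hypothetical. The article only says that per-axis thresholds were picked experimentally and that the event was sent over WebSockets. The cooldown is our own assumption, added so that a single swing does not fire several throws.

```typescript
interface Accel {
  x: number;
  y: number;
  z: number;
}

interface ThrowMessage {
  type: "throw";
  timestamp: number;
}

class SwingDetector {
  private readonly thresholds: Accel; // hypothetical per-axis limits, tuned per device
  private readonly cooldownMs: number; // hypothetical dead time after each throw
  private lastThrowAt = -Infinity;

  constructor(thresholds: Accel, cooldownMs: number) {
    this.thresholds = thresholds;
    this.cooldownMs = cooldownMs;
  }

  // Feed every accelerometer sample here (for example, from a devicemotion handler).
  // Returns a message the moment any axis crosses its threshold, without waiting
  // for the gesture to finish; returns null otherwise.
  onSample(accel: Accel, timestamp: number): ThrowMessage | null {
    if (timestamp - this.lastThrowAt < this.cooldownMs) {
      return null; // still inside the same swing
    }
    const crossed =
      Math.abs(accel.x) > this.thresholds.x ||
      Math.abs(accel.y) > this.thresholds.y ||
      Math.abs(accel.z) > this.thresholds.z;
    if (!crossed) {
      return null;
    }
    this.lastThrowAt = timestamp;
    return { type: "throw", timestamp };
  }
}

// Usage: replay a few samples. On the real controller, each message would be
// serialized with JSON.stringify and sent over the open WebSocket.
const detector = new SwingDetector({ x: 15, y: 15, z: 15 }, 300);
const samples: Array<[Accel, number]> = [
  [{ x: 1, y: 9.8, z: 0.5 }, 0], // phone at rest: no throw
  [{ x: 18, y: 9.8, z: 2 }, 40], // swing starts: throw fires immediately
  [{ x: 25, y: 12, z: 4 }, 80], // same swing, suppressed by the cooldown
];
for (const [accel, t] of samples) {
  const msg = detector.onSample(accel, t);
  if (msg !== null) {
    console.log(JSON.stringify(msg));
  }
}
```

The message is emitted on the first sample that crosses a threshold. As a result, the perceived latency no longer includes the remainder of the gesture.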

WebGL

In WebGL, everything is about performance and memory. You plan to do a lot of cool things, but you hit the GPU limit very soon. So you have to keep optimization in mind at every moment, whether or not you expect a particular part to be “heavy” for the processor. You also have to keep improving everything you can: the less you calculate and render, the higher the frame rate.

Luckily, the WebGL part of the project was fairly trivial: simply create an engaging holiday VR game with an immersive environment and a strong sense of presence, and make it all run at 60 fps in mobile browsers on WebGL-compatible devices (at least the more or less modern ones). No biggie.

(Holiday VR game)

The first step was to create the winter environment and landscape. We wanted it to look realistic, with a parallax effect. But how do you make it detailed and still keep the polygon count low? Yep, by cheating. We made two cylinders of different radii and generated textures for them. The narrower cylinder got a texture with an alpha channel and low mountains. The wider one got a sky gradient and blurred, higher mountains. Next, we added a parallax effect by slightly rotating one of the cylinders along with the camera. The view from above was not that impressive…
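Here is a minimal sketch of the cylinder parallax. It assumes that both cylinders are centered on the camera and that the far (wider) cylinder is the one that follows a fraction of the camera's yaw. The article does not say which cylinder was rotated or by how much. `Rotatable`, `updateParallax` and the 0.1 factor are hypothetical; in three.js, the field would be the mesh's `rotation.y`.

```typescript
interface Rotatable {
  rotationY: number; // radians
}

// Hypothetical: the fraction of the camera's yaw that the far cylinder follows.
const FAR_LAYER_FOLLOW = 0.1;

// Called once per frame. Both backdrop cylinders are centered on the camera, so on
// their own they sweep across the view at exactly the camera's angular speed and show
// no depth. Letting the far cylinder follow part of the camera's turn makes it sweep
// more slowly than the near one, which the eye reads as distance.
function updateParallax(cameraYaw: number, cylinder: Rotatable, follow: number = FAR_LAYER_FOLLOW): void {
  cylinder.rotationY = cameraYaw * follow;
}

// How fast a layer appears to move across the view, per radian of camera yaw.
function apparentSweep(follow: number): number {
  return 1 - follow;
}

const farCylinder: Rotatable = { rotationY: 0 };
updateParallax(Math.PI / 2, farCylinder);
console.log(farCylinder.rotationY.toFixed(4), apparentSweep(0), apparentSweep(FAR_LAYER_FOLLOW));
```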

(Holiday VR game landscape: view from above)

…but it was quite nice from the player’s point of view:

(Holiday VR game landscape: player's view)

Next, we needed to create a relief. We did not have much time to model the levels in a CAD program. Besides, prebuilt levels would look the same every time the game started. B-o-o-o-ring! So we decided to generate them dynamically.

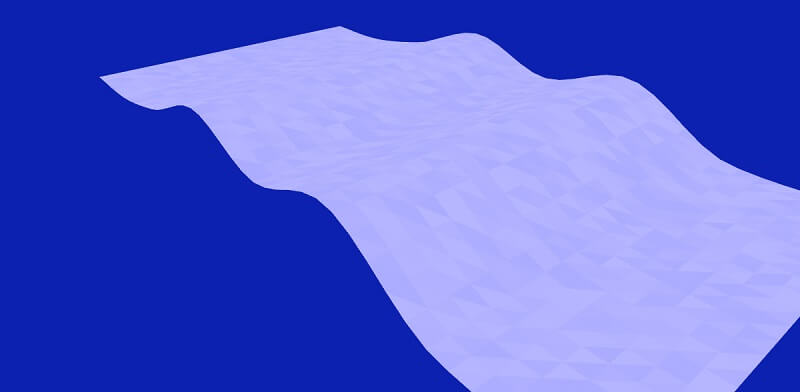

First of all, the changes in landscape height had to be smooth, which sounds like a job for a mathematical function. We used the three.js CatmullRomCurve3 class to generate the user's path and positioned all objects, including the relief, according to this curve's points. We created a big plane geometry, calculated the positions of its triangles' vertices from the curve point coordinates, and assigned colors from a narrow range to those triangles. That is how we got a relief with smooth hills:
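Here is a dependency-free sketch of the same idea. For brevity, it uses the uniform Catmull-Rom formula; three.js `CatmullRomCurve3` defaults to the centripetal variant. The sketch samples the path evenly in the curve parameter rather than by arc length, then derives per-vertex heights of a grid from the path. The relief rule in `reliefHeights` (terrain rising quadratically away from the path) is hypothetical, as are all names and constants.

```typescript
interface Point3 {
  x: number;
  y: number;
  z: number;
}

// Uniform Catmull-Rom interpolation of one coordinate: returns p1 at t = 0 and p2 at t = 1.
function catmullRom1D(p0: number, p1: number, p2: number, p3: number, t: number): number {
  const t2 = t * t;
  const t3 = t2 * t;
  return 0.5 * (
    2 * p1 +
    (-p0 + p2) * t +
    (2 * p0 - 5 * p1 + 4 * p2 - p3) * t2 +
    (-p0 + 3 * p1 - 3 * p2 + p3) * t3
  );
}

// Samples `count` points along the curve through `controls`. The end control points are
// reused as their own neighbours, so the curve starts and ends exactly on them.
function samplePath(controls: Point3[], count: number): Point3[] {
  if (controls.length < 2 || count < 2) throw new Error("need at least 2 controls and 2 samples");
  const segments = controls.length - 1;
  const out: Point3[] = [];
  for (let i = 0; i < count; i++) {
    const u = (i / (count - 1)) * segments; // global parameter in [0, segments]
    const seg = Math.min(Math.floor(u), segments - 1);
    const t = u - seg;
    const p0 = controls[Math.max(seg - 1, 0)];
    const p1 = controls[seg];
    const p2 = controls[seg + 1];
    const p3 = controls[Math.min(seg + 2, segments)];
    out.push({
      x: catmullRom1D(p0.x, p1.x, p2.x, p3.x, t),
      y: catmullRom1D(p0.y, p1.y, p2.y, p3.y, t),
      z: catmullRom1D(p0.z, p1.z, p2.z, p3.z, t),
    });
  }
  return out;
}

// Hypothetical relief rule: each grid row takes its base height from the matching path
// point, and the terrain rises gently with lateral distance from the path.
function reliefHeights(path: Point3[], columns: number, width: number, rise = 0.05): Float32Array {
  const heights = new Float32Array(path.length * columns);
  for (let row = 0; row < path.length; row++) {
    for (let col = 0; col < columns; col++) {
      const x = (col / (columns - 1) - 0.5) * width; // column x, centered on 0
      const dx = x - path[row].x;
      heights[row * columns + col] = path[row].y + rise * dx * dx;
    }
  }
  return heights;
}

const controls: Point3[] = [
  { x: 0, y: 0, z: 0 },
  { x: 4, y: 2, z: -50 },
  { x: -3, y: 1, z: -100 },
  { x: 0, y: 0, z: -150 },
];
const userPath = samplePath(controls, 31);
const relief = reliefHeights(userPath, 21, 60);
console.log(userPath.length, relief.length);
```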

(Holiday VR game relief)

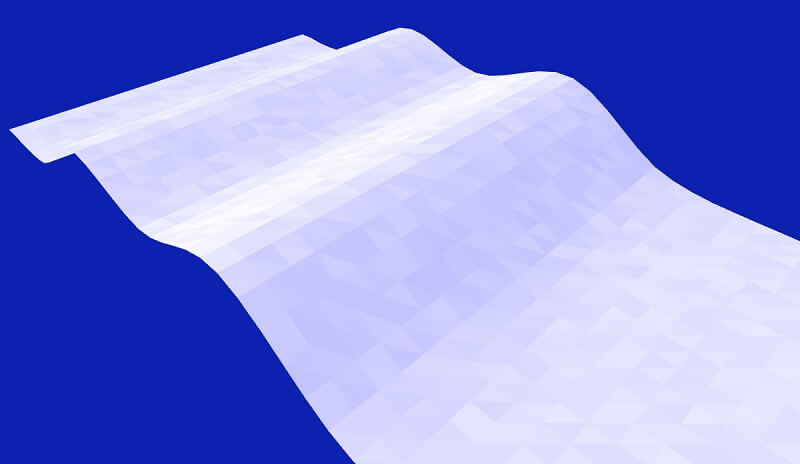

And... it looked rather ugly: more like a parquet floor than the snowy view we were looking for, and the colors were unrealistic, too. We needed lighting and shadows for a more realistic look, but calculating lighting for such a huge number of triangles is costly. Soooo… yup, cheating again. We calculated the triangle normals and used them to choose each triangle's color from the range. That way, we got a more natural look and avoided resource-intensive lighting calculations:
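Here is a minimal sketch of choosing a triangle's color from its normal. The color is computed once on the CPU while the relief is generated, so no lights are needed at render time. The fake sun direction and the two palette colors are hypothetical. The article only says that triangle normals drove the choice within a narrow color range.

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const normalize = (v: Vec3): Vec3 => {
  const len = Math.hypot(v[0], v[1], v[2]) || 1;
  return [v[0] / len, v[1] / len, v[2] / len];
};

// Hypothetical palette: a bluish-grey shadow tone and white snow (RGB in 0..1).
const SHADOW_TONE: Vec3 = [0.78, 0.82, 0.9];
const LIT_TONE: Vec3 = [1, 1, 1];
// Hypothetical fixed "sun" direction, used only to pick colors, never to light the scene.
const SUN_DIR: Vec3 = normalize([0.3, 1, 0.2]);

// Face normal of triangle (a, b, c) with counter-clockwise winding.
function faceNormal(a: Vec3, b: Vec3, c: Vec3): Vec3 {
  return normalize(cross(sub(b, a), sub(c, a)));
}

// Blends between the two tones by how directly the triangle faces the fake sun.
function triangleColor(a: Vec3, b: Vec3, c: Vec3): Vec3 {
  const k = Math.max(0, dot(faceNormal(a, b, c), SUN_DIR)); // 0 = facing away, 1 = facing the sun
  return [
    SHADOW_TONE[0] + k * (LIT_TONE[0] - SHADOW_TONE[0]),
    SHADOW_TONE[1] + k * (LIT_TONE[1] - SHADOW_TONE[1]),
    SHADOW_TONE[2] + k * (LIT_TONE[2] - SHADOW_TONE[2]),
  ];
}

const flat = triangleColor([0, 0, 0], [0, 0, 1], [1, 0, 0]); // faces straight up
const steep = triangleColor([0, 0, 0], [0, 0, 1], [0, 1, 0]); // vertical wall facing -x, away from the sun
console.log(flat, steep);
```

Each triangle's result would then be written into the geometry, for example through a per-vertex color attribute, so the renderer only interpolates stored colors.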

(Holiday VR game relief colored by triangle normals)

Then we needed some objects: trees, rocks, branches, stumps, etc. We used 3D models and placed them according to the path coordinates. We did not need to fill the whole plane, only the area near the path the user would run along.

To reduce loading time, we used only one tree model and randomly rotated and scaled each copy within a certain range to make the trees look different. For more visual variety, we also changed the trees' colors. Using various colors and textures, we managed to make the forest look less “flat” and more realistic.
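Here is a minimal sketch of placing many randomized copies of one model near the path. The offset, scale and tint ranges and the `scatterTrees` name are hypothetical. So is the seeded `mulberry32` generator, which the article does not mention; it simply makes a given forest reproducible from its seed.

```typescript
interface PathPoint {
  x: number;
  y: number;
  z: number;
}

interface Placement {
  x: number;
  y: number;
  z: number;
  rotationY: number; // radians
  scale: number;
  tint: number; // multiplier for the base tree color
}

// mulberry32: a tiny seeded PRNG returning values in [0, 1).
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical tuning values.
const MIN_OFFSET = 3; // keep the running track itself clear
const MAX_OFFSET = 25; // nothing is placed farther than this from the path
const SCALE_MIN = 0.7;
const SCALE_MAX = 1.4;
const TINT_MIN = 0.85;
const TINT_MAX = 1.0;

// Places `perPoint` copies of the single tree model beside every path point, each with a
// random side, distance, yaw, scale and tint, so one model reads as a varied forest.
function scatterTrees(path: PathPoint[], perPoint: number, rand: () => number): Placement[] {
  const out: Placement[] = [];
  for (const p of path) {
    for (let i = 0; i < perPoint; i++) {
      const side = rand() < 0.5 ? -1 : 1;
      const offset = MIN_OFFSET + rand() * (MAX_OFFSET - MIN_OFFSET);
      out.push({
        x: p.x + side * offset,
        y: p.y, // a fuller version would sample the relief height at (x, z)
        z: p.z,
        rotationY: rand() * Math.PI * 2,
        scale: SCALE_MIN + rand() * (SCALE_MAX - SCALE_MIN),
        tint: TINT_MIN + rand() * (TINT_MAX - TINT_MIN),
      });
    }
  }
  return out;
}

const trackPoints: PathPoint[] = [
  { x: 0, y: 0, z: 0 },
  { x: 2, y: 1, z: -10 },
  { x: 5, y: 1.5, z: -20 },
];
const trees = scatterTrees(trackPoints, 4, mulberry32(2016));
console.log(trees.length);
```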

To avoid light mapping and all the additional calculations that come with it, we “baked” shadows into the textures. We also had to fill some gaps near the horizon. To do this, we created a “fake” ground: just a white plane outside the user's path.

As a result, we got a winter forest landscape with a clear user path:

(VR game relief with trees)

The next step was to add the user's movement. There were two options: move the camera through the space, or move all the objects instead and leave the camera in its initial position. We chose something in between. The plane moved toward the user, simulating the run, while the camera rotated and slid along the width and height axes, following the path's coordinates and angle.
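Here is a minimal sketch of the per-frame camera update for this hybrid scheme. It assumes the path is stored as plane-local points spaced evenly along -z. It also assumes the camera follows the three.js convention: it looks down -z, and yaw is rotation about +y. `followPath`, `CameraRig`, `EYE_HEIGHT` and the speed are hypothetical.

```typescript
interface PathPoint {
  x: number;
  y: number;
  z: number;
}

interface CameraRig {
  x: number;
  y: number;
  yaw: number; // radians; 0 looks down -z, positive turns left
}

const EYE_HEIGHT = 1.6; // hypothetical camera height above the path

// `path` holds plane-local points spaced `spacing` apart along -z, and `travelled` is how far
// the plane has been pushed toward the camera (the plane's z offset). The camera never moves
// along z; it only slides in x/y and turns to match the path segment it is currently over.
function followPath(path: PathPoint[], spacing: number, travelled: number, cam: CameraRig): void {
  const f = travelled / spacing;
  const i = Math.max(0, Math.min(Math.floor(f), path.length - 2));
  const t = Math.max(0, Math.min(f - i, 1));
  const a = path[i];
  const b = path[i + 1];
  cam.x = a.x + (b.x - a.x) * t;
  cam.y = a.y + (b.y - a.y) * t + EYE_HEIGHT;
  // Forward is (-sin(yaw), 0, -cos(yaw)); solve it for the segment direction.
  cam.yaw = Math.atan2(-(b.x - a.x), -(b.z - a.z));
}

const SPEED = 12; // hypothetical run speed, scene units per second
const track: PathPoint[] = [
  { x: 0, y: 0, z: 0 },
  { x: 0, y: 0, z: -10 },
  { x: -10, y: 2, z: -20 },
];
const rig: CameraRig = { x: 0, y: 0, yaw: 0 };
let travelled = 0;
for (let frame = 0; frame < 60; frame++) {
  travelled += SPEED / 60; // the plane's z offset grows every frame
  followPath(track, 10, travelled, rig); // the camera only slides in x/y and turns
}
console.log(rig);
```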

To create the feeling of endless movement, we added a second plane with its own randomly generated movement path. We adjusted its relief accordingly, placed landscape objects on it, and set the plane lengths to match the environment size. To hide the joint between the two planes, we gave both planes the same relief height at their beginning and end. We also updated the plane positions every frame. Once a plane was behind the horizon at the user's back, we moved it beyond the horizon in front of the user and regenerated its relief and landscape objects. The objects were positioned randomly, and we reused them instead of generating new ones.
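Here is a minimal sketch of the two-plane loop. It assumes the camera sits at z = 0 looking down -z and that the planes move toward +z. The lengths, the `advance` and `regenerate` names and the random interior heights are hypothetical. A fuller version would build a smooth relief (for instance, from the Catmull-Rom path) and reposition the existing landscape objects during regeneration.

```typescript
const PLANE_LENGTH = 200; // hypothetical length of one terrain plane along z
const BEHIND_LIMIT = 100; // hypothetical distance behind the camera where the horizon hides a plane
const SEAM_HEIGHT = 0; // every plane starts and ends at this height, so any two planes join seamlessly

interface TerrainPlane {
  nearZ: number; // edge nearest the camera; the plane covers [nearZ - PLANE_LENGTH, nearZ]
  heights: number[]; // relief profile from the near edge to the far edge
  generation: number; // how many times this plane has been recycled
}

// Rebuilds the relief with both ends pinned to SEAM_HEIGHT.
function regenerate(plane: TerrainPlane, rand: () => number): void {
  const last = plane.heights.length - 1;
  for (let i = 0; i <= last; i++) {
    plane.heights[i] = i === 0 || i === last ? SEAM_HEIGHT : rand() * 10;
  }
  plane.generation++;
}

// Moves both planes toward the camera. Once a plane's far edge is more than BEHIND_LIMIT
// behind the camera, it is re-attached in front of the other plane with a fresh relief.
function advance(planes: [TerrainPlane, TerrainPlane], distance: number, rand: () => number): void {
  for (const plane of planes) plane.nearZ += distance;
  for (let i = 0; i < planes.length; i++) {
    const plane = planes[i];
    const other = planes[1 - i];
    if (plane.nearZ - PLANE_LENGTH > BEHIND_LIMIT) {
      plane.nearZ = other.nearZ - PLANE_LENGTH; // its near edge meets the other plane's far edge
      regenerate(plane, rand);
    }
  }
}

const makePlane = (nearZ: number): TerrainPlane => ({
  nearZ,
  heights: new Array<number>(11).fill(SEAM_HEIGHT),
  generation: 0,
});
const planes: [TerrainPlane, TerrainPlane] = [makePlane(0), makePlane(-PLANE_LENGTH)];
advance(planes, 350, Math.random);
console.log(planes.map((p) => p.nearZ)); // nearZ values: -50 and 150
```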

Next, we needed to add animated toys. At first, we loaded DAE (COLLADA) animation files because they were lightweight. But there was a problem. Our gameplay concept required duplicating animated objects, that is, having multiple identical toys, and the DAE animations did not duplicate correctly. In three.js, a DAE animation is a group of objects. When we tried to create duplicated characters, the animation did not play correctly, and dynamic texture changes broke as well. We did not find a solution to this issue, so we switched the animation format to JSON. The only animation left in DAE format was the deer, because it did not need to be duplicated.

At that point, we had all the objects we needed for the game: the toys and all the other visual stuff.

It was time to develop the catch algorithm. According to our gameplay scenario, the user had to throw an elf to hit a running toy so the elf could catch and pack it. The common technique for this kind of interaction is ray casting, but it is rather resource-consuming, especially with multiple objects. So instead, we calculated the distance from the elf to every toy. If the distance was small enough, we knew the elf was close enough to catch that toy. To make the game more interactive and exciting, we added a cool “fight cloud” animation, followed by an animation of the packing process.
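Here is a minimal sketch of the distance test used instead of ray casting. The catch radius and the `Toy` shape are hypothetical. Returning the nearest candidate rather than the first is our own choice. Comparing squared distances against the squared radius avoids a square root per toy.

```typescript
interface Vec {
  x: number;
  y: number;
  z: number;
}

interface Toy {
  id: number;
  position: Vec;
  caught: boolean;
}

const CATCH_RADIUS = 1.5; // hypothetical, in scene units

// Returns the nearest uncaught toy within `radius` of the elf, or null if none is close enough.
function findCatch(elf: Vec, toys: Toy[], radius: number = CATCH_RADIUS): Toy | null {
  const maxSq = radius * radius;
  let best: Toy | null = null;
  let bestSq = Infinity;
  for (const toy of toys) {
    if (toy.caught) continue;
    const dx = toy.position.x - elf.x;
    const dy = toy.position.y - elf.y;
    const dz = toy.position.z - elf.z;
    const distSq = dx * dx + dy * dy + dz * dz;
    if (distSq <= maxSq && distSq < bestSq) {
      best = toy;
      bestSq = distSq;
    }
  }
  return best;
}

// Usage: run the check every frame while the elf is in flight.
const toys: Toy[] = [
  { id: 1, position: { x: 4, y: 0, z: -20 }, caught: false },
  { id: 2, position: { x: 0.5, y: 0.2, z: -30 }, caught: false },
];
const elfPos: Vec = { x: 0, y: 0, z: -29.5 };
const hit = findCatch(elfPos, toys);
if (hit !== null) {
  hit.caught = true; // here the game would play the "fight cloud" and packing animations
  console.log(`elf caught toy ${hit.id}`);
}
```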

At last, ladies and gentlemen, our holiday VR game was ready!

We were about to say: “Watch out, Angry Birds, here we come!” Unfortunately, we faced one more problem: poor rendering performance on the iPhone 6. The reason was that we had too many landscape objects. As you may remember, we wanted to reduce the memory footprint and loading time, so we loaded only one model geometry and randomized its copies.

However, we created over 400 objects from that geometry and added them to the scene, and every single object required a separate draw call. We ended up with 400 additional draw calls per frame, even though the objects never changed. So we had to merge the objects to reduce the number of draw calls. Simply packing them into container objects did not help, because each child object is still drawn separately; we had to merge the geometries. In other words, we had a single tree geometry, but we needed a forest geometry.

So we created an empty geometry. For every tree on the plane, we set the tree geometry's offset, rotation and scale and copied the transformed result into it. We ended up with one big forest geometry drawn in a single draw call, which doubled our rendering performance.
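Here is a minimal, dependency-free sketch of the merge step on raw arrays. Each copy's scale, yaw and offset are baked into its vertex positions, and its triangle indices are shifted to point at its own vertices. `MeshData`, `TreeTransform` and `mergeCopies` are hypothetical names. In three.js, the resulting arrays could back a single `BufferGeometry` drawn by one mesh. Normals (which would also need the rotation), UVs and tint colors are omitted for brevity.

```typescript
interface MeshData {
  positions: number[]; // x, y, z per vertex
  indices: number[]; // three vertex indices per triangle
}

interface TreeTransform {
  x: number;
  y: number;
  z: number;
  rotationY: number; // radians
  scale: number; // uniform
}

// Bakes each copy's transform into its vertex positions and shifts its indices,
// producing one vertex/index buffer that can be drawn with a single call.
function mergeCopies(
  tree: MeshData,
  transforms: TreeTransform[],
): { positions: Float32Array; indices: Uint32Array } {
  const vertsPerTree = tree.positions.length / 3;
  const positions = new Float32Array(tree.positions.length * transforms.length);
  const indices = new Uint32Array(tree.indices.length * transforms.length);

  transforms.forEach((tr, copy) => {
    const cos = Math.cos(tr.rotationY);
    const sin = Math.sin(tr.rotationY);
    const pBase = copy * tree.positions.length;
    for (let v = 0; v < vertsPerTree; v++) {
      const x = tree.positions[3 * v] * tr.scale;
      const y = tree.positions[3 * v + 1] * tr.scale;
      const z = tree.positions[3 * v + 2] * tr.scale;
      // Rotation about +y, same convention as three.js Matrix4.makeRotationY.
      positions[pBase + 3 * v] = x * cos + z * sin + tr.x;
      positions[pBase + 3 * v + 1] = y + tr.y;
      positions[pBase + 3 * v + 2] = -x * sin + z * cos + tr.z;
    }
    const iBase = copy * tree.indices.length;
    for (let k = 0; k < tree.indices.length; k++) {
      indices[iBase + k] = tree.indices[k] + copy * vertsPerTree; // point at this copy's vertices
    }
  });

  return { positions, indices };
}

const triangle: MeshData = { positions: [0, 0, 0, 1, 0, 0, 0, 1, 0], indices: [0, 1, 2] };
const forest = mergeCopies(triangle, [
  { x: 0, y: 0, z: 0, rotationY: 0, scale: 1 },
  { x: 10, y: 0, z: -5, rotationY: Math.PI / 2, scale: 2 },
]);
console.log(forest.positions.length / 3, forest.indices.length / 3); // 6 vertices, 2 triangles
```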

And here is a valuable tip for developers. When the game starts, the player should look forward through the deer's antlers, but the initial view differs between Android and iOS devices. On iOS, every time you start the game and initialize the accelerometer controls component, the player looks straight ahead, just as you need. On Android, however, the initial rotation angle depends on how the phone is rotated. So on Android, you have to save the initial rotation value on the first frame of the game and then take it into account on every subsequent frame.
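Here is a minimal sketch of that fix. `YawCalibrator` and `wrapAngle` are hypothetical names, and the raw yaw would come from whatever orientation-controls component the game uses. The article only says the first-frame value is stored and accounted for afterward. Subtracting it and wrapping the result is our assumption of how to apply it. The `enabled` flag reflects the article's note that iOS did not need the correction.

```typescript
// Wraps an angle into the range [-PI, PI).
function wrapAngle(angle: number): number {
  const twoPi = 2 * Math.PI;
  return ((((angle + Math.PI) % twoPi) + twoPi) % twoPi) - Math.PI;
}

class YawCalibrator {
  private readonly enabled: boolean;
  private initialYaw: number | null = null;

  constructor(enabled: boolean) {
    this.enabled = enabled;
  }

  // Call once per frame with the raw yaw from the orientation controls.
  // The first reading is stored; later readings are returned relative to it,
  // so the game starts out looking straight ahead through the antlers.
  apply(rawYaw: number): number {
    if (!this.enabled) return rawYaw;
    if (this.initialYaw === null) this.initialYaw = rawYaw;
    return wrapAngle(rawYaw - this.initialYaw);
  }
}

// Hypothetical platform check; on iOS the article reports no correction was needed.
const isAndroid = true;
const calibrator = new YawCalibrator(isAndroid);
console.log(calibrator.apply(1.2), calibrator.apply(1.5));
```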

3D

All character models for the game were created in Maya using simple polygonal modeling tools. Due to the technical limitations of our game engine and target devices, the models were very low-poly (fewer than 1,000 triangles) and had very small 128x128-pixel textures. The most detailed parts of the textures were the characters' faces.

(Monkey Model)

Most characters were created in the “T” pose to make the rigging and skinning processes smoother.

(Skinning process)

Inside the game engine, we used morphing (morph targets) for character animation. Before baking the morph targets, however, we created skeletons and control rigs in Maya for all characters.

After that, we created motion cycles for the characters and baked the animation into a format the game engine could use.
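To illustrate what morph-target playback involves, here is a minimal sketch. It treats each baked frame as a full copy of the vertex positions and blends the two frames around the current time, looping the motion cycle. The `MorphClip` shape and frame rates are hypothetical, and the engine's actual playback code may differ. In three.js, setting two adjacent entries of a mesh's `morphTargetInfluences` to (1 - weight) and weight produces the same blend on the GPU.

```typescript
interface MorphClip {
  frames: Float32Array[]; // one baked morph target per frame: x, y, z for every vertex
  fps: number; // hypothetical baking rate
}

// Returns the two frames to blend at `time` (seconds) and the weight of the second one.
// The last frame blends back into the first, so a motion cycle loops seamlessly.
function frameBlend(clip: MorphClip, time: number): { from: number; to: number; weight: number } {
  const count = clip.frames.length;
  const f = (((time * clip.fps) % count) + count) % count;
  const from = Math.floor(f);
  return { from, to: (from + 1) % count, weight: f - from };
}

// CPU version of the blend: linear interpolation between the two frames.
function samplePositions(clip: MorphClip, time: number): Float32Array {
  const { from, to, weight } = frameBlend(clip, time);
  const a = clip.frames[from];
  const b = clip.frames[to];
  const out = new Float32Array(a.length);
  for (let i = 0; i < a.length; i++) {
    out[i] = a[i] + (b[i] - a[i]) * weight;
  }
  return out;
}

// A two-vertex "walk cycle" with three baked frames.
const walk: MorphClip = {
  fps: 2,
  frames: [
    new Float32Array([0, 0, 0, 1, 0, 0]),
    new Float32Array([0, 1, 0, 1, 1, 0]),
    new Float32Array([0, 0.5, 0, 1, 0.5, 0]),
  ],
};
console.log(samplePositions(walk, 0.25));
```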

Animation Hacks

Although WebGL played the leading role in this game, everything started with the intro page markup. Writing the HTML and CSS was not a big deal, even though we had many unusual elements such as polygonal borders. The tough part was the sheer number of animations, so we had to plan them from the very beginning.

(VR game UI)

Every animatable element usually starts in one position, does its thing and ends up in another. We wanted full control over that, so instead of native CSS animations, we used the JavaScript animation library Velocity.js. With it, we could tune animations much more easily without worrying that a change would affect other related elements. The big problem with CSS animations is sequencing, or rather the lack of it. There are techniques that work around this, but they do not give you as much flexibility as Velocity.js. That is why we used it to animate almost all of the game's HTML elements.

(VR game UI: logo)

The North Pole without snow would be weird, so, naturally, we added some snow. At first, the snow turned out to be quite laggy because we implemented it in HTML: tons of HTML snowflake elements falling from the top of the screen were really bad for the website's performance. So we switched to canvas, which in our case was a great option for rendering many small elements.
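Here is a minimal sketch of the canvas approach. It assumes the flakes are kept in one typed array and drawn as a single path with one `fill()` call per frame. `Snowfall`, `SnowContext` and the size and speed ranges are hypothetical. `SnowContext` lists only the 2D-context methods the sketch uses, so a real `CanvasRenderingContext2D` can be passed in. In the browser, `draw` would run inside a `requestAnimationFrame` loop after setting `fillStyle` to white.

```typescript
// The subset of CanvasRenderingContext2D used here; a real 2D context satisfies it.
interface SnowContext {
  clearRect(x: number, y: number, w: number, h: number): void;
  beginPath(): void;
  moveTo(x: number, y: number): void;
  arc(x: number, y: number, radius: number, startAngle: number, endAngle: number): void;
  fill(): void;
}

class Snowfall {
  readonly flakes: Float32Array; // 4 floats per flake: x, y, radius, fall speed (px/s)
  private readonly width: number;
  private readonly height: number;
  private readonly rand: () => number;

  constructor(count: number, width: number, height: number, rand: () => number = Math.random) {
    this.width = width;
    this.height = height;
    this.rand = rand;
    this.flakes = new Float32Array(count * 4);
    for (let i = 0; i < this.flakes.length; i += 4) {
      this.flakes[i] = rand() * width;
      this.flakes[i + 1] = rand() * height;
      this.flakes[i + 2] = 1 + rand() * 2; // hypothetical radius range: 1-3 px
      this.flakes[i + 3] = 30 + rand() * 60; // hypothetical speed range: 30-90 px/s
    }
  }

  // Moves every flake down; flakes that leave the bottom re-enter at the top.
  update(dt: number): void {
    const f = this.flakes;
    for (let i = 0; i < f.length; i += 4) {
      f[i + 1] += f[i + 3] * dt;
      if (f[i + 1] - f[i + 2] > this.height) {
        f[i] = this.rand() * this.width;
        f[i + 1] = -f[i + 2];
      }
    }
  }

  // One path and one fill() for all flakes, instead of one DOM element per flake.
  draw(ctx: SnowContext): void {
    const f = this.flakes;
    ctx.clearRect(0, 0, this.width, this.height);
    ctx.beginPath();
    for (let i = 0; i < f.length; i += 4) {
      ctx.moveTo(f[i] + f[i + 2], f[i + 1]); // start each circle on its edge to avoid connecting lines
      ctx.arc(f[i], f[i + 1], f[i + 2], 0, Math.PI * 2);
    }
    ctx.fill();
  }
}

const snow = new Snowfall(200, 360, 640);
snow.update(1 / 60);
console.log(snow.flakes.length / 4);
```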

In Web VR We Trust

What can we say? Interacting with VR can be an awesome experience, especially when you do not need to download any additional apps: you just take your phone and start having fun right away. For us, developing for VR was pure fun as well, even though we had to work both quickly and thoroughly. The deadline was Christmas, and Christmas cannot be rescheduled. We even managed to break a mobile phone while testing the game. Fortunately, it was an old iPhone 4. But the result was definitely worth it.

11,761 players tried out our game during the holiday season, and we received numerous positive comments. We are also proud of receiving industry awards such as Mobile Site of the Day (MOTD) by the Favourite Website Awards (FWA) and People’s Champ in the Experimental Category by the Pixel Awards.

If you are looking for creative ways to generate extra value for your users through engaging VR experiences, we will be happy to help you implement your ideas. Get in touch with our team of VR app development experts.
