Short time, big results

In 2015, Google’s research team developed DeepDream, an algorithm built on a convolutional neural network (GoogLeNet) that had been created for the ImageNet Large Scale Visual Recognition Challenge 2014. The algorithm amplifies the patterns that the trained network recognizes in an image, reinforcing shapes that resemble what it has learned. In the example below, a balloon turns into birds and flowers turn into people or animals, because parts of these objects resemble images on which the network was trained.

This was only the first step. Within a year, the first exhibition of such works, 'Deep Dream: The Art of Neural Networks', was held. The best images sold for $2,200 to $8,000, and all the money raised ($97,600) was donated to the Gray Area Foundation for the Arts.

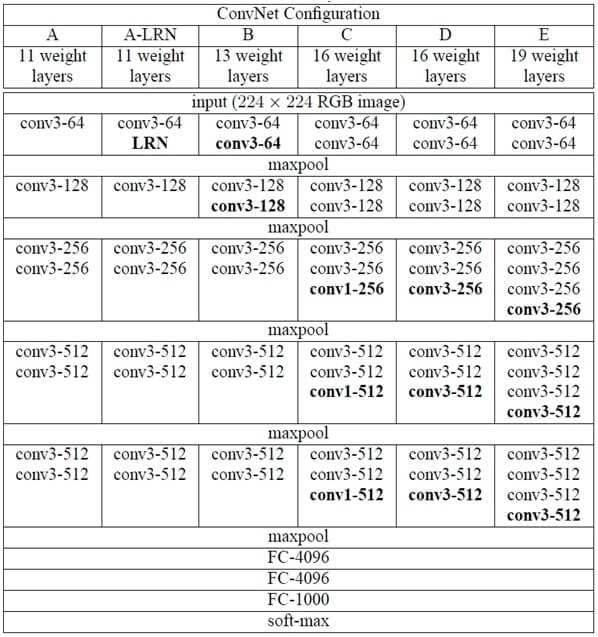

Following Google’s research, in mid-2015 a group of German scientists published the article 'A Neural Algorithm of Artistic Style'. They described an algorithm for artistic style transfer built on VGG-19, a trained 19-layer convolutional network for image recognition. The algorithm can combine the style of one picture with the content of another.

“The key finding of this paper is that the representations of content and style in the convolutional neural network are separable. That is, we can manipulate both representations independently to produce new, perceptually meaningful images,” the authors write.

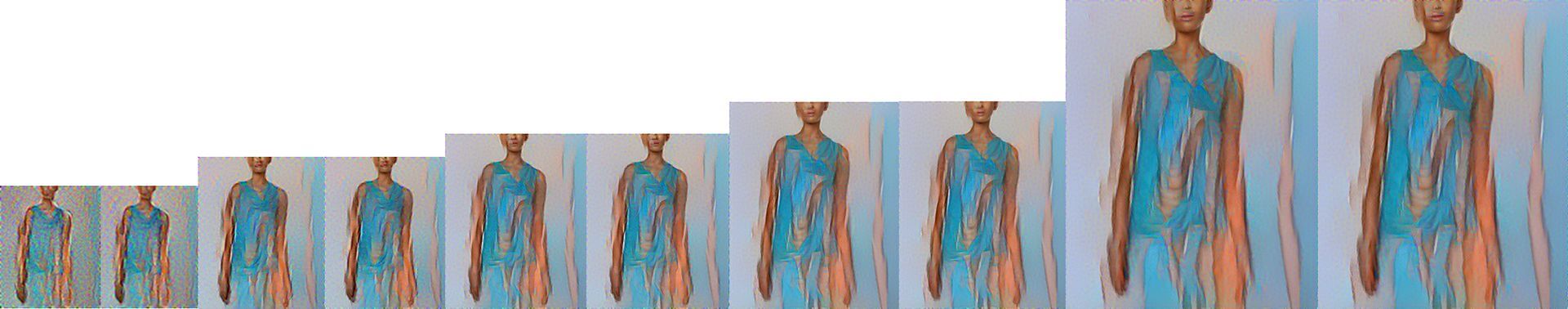

The rows show the result of matching the style representation of increasing subsets of the CNN layers. We find that the local image structures captured by the style representation increase in size and complexity when including style features from higher layers of the network. This can be explained by the increasing receptive field sizes and feature complexity along the network’s processing hierarchy. The columns show different relative weightings between the content and style reconstruction. The number above each column indicates the ratio α/β between the emphasis on matching the content of the photograph and the style of the artwork.
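For reference, the paper defines the loss behind this ratio as a weighted sum of a content term and a style term. In its notation, $\vec{p}$ is the photograph, $\vec{a}$ the artwork and $\vec{x}$ the generated image; $F^l$ and $P^l$ are the layer-$l$ feature responses of $\vec{x}$ and $\vec{p}$; $G^l$ and $A^l$ are the Gram matrices of $\vec{x}$ and $\vec{a}$; $N_l$ is the number of filters in layer $l$, $M_l$ the size (height × width) of each of its feature maps, and $w_l$ a per-layer weight:

$$\mathcal{L}_{total}(\vec{p},\vec{a},\vec{x}) = \alpha\,\mathcal{L}_{content}(\vec{p},\vec{x}) + \beta\,\mathcal{L}_{style}(\vec{a},\vec{x})$$

$$\mathcal{L}_{content}(\vec{p},\vec{x}) = \frac{1}{2}\sum_{i,j}\left(F^l_{ij} - P^l_{ij}\right)^2$$

$$G^l_{ij} = \sum_k F^l_{ik}F^l_{jk}, \qquad E_l = \frac{1}{4N_l^2M_l^2}\sum_{i,j}\left(G^l_{ij} - A^l_{ij}\right)^2, \qquad \mathcal{L}_{style}(\vec{a},\vec{x}) = \sum_l w_l E_l$$

A larger α/β therefore keeps more of the photograph's content, and a smaller one pushes the result toward the artwork's style.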

In 2016, the mobile application Prisma was released. It works on the same principle: images are processed on the server using predefined filters (artistic styles or techniques).

Shortly afterwards, Hyundai presented a photo of its new Creta car processed with Prisma, and many similar services, such as Mlvch, Vinci and Artisto, appeared.

Naturally, the trend expanded to other kinds of art and went beyond mere recognition and reproduction. For example, in mid-2016, Google launched Magenta, a project focused on machines creating original artistic content rather than copying the prominent features of existing works of art. At the time of writing, Magenta’s only ‘creative achievement’ is a 90-second melody generated from a four-note primer, with drums added by a human.

The most recent achievement, presented in early September 2016, is WaveNet, a convolutional neural network that synthesizes speech and music directly as raw audio at a high sampling rate. In DeepMind’s listening tests, WaveNet sounded considerably more natural than the best previous TTS systems. It can imitate breathing and articulation, making computer speech sound far more human. At the time of writing, samples are available in US English and Mandarin Chinese.

As these techniques spread among users, they face a lot of criticism. In particular, their creativity, artistic value and novelty are often questioned.

Here are a few examples of what people say on designer forums and discussion boards:

“I would not call the image processed in Prisma ‘art’, but the picture may just become interesting.”

“To simplify, art is when you do not mindlessly redraw the image or click on a button in the app, but when you have a goal, and you think it over, and you go for it. Art is consciousness, and an artist decides what tool to use - paint, fixtures or application code.”

“Prisma application is just a primitive toy for the novelty-hungry.”

However, an opposite opinion is also common:

“Neural networks are everywhere. I do not expect that they will take away the bread of artists and designers, but it took my phone a minute to make quite interesting art work from several mediocre pictures.”

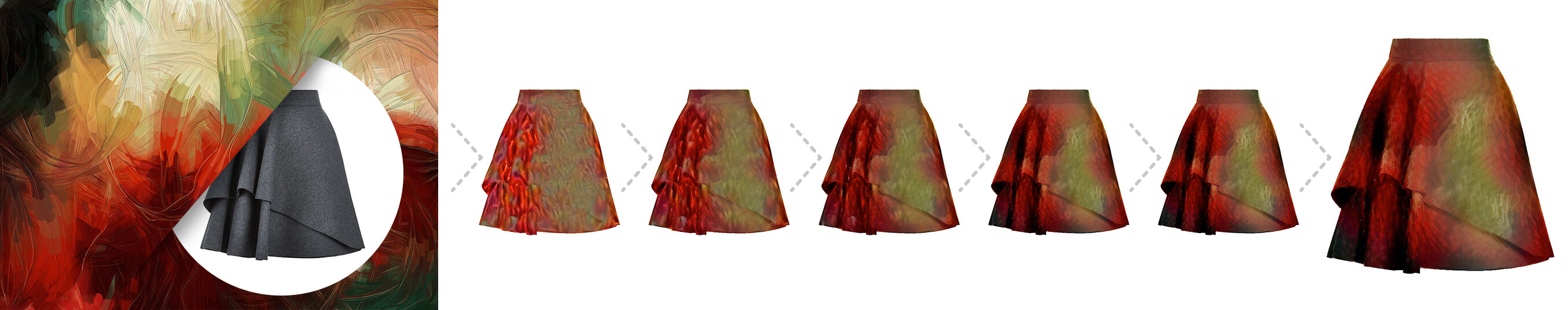

Encouraged by the optimistic feedback, we decided to apply our experience in data science and experiment with how similar algorithms could be used in the fashion industry as a creative tool for fashion designers.

Understanding the evolution of fashion

Fashion is a new area for machine learning. To design clothes, one needs to understand the mechanisms of fashion: what causes trends and how they spread, the principles of cyclic repetition, and patterns of evolution. Drawing on all of this, designers create the fashion of tomorrow.

Now, however, designing or predicting trends can be simplified thanks to a new class of neural networks that can automatically detect shapes, elements and types of clothing and then combine them. By analyzing how clothes have developed over time, this approach makes it possible to identify fashion cycles of different lengths, from 100 years down to 3 years.

According to several fundamental studies by designers and sociologists, the overall appearance and construction of clothing are completely renewed over a 21-22 year cycle. The same studies have shown that there are also cycles of 3, 8, 13 and 33-34 years, within which all clothing forms, including details and cuts, also change. Moreover, each type of clothing has its own independent development cycle. Historical cycles that bring radical changes may last up to 150 years.
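The studies cited above do not say how these cycle lengths were measured. As an illustration only, one standard way to estimate a dominant cycle from a yearly series is autocorrelation: compare the series with a shifted copy of itself and pick the shift (in years) at which the two match best. This is a swapped-in statistical technique, not the neural approach described above; the function names are hypothetical, and the "fashion indicator" (for example, the yearly share of garments with a given cut) is synthetic.

```python
import math
from typing import Sequence


def autocorrelation(series: Sequence[float], lag: int) -> float:
    """Sample autocorrelation at `lag`, normalized by the full-series variance."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    return sum(dev[t] * dev[t + lag] for t in range(n - lag)) / denom


def dominant_cycle(series: Sequence[float], min_lag: int = 3, max_lag: int = 60) -> int:
    """Return the lag (in series steps, e.g. years) with the highest autocorrelation.

    min_lag skips the trivially high correlation between neighbouring years;
    3 matches the shortest cycle mentioned in the text.
    """
    return max(range(min_lag, max_lag + 1), key=lambda k: autocorrelation(series, k))


if __name__ == "__main__":
    # Synthetic stand-in for a yearly fashion indicator with a 21-year cycle.
    indicator = [math.sin(2 * math.pi * t / 21) for t in range(200)]
    print(dominant_cycle(indicator))
```

Real data would mix several cycles and noise, so in practice one would look at several autocorrelation peaks rather than only the highest one.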

Thus, a network trained to distinguish popular from unpopular clothing patterns and designs could predict the popularity of a given garment within a chosen cycle. However much the appearance and cut of clothes change over the years, comparing garments from different eras reveals a number of analogous features, though the degree of similarity varies. Fashion history has no exact duplicates of garments from previous cycles, but there is always some similarity in cuts, shapes or designs.
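The article does not describe the network or the features such a classifier would use. As a minimal sketch, assuming each garment is already described by a numeric feature vector and labeled popular (1) or unpopular (0), a logistic-regression classifier, the simplest one-layer network, can be trained by gradient descent and then used to score new designs. The feature, the labels and all function names below are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def popularity_score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Estimated probability that a garment with features x is popular."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_popularity_classifier(features: List[Sequence[float]], labels: List[int],
                                lr: float = 1.0, epochs: int = 2000) -> Tuple[List[float], float]:
    """Logistic regression (a one-layer network) fitted by batch gradient descent.

    labels: 1 = popular, 0 = unpopular.
    """
    n, d = len(features), len(features[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            err = popularity_score(w, b, x) - y  # derivative of the log-loss w.r.t. the logit
            for j in range(d):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Hypothetical single feature: how closely a design matches the current cycle stage (0..1).
    X = [[0.0], [0.2], [0.8], [1.0]]
    y = [0, 0, 1, 1]
    w, b = train_popularity_classifier(X, y)
    print(popularity_score(w, b, [0.9]))
```

A real model would replace the hand-picked feature with features learned from garment images, which is where the convolutional networks discussed below come in.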

The basic parts of clothes, such as sleeves, collars or pockets, also recur cyclically. These cycles last about 11-13 years, after which the parts return symmetrically to their original form, but at a higher technical or aesthetic level. When creating seasonal collections, designers often introduce new properties that change the form of a garment, and as such qualitative changes accumulate, the shape itself changes. However, a designer’s task is not limited to predicting symmetrical repetitions. It is equally important to know the upcoming fashion trends in order to create a popular product.

Neural style transfer methods and outcomes

We compared three methods that can support the creative process: neural style transfer based on Keras, neural image analogy based on the Visual Geometry Group network (vgg_16), and neural style based on MXNet.

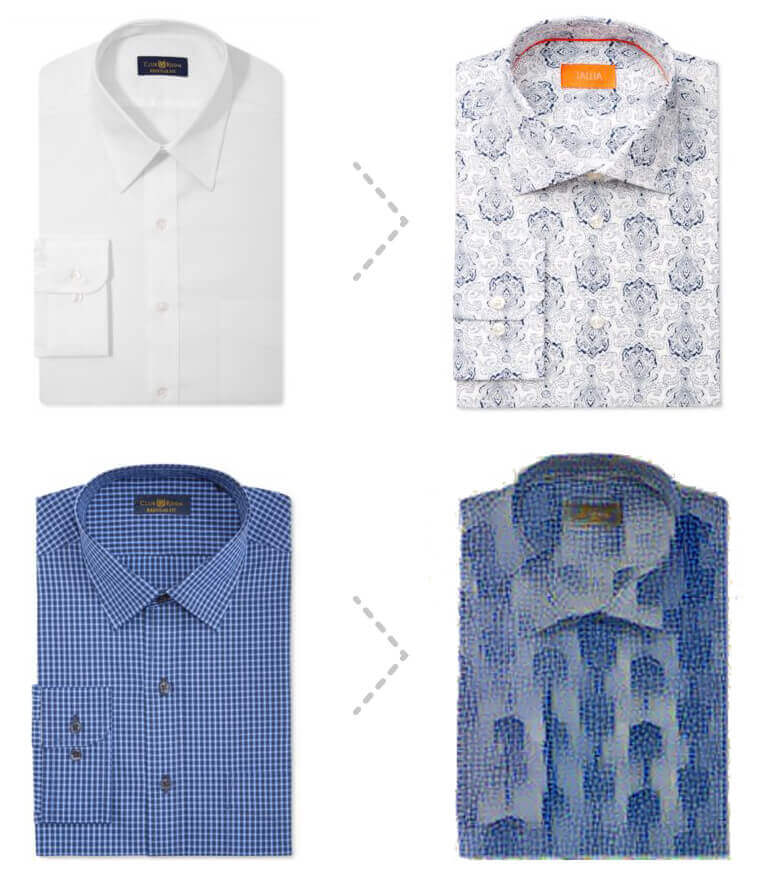

The Keras neural style transfer runs SciPy-based optimization (L-BFGS) over the pixels of the generated image to minimize the neural style loss with respect to the style image. To speed up processing, one can use only the last one or two layers. The entire network looks like this:

The results we got were postprocessed (after transforming the width and height for the Gram matrix):

As you can see, the shirt’s new style is a genuine mix of the two images, rather than simply filling all the blue areas with a checked pattern.
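The Gram matrix is what turns a feature map into a style representation: width and height are flattened into a single axis, and only the correlations between channels are kept. The pure-Python sketch below computes it, together with a single-layer style loss normalized as in Gatys et al. (divided by 4·N²·M², with N channels and M = height × width). A real implementation works on VGG feature maps with tensor operations; the Keras example script minimizes a loss of this kind, plus a content term, with SciPy's `fmin_l_bfgs_b`. The function names here are illustrative, not the Keras API.

```python
from typing import List

Matrix = List[List[float]]
FeatureMap = List[List[List[float]]]  # height x width x channels


def flatten_feature_map(fmap: FeatureMap) -> Matrix:
    """Reshape an H x W x C feature map into an (H*W) x C matrix.

    Spatial positions collapse into one axis, so only channel statistics remain.
    """
    return [pixel for row in fmap for pixel in row]


def gram_matrix(fmap: FeatureMap) -> Matrix:
    """C x C matrix with G[i][j] = sum over positions of F[p][i] * F[p][j]."""
    rows = flatten_feature_map(fmap)
    channels = len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) for j in range(channels)]
            for i in range(channels)]


def style_layer_loss(style_fmap: FeatureMap, generated_fmap: FeatureMap) -> float:
    """sum((G - A)^2) / (4 * N^2 * M^2) for one layer."""
    a = gram_matrix(style_fmap)
    g = gram_matrix(generated_fmap)
    n = len(a)                                  # N: number of channels (filters)
    m = len(style_fmap) * len(style_fmap[0])    # M: height * width
    squared = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return squared / (4.0 * n ** 2 * m ** 2)


if __name__ == "__main__":
    style = [[[1.0, 0.0], [0.0, 1.0]]]      # a 1 x 2 map with 2 channels
    generated = [[[0.5, 0.5], [0.5, 0.5]]]
    print(gram_matrix(style), style_layer_loss(style, generated))
```

Because the Gram matrix sums over all positions, it records which textures and colors occur together but not where they occur, which is why the transferred style blends into the garment instead of being copied into place.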

The second method, neural image analogy, also uses the weights of the Visual Geometry Group network and a SciPy optimizer (L-BFGS). Unlike neural style transfer, however, it captures the changes between an original image and its transformed version and applies them to a third image to produce the result you want. This optimization can also use only part of the network and scaled or resized images.
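The core of an image analogy (A is to A′ as B is to B′) is a matching step: each patch of B is paired with its most similar patch of A, and the corresponding patch of A′ becomes the target for the output B′. Neural image-analogy tools do this matching on deep (for example, VGG) features and blend it into a loss minimized with L-BFGS. The sketch below keeps only that core under simplifying assumptions: each "patch" is a plain feature vector, similarity is squared Euclidean distance, and all function names are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def squared_distance(u: Sequence[float], v: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(u, v))


def analogy_targets(a: List[Vector], a_prime: List[Vector], b: List[Vector]) -> List[Vector]:
    """For each feature vector of B, find the nearest vector in A and
    return the corresponding vector of A' as the target for B'.

    a[i] and a_prime[i] must describe the same position (patch) in A and A'.
    """
    if len(a) != len(a_prime):
        raise ValueError("A and A' must have the same number of patches")
    targets = []
    for feat in b:
        best = min(range(len(a)), key=lambda i: squared_distance(a[i], feat))
        targets.append(a_prime[best])
    return targets


def analogy_loss(b_prime: List[Vector], targets: List[Vector]) -> float:
    """Sum of squared differences between B' features and their matched targets."""
    return sum(squared_distance(x, t) for x, t in zip(b_prime, targets))


if __name__ == "__main__":
    a = [[0.0, 0.0], [10.0, 10.0]]   # features of the original image A
    a_prime = [[1.0], [2.0]]          # features of the transformed image A'
    b = [[9.0, 9.0], [1.0, 0.0]]      # features of the new image B
    print(analogy_targets(a, a_prime, b))
```

The matching step also explains the advice about shapes below: if B contains a region with no close counterpart in A, its nearest match is poor and the transferred result tends to blur.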

By varying settings such as scale, network depth and the number of optimizer iterations, we can get images of different quality and content. Note that it is important to pick images with similar shapes to avoid elements blurred by the transfer: the optimizer cannot fully replace a background or an element that is present in the target picture.
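In image-analogy tools, the scaling setting typically means coarse-to-fine optimization: the image is first optimized at a low resolution, and each result is upsampled and refined at the next, larger size, so the overall layout settles before fine detail. The sketch below only computes such a schedule; `AnalogySettings` and its fields are hypothetical names for the settings listed above, and the default values are arbitrary.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AnalogySettings:
    """Hypothetical knobs matching the settings discussed in the text."""
    num_scales: int = 3              # how many resolutions to optimize at
    scale_factor: float = 0.5        # size ratio between consecutive scales
    num_layers: int = 3              # network depth feeding the loss (used by the optimizer, not shown)
    iterations_per_scale: int = 10   # optimizer iterations at each resolution (not shown)


def scale_schedule(width: int, height: int, settings: AnalogySettings) -> List[Tuple[int, int]]:
    """Image sizes from the coarsest resolution up to the full resolution."""
    sizes = []
    for step in range(settings.num_scales):
        factor = settings.scale_factor ** (settings.num_scales - 1 - step)
        sizes.append((max(1, round(width * factor)), max(1, round(height * factor))))
    return sizes


if __name__ == "__main__":
    print(scale_schedule(512, 384, AnalogySettings()))
```

More scales and iterations generally cost more time, which is the trade-off between quality and speed that these settings control.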

During style transfer, the neural network not only rebuilds colors but also tries to determine which parts correspond to each other (hand to hand, face to face, pants to pants). The process of such scaled transfer looks like this:

An example of a successful shape selection:

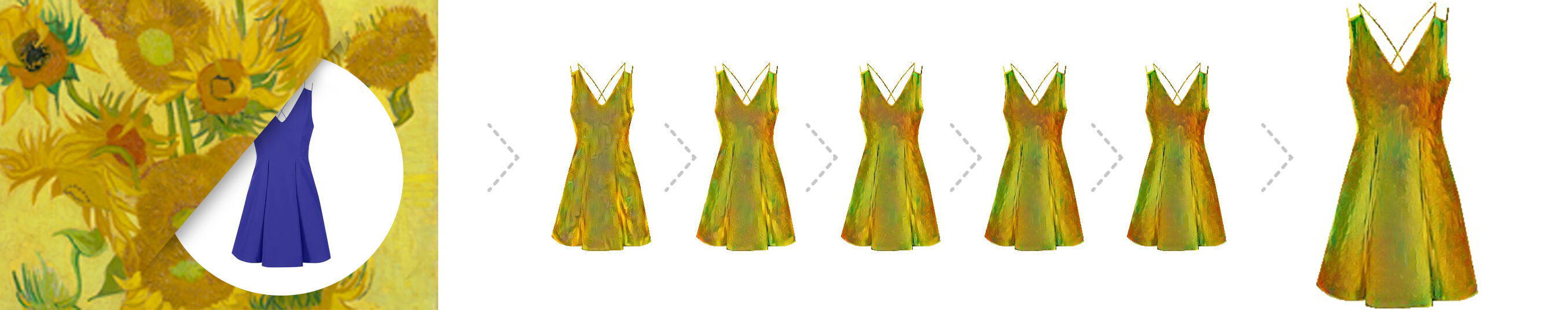

The third method, MXNet neural art, is also based on pretrained VGG weights. The model measures how the generated image differs from the content image and from the style image. The style loss converges during training: the smaller it is, the more similar the style. MXNet uses SGD to produce an image whose style is similar to that of the chosen work of art:

MXNet neural art can transfer the style of artworks while taking into account the properties of different clothing components, such as sleeves, folds and collars.
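To make the optimization step concrete, here is a toy version under strong assumptions: the "image" is a small matrix whose rows are pixel positions and whose columns are channels, it serves as its own feature map (no VGG), and the gradient of the combined content and style loss is written out by hand rather than computed by MXNet's backpropagation. It uses plain gradient descent without momentum and Gram matrices normalized by the number of positions; all names and values are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # rows = pixel positions, columns = channels


def gram(f: Matrix) -> Matrix:
    """Gram matrix normalized by the number of positions: G = F^T F / N."""
    n, c = len(f), len(f[0])
    return [[sum(f[p][i] * f[p][j] for p in range(n)) / n for j in range(c)]
            for i in range(c)]


def total_loss(x: Matrix, content: Matrix, style_gram: Matrix,
               alpha: float, beta: float) -> float:
    """alpha * content loss + beta * style loss for the toy image x."""
    g = gram(x)
    c = len(g)
    content_term = sum((x[p][k] - content[p][k]) ** 2
                       for p in range(len(x)) for k in range(c))
    style_term = sum((g[i][j] - style_gram[i][j]) ** 2
                     for i in range(c) for j in range(c))
    return alpha * content_term + beta * style_term


def loss_gradient(x: Matrix, content: Matrix, style_gram: Matrix,
                  alpha: float, beta: float) -> Matrix:
    """Hand-derived gradient: 2*alpha*(x - P) + beta * (4/N) * x (G - A)."""
    n = len(x)
    g = gram(x)
    c = len(g)
    diff = [[g[i][j] - style_gram[i][j] for j in range(c)] for i in range(c)]
    return [[2 * alpha * (x[p][k] - content[p][k])
             + beta * 4.0 / n * sum(x[p][i] * diff[i][k] for i in range(c))
             for k in range(c)]
            for p in range(n)]


def stylize(content: Matrix, style: Matrix, alpha: float = 1.0, beta: float = 10.0,
            lr: float = 0.01, steps: int = 200) -> Tuple[Matrix, List[float]]:
    """Gradient descent on the pixels, starting from the content image."""
    style_gram = gram(style)
    x = [row[:] for row in content]
    history = [total_loss(x, content, style_gram, alpha, beta)]
    for _ in range(steps):
        grad = loss_gradient(x, content, style_gram, alpha, beta)
        x = [[x[p][k] - lr * grad[p][k] for k in range(len(x[p]))]
             for p in range(len(x))]
        history.append(total_loss(x, content, style_gram, alpha, beta))
    return x, history


if __name__ == "__main__":
    content = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.6, 0.4]]
    style = [[0.1, 0.9], [0.2, 0.8], [0.5, 0.5], [0.0, 1.0]]
    image, history = stylize(content, style)
    print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

Raising `beta` relative to `alpha` lets the style statistics win over fidelity to the content image, mirroring the α/β trade-off in the original paper.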

A conclusion — where technology meets philosophy

Our comparison of convolutional neural networks trained to detect and describe objects shows that the results can be quite promising for the fashion industry. At the same time, the methods we used gave visually better results than those based on autoencoders.

The reviewed methods could be improved by training them on clothing images only, so that they acquire clothing-specific weights instead of general VGG weights. Once popularity ratings are added, we would be able to predict the popularity of a clothing item.

The main benefit of such models is that they can help generate ideas for designing clothes or their elements, especially when the design process is likely to take longer than one fashion cycle. If the current and target stages of the cycle are known, fashion trends or any successful elements can be transferred from one garment, or even from a work of art, to another.

Accordingly, neural networks could help reduce the marginalization of modern art and fashion. They can boost commercial projects in which the needs of people and society are the main drivers for creative designers, who must learn, understand and feel these needs and translate them into concrete shapes and images. In fact, studying these needs (consumer preferences and demands, the properties and qualities of clothes, requirements for different types of clothes and more) is an essential step before creating anything new.

“Too many clothes kills clothes… Fashion has changed,” 62-year-old Jean Paul Gaultier said. “A proliferation of clothing. Eight collections per season: that’s 16 a year… The system doesn’t work… There aren’t enough people to buy them. We’re making clothes that aren’t destined to be worn.”

However powerful and sophisticated the technology we use, it will always be hard to determine whether a picture or video created on a smartphone is a work of art. We can only say that, in our opinion, modern technologies are essentially tools like more traditional instruments; they just work in their own way. Therefore, it does not matter what tools were used to create a work of art. No ink, pencil, canvas, perfect technique or Prisma can turn an ordinary picture into a work of art; these are just tools, and only humans can use them skillfully and consciously.

Feel free to share your thoughts in the comments section. And if you have questions on how to apply data science to address your specific challenges, don't hesitate to contact our data science team.
