Remember when Facebook scaled back its AI chatbots after they failed to understand users 70 percent of the time? This year brought many other hilarious AI failures that amused and even shocked the community. Well, no one is immune to failure when adopting technological innovation. However, many of these blunders could have been avoided.

Nowadays, there is a lot of manipulation around the problems of artificial intelligence. Some consider AI pure marketing hype; others blame pseudo-researchers for fuelling discussions around the technology. The fact remains that, although artificial intelligence has a controversial status, people want to use it, even though it has not yet been fully explored. This brings us to the main philosophical problem of the AI field: is it possible to model human thinking?

Building interaction with computers through natural language (NL) is one of the most important goals of artificial intelligence research. Databases, application modules, and AI-based expert systems require a flexible interface, since users mostly do not want to communicate with a computer in an artificial language. While many fundamental problems in Natural Language Processing (NLP) remain unsolved, it is possible to build application systems whose interfaces understand natural language under specific constraints.

In this article, we will show you where to start building your NLP application so that you avoid wasting money and frustrating your users with yet another senseless AI.

NLU vs NLP - learn the difference

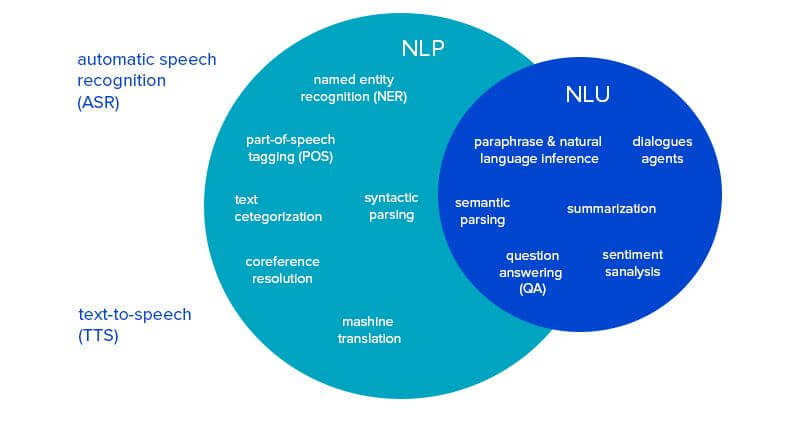

The terms NLU and NLP are often misunderstood and considered interchangeable. However, the difference between these two techniques is essential. So, let us sort things out.

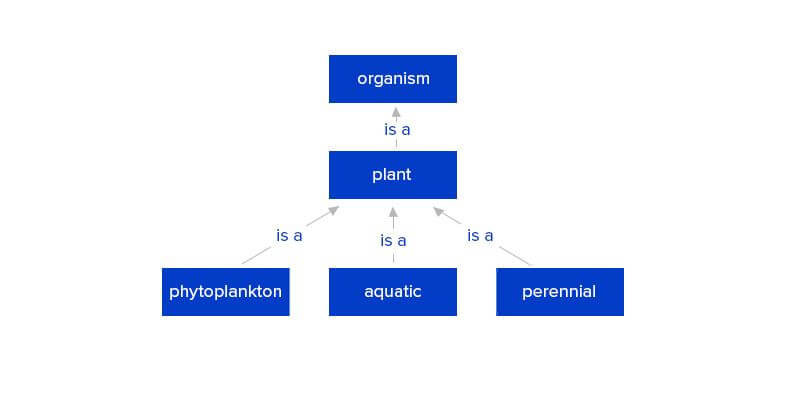

NLU is a narrow subset of NLP. It stands for Natural Language Understanding and is one of the most challenging tasks in AI. Its fundamental purpose is to handle unstructured content and turn it into structured data that computers can easily understand.

Meanwhile, Natural Language Processing (NLP) refers to all the systems that work together to analyse text, both written and spoken, derive meaning from it, and respond to it adequately. NLP also covers tasks such as automatic summarisation, named entity recognition, translation, and speech recognition.

In other words, NLU and NLP, which combine machine learning, AI, and computational linguistics, enable more natural communication between humans and computers.

Fig. 1. The tasks of NLP and NLU.

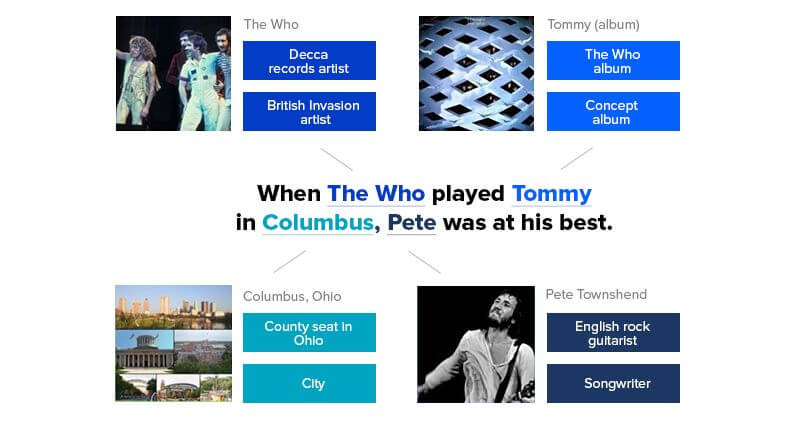

NLU can be applied to create chatbots and engines capable of understanding assertions or queries and responding accordingly. See the examples below.

What’s causing NLU engines to fail

As human language is ambiguous, NLP remains a challenging area of computer science. Natural language is rich in jargon, slang, polysemy, and so on, so it is often difficult for computers to interpret sentences correctly.

You can find more information on natural language ambiguity on the Tutorialspoint portal, which suggests the following levels of ambiguity:

- Lexical ambiguity: occurs at a very primitive level, such as the word level. For example, should the word “board” be treated as a noun or a verb?

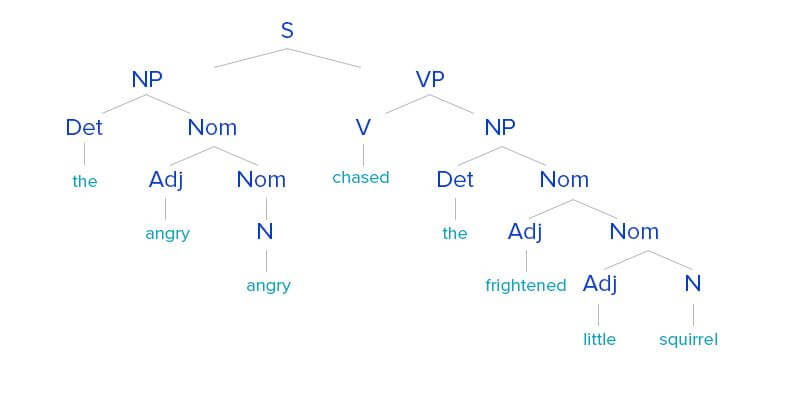

- Syntax-level ambiguity: the same sentence can be parsed in different ways. For example, in “He lifted the beetle with a red cap.”, did he use a cap to lift a beetle, or did he lift a beetle that had a red cap?

- Referential ambiguity: arises when something is referred to using pronouns. For example, in “Rima went to Gauri. She said, ‘I am tired.’”, who exactly is tired?
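The syntax-level case can be made concrete with a toy chart parser. Below is a minimal CKY-style parse counter in Python; the grammar and lexicon are hypothetical, written in Chomsky normal form just for this one sentence, and are not taken from any of the tools mentioned in this article. It finds two parse trees for the beetle sentence, one per reading, and a single tree once the prepositional phrase is removed.

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky normal form: (parent, left child, right child)
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # PP attaches to the verb: "lifted ... with a red cap"
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),  # PP attaches to the noun: "the beetle with a red cap"
    ("PP", "P", "NP"),
    ("N", "Adj", "N"),
]
# Hypothetical lexicon: word -> possible preterminal labels
LEXICON = {
    "he": ["NP"], "lifted": ["V"], "the": ["Det"], "a": ["Det"],
    "beetle": ["N"], "cap": ["N"], "with": ["P"], "red": ["Adj"],
}


def count_parses(words, start="S"):
    """Count the distinct parse trees of `words` rooted in `start` (CKY chart)."""
    n = len(words)
    # chart[i][j][label] = number of subtrees with this label spanning words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for label in LEXICON[word]:
            chart[i][i + 1][label] += 1
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # split point between the two children
                for parent, left, right in BINARY_RULES:
                    chart[i][j][parent] += chart[i][k][left] * chart[k][j][right]
    return chart[0][n][start]


print(count_parses("he lifted the beetle with a red cap".split()))  # 2
print(count_parses("he lifted the beetle".split()))                 # 1
```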

The general idea here is:

One input can have different meanings. Many inputs can mean the same thing.

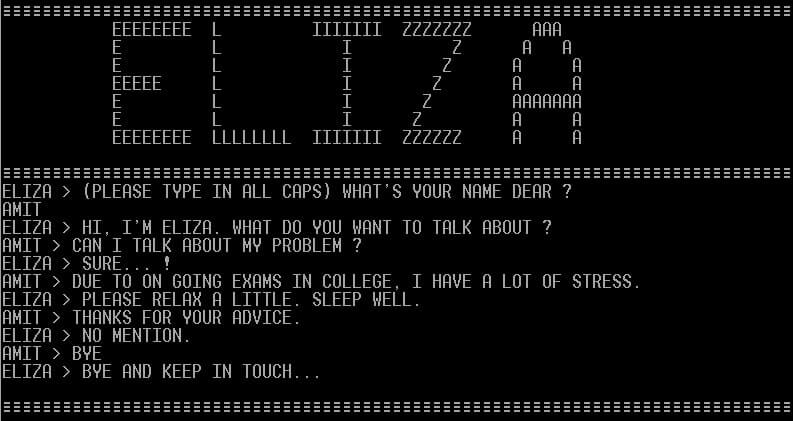

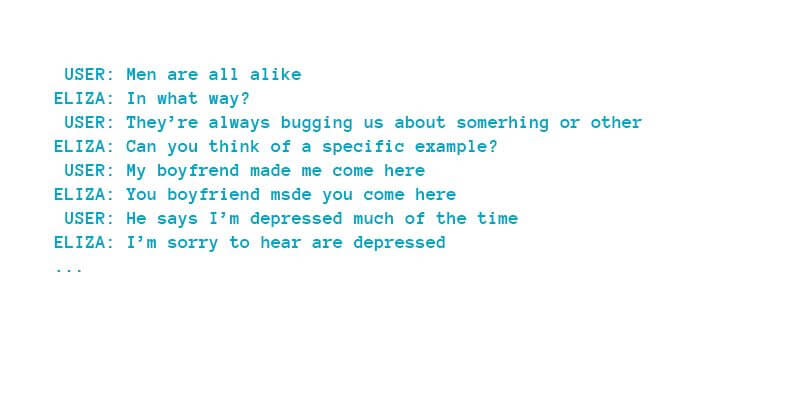

NLU approaches, like NLP, have a long history. One of the earliest known NLU engines is ELIZA, written between 1964 and 1966 using a FORTRAN extension. Its “semantic” analysis was based on transforming keywords into canned patterns that gave users an illusion of understanding:

Of course, this approach was not enough to pass the Turing test: it takes only a few minutes to realise that such a dialogue has very little in common with human communication.
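To illustrate the kind of keyword matching ELIZA relied on, here is a minimal Python sketch in its spirit. The rules and the pronoun-reflection table are made up for illustration; they are not Weizenbaum's original script. Note how quickly the illusion breaks: anything outside the keywords falls through to a generic reply.

```python
import re

# Made-up (keyword pattern, response template) rules in the spirit of ELIZA's script
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your family."),
]
DEFAULT_RESPONSE = "Please go on."

# Swap first and second person so the echoed fragment reads naturally
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment):
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance):
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Templates without placeholders simply ignore the captured groups
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT_RESPONSE


print(respond("I am tired of my job."))  # How long have you been tired of your job?
print(respond("The weather is nice."))   # Please go on.
```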

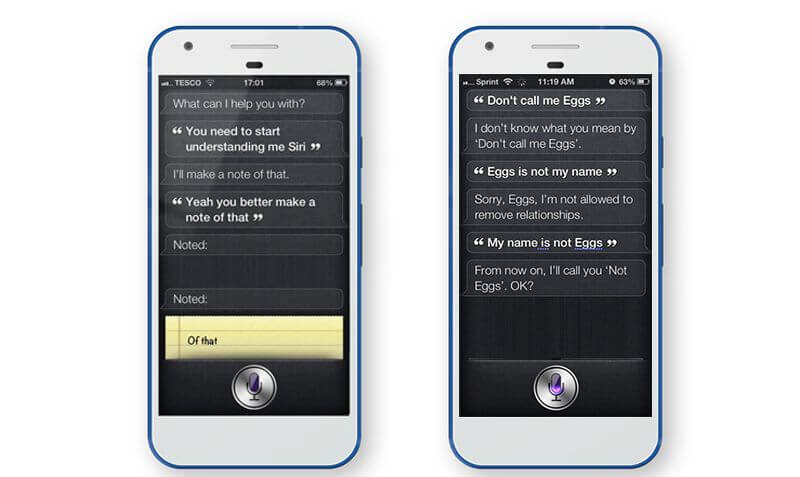

Even though developers now work hard to build smart AI systems that provide faultless digital interaction between businesses and customers, many modern NLU engines, such as Siri (2011), Alexa (2014), Google Assistant (2016), and other ‘AI’-based chatbots, still fail when chatting with real users. There are countless examples online. Here’s a funny one:

Fig. 2. Conversations with an AI-based chatbot.

In most cases, the reason for all these blunders is the same: the developers failed to create proper dictionaries for the bot to use. Below, you will find techniques that will help you get this right from the start.

Building a meaningful dialogue with a machine: where to start

First of all, you need a clear understanding of the purpose the engine will serve. This will allow you to create proper dictionaries. We suggest starting with a descriptive analysis to find out how often particular parts of speech occur. You can also use ready-made libraries such as WordNet, BLLIP parser, nlpnet, spaCy, NLTK, fastText, Stanford CoreNLP, semaphore, practnlptools, and SyntaxNet.
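A descriptive analysis of this kind can start as simple frequency counting. The Python sketch below assumes the corpus has already been tagged (in practice by a tagger such as spaCy or NLTK); the three tagged sentences are made up for illustration and use Universal POS tags. It reports the share of each part of speech and the most frequent words for a given tag, which is a natural starting point for a domain dictionary.

```python
from collections import Counter

# Hand-tagged toy sentences (Universal POS tags). In a real project the tags
# would come from a tagger such as spaCy or NLTK run over your own corpus.
TAGGED_CORPUS = [
    [("Book", "VERB"), ("a", "DET"), ("table", "NOUN"), ("for", "ADP"), ("two", "NUM")],
    [("Cancel", "VERB"), ("my", "PRON"), ("booking", "NOUN")],
    [("Is", "AUX"), ("the", "DET"), ("table", "NOUN"), ("free", "ADJ")],
]


def pos_distribution(corpus):
    """Relative frequency of each part-of-speech tag, most frequent first."""
    counts = Counter(tag for sentence in corpus for _, tag in sentence)
    total = sum(counts.values())
    return {tag: n / total for tag, n in counts.most_common()}


def top_words(corpus, tag, k=3):
    """Most frequent (lower-cased) words carrying a given tag."""
    words = Counter(word.lower() for sentence in corpus for word, t in sentence if t == tag)
    return words.most_common(k)


print(pos_distribution(TAGGED_CORPUS)["NOUN"])  # 0.25
print(top_words(TAGGED_CORPUS, "NOUN"))         # [('table', 2), ('booking', 1)]
```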

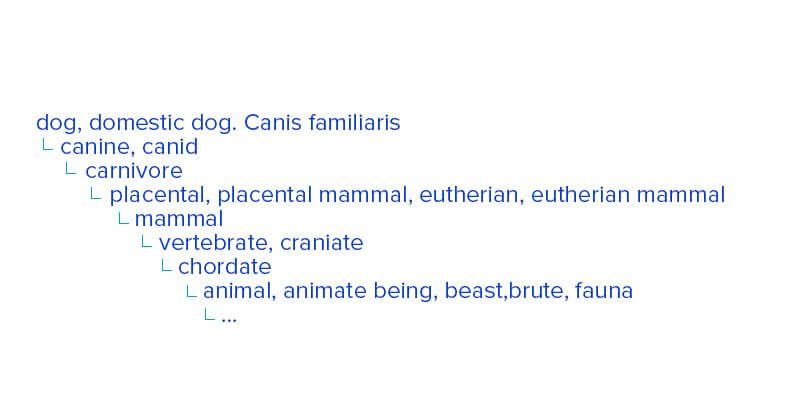

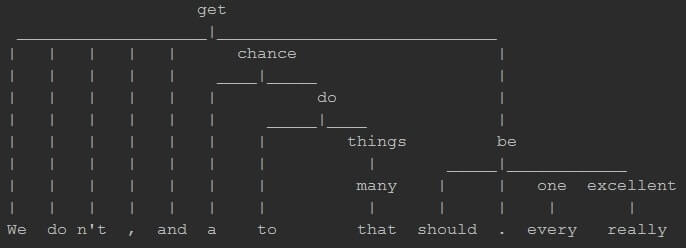

Examples of chunking/dependency parsing, hyponyms, and word interpretations.

These libraries allow you to perform lexical and syntactic analysis. For example, you can define the relationships between parts of a sentence:

“We don't get a chance to do that many things, and everyone should be really excellent.”

or extract subject-verb-object (SVO) triples:

(u'we', u'!get', u'chance'),(u'chance', u'do', u'things')
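Triples like the ones above can be read off a dependency parse. As a hedged sketch, the function below walks a parse given as (text, label, head index) tuples and collects subject-verb-object triples, marking negated verbs with "!" as in the output above. The parse of "We don't get a chance" is hand-written with spaCy-style labels (nsubj, dobj, neg); in practice it would come from a parser such as spaCy or Stanford CoreNLP, whose label sets may differ between versions.

```python
def extract_svo(parse):
    """Collect (subject, verb, object) triples from a dependency parse given as
    (text, dependency label, head index) tuples; negated verbs get a '!' prefix."""
    triples = []
    for i, (verb, _, _) in enumerate(parse):
        # Direct dependents of token i (the root points to itself, so skip j == i)
        children = [(text, dep) for j, (text, dep, head) in enumerate(parse) if head == i and j != i]
        subjects = [t for t, d in children if d in ("nsubj", "nsubjpass")]
        objects = [t for t, d in children if d in ("dobj", "obj")]
        prefix = "!" if any(d == "neg" for _, d in children) else ""
        for subj in subjects:
            for obj in objects:
                triples.append((subj.lower(), prefix + verb.lower(), obj.lower()))
    return triples


# Hand-written parse of "We don't get a chance" (hypothetical parser output)
PARSE = [
    ("We", "nsubj", 3),
    ("do", "aux", 3),
    ("n't", "neg", 3),
    ("get", "ROOT", 3),
    ("a", "det", 5),
    ("chance", "dobj", 3),
]
print(extract_svo(PARSE))  # [('we', '!get', 'chance')]
```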

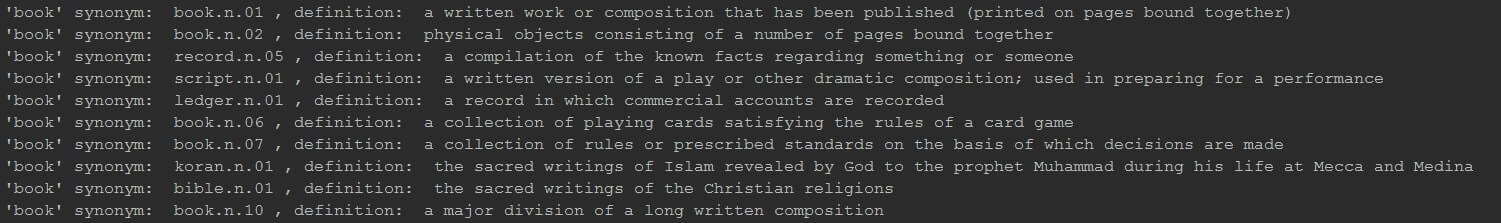

as well as synonyms and part-of-speech explanations:

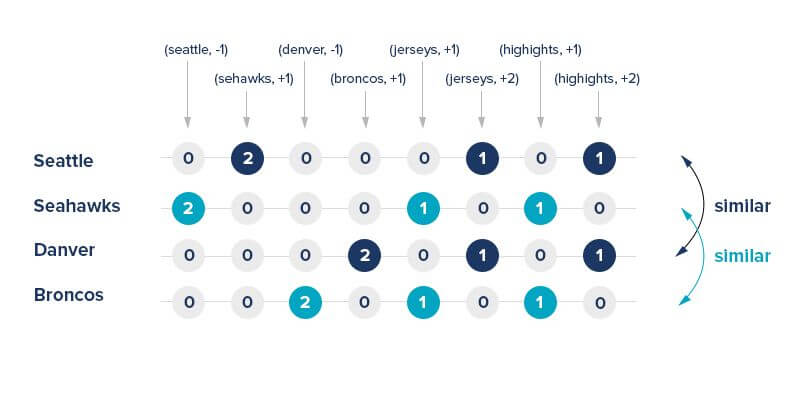

You can also apply the Vector Space Model to capture synonymy and other lexical relationships between words. This model is commonly used in Information Retrieval (IR) and NLP.
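A minimal illustration of the vector space idea: represent each word by counts of the words around it (a term-context matrix) and compare words with cosine similarity. The Python sketch below uses a made-up three-sentence corpus and a window of two words; words used in similar contexts ("cat" and "dog") end up closer than words that share less context ("cat" and "car").

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Represent each word by counts of the words within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, target in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    vectors[target][tokens[j]] += 1
    return vectors


def cosine(u, v):
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


corpus = ["the cat drinks milk", "the dog drinks water", "the car needs fuel"]
vectors = cooccurrence_vectors(corpus)
print(round(cosine(vectors["cat"], vectors["dog"]), 3))  # 0.667
print(round(cosine(vectors["cat"], vectors["car"]), 3))  # 0.333
```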

Network-based language models are another basic approach to learning word representations. Below, you can find a comparative analysis of common network-based models and some advice on how to work with them.

What you need to know about language models

Let’s start with the word2vec model introduced by Tomas Mikolov and colleagues. It is an embedding model that learns word vectors via a neural network with a single hidden layer. Continuous bag of words (CBOW) and skip-gram are the two architectures of word2vec: CBOW predicts a word from its surrounding context, while skip-gram predicts the context from the word. This approach is rather basic, though. Today, there are a number of other solutions that provide pre-trained vectors or allow you to obtain them through further training.
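To show what the two word2vec architectures learn from, the Python sketch below generates their training examples from a sentence: (target, context) pairs for skip-gram and (context words, target) examples for CBOW. It deliberately leaves out the neural network itself and refinements such as negative sampling, subsampling of frequent words, and dynamic window sizes; in practice you would use an existing implementation such as Gensim.

```python
def skipgram_pairs(tokens, window=2):
    """(target, context) pairs: skip-gram predicts each context word from the target."""
    pairs = []
    for i, target in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """(context words, target) examples: CBOW predicts the target from its context."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


tokens = "the quick brown fox jumps".split()
print(skipgram_pairs(tokens, window=1)[:4])
# [('the', 'quick'), ('quick', 'the'), ('quick', 'brown'), ('brown', 'quick')]
print(cbow_examples(tokens, window=1)[2])
# (['quick', 'fox'], 'brown')
```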

One of them is Global Vectors (GloVe), an unsupervised learning algorithm for obtaining vector representations of words. Both word2vec and GloVe learn geometric encodings (vectors) of words from their co-occurrence information, i.e. how frequently words appear together in a large text corpus. The difference is that word2vec is a "predictive" model, whereas GloVe is a "count-based" model.

There is also the fastText library. Unlike word2vec, which treats every word as the smallest unit whose vector representation needs to be found, fastText assumes that a word is formed by character n-grams. This representation is fastText's main advantage over word2vec and GloVe: it makes it possible to find vector representations for rare or out-of-vocabulary words. Since rare words can still be broken into character n-grams, they share these n-grams with common words.
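The character n-gram idea fits in a few lines of Python. Following the fastText paper, each word is wrapped in the boundary symbols '<' and '>' and split into n-grams of length 3 to 6 (fastText's default range), with the whole wrapped word kept as an extra feature. The word pair below is only an illustration: a rarer word shares n-grams such as "cardio" with a related word, which is what lets fastText build a vector for it.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """Subword features in the style of fastText: the word is wrapped in '<' and '>'
    and split into character n-grams; the whole wrapped word is kept as well."""
    wrapped = f"<{word}>"
    grams = {wrapped}
    for n in range(min_n, max_n + 1):
        for i in range(len(wrapped) - n + 1):
            grams.add(wrapped[i:i + n])
    return grams


print(sorted(char_ngrams("where", 3, 3)))
# ['<wh', '<where>', 'ere', 'her', 're>', 'whe']

shared = char_ngrams("cardiomyopathy") & char_ngrams("cardiology")
print("cardio" in shared)  # True
```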

For example, for a model trained on a news dataset, medical vocabulary may consist largely of rare words. In addition, in its supervised mode, fastText extends the basic word embedding idea by predicting a label (such as a topic) instead of the middle/missing word (the original word2vec task). Sentence vectors can be computed easily, and fastText works better on small datasets than Gensim.
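A common baseline for a sentence vector is the average of its word vectors. The Python sketch below shows only that arithmetic, with hypothetical 2-dimensional vectors; unknown words are simply skipped here, whereas fastText can also build vectors for unknown words from their character n-grams, and its own implementation differs in details.

```python
def sentence_vector(tokens, word_vectors):
    """Average the vectors of the tokens that have one; unknown tokens are skipped."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(vec[d] for vec in known) / len(known) for d in range(dim)]


# Hypothetical 2-dimensional word vectors, just to show the arithmetic
word_vectors = {"cheap": [1.0, 0.0], "flights": [0.0, 1.0], "to": [0.5, 0.5]}
print(sentence_vector("cheap flights to rome".split(), word_vectors))  # [0.5, 0.5]
```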

Comparing our own model with Gensim and GloVe

So, we compared Gensim, GloVe, and our own variant of a distributional semantic model built from scratch. We used similar or comparable parameters, such as dictionary size, minimum word count, and alpha/learning rate, and a similar text corpus: Jack London’s ‘Martin Eden’, 140K words (over 12K unique) in 13K sentences.

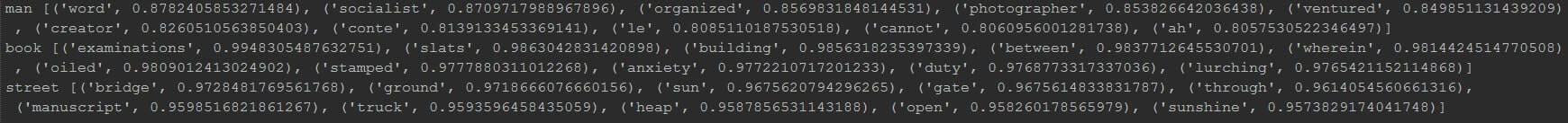

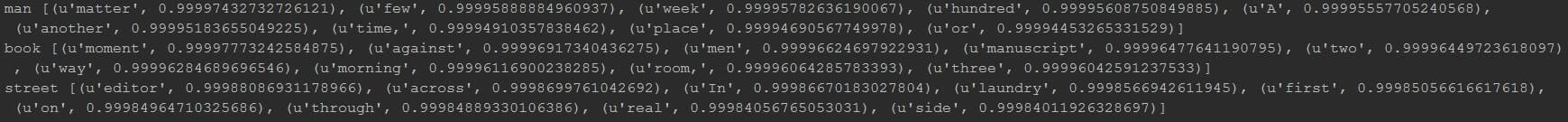

Then we used each model to find the words most similar to ‘man’, ‘book’ and ‘street’. Below, you can see the results.

Gensim Word2vec:

GloVe Word2vec:

Semantic distribution*:

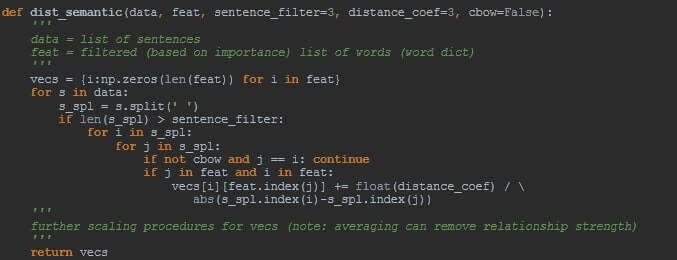

The last model is similar to a classic distributional semantic model; the difference is that context words are weighted by their distance from the target word. We implemented this approach in Python 2.7.

In this approach, the importance of words can be defined using common frequency-analysis techniques (such as tf-idf, LDA, or LSA), SVO analysis, or other methods. You can also include n-grams or skip-grams pre-defined in ‘feat’, and adjust the sentence splitting and the distance coefficient. An ensemble of distance metrics can also be used.
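The distance-weighting idea can be sketched as follows. This is a minimal Python 3 sketch under our own assumptions, not the implementation used in the comparison: each context word within the window contributes 1/distance, optionally multiplied by a hypothetical per-word importance score (for example, idf), and similar words are ranked by cosine similarity. The three-sentence corpus is made up.

```python
import math
from collections import Counter, defaultdict


def weighted_cooccurrence(sentences, window=4, importance=None):
    """Context vectors where each neighbour adds importance / distance.
    `importance` is a hypothetical per-word score (e.g. idf); default 1.0."""
    importance = importance or {}
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, target in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    context = tokens[j]
                    vectors[target][context] += importance.get(context, 1.0) / abs(i - j)
    return vectors


def cosine(u, v):
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def most_similar(word, vectors, topn=3):
    target = vectors.get(word, Counter())  # .get avoids inserting into the defaultdict
    scores = [(other, cosine(target, vec)) for other, vec in vectors.items() if other != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


corpus = [
    "the man walked down the street",
    "the woman walked down the road",
    "he read a book",
]
vectors = weighted_cooccurrence(corpus)
print(most_similar("man", vectors, topn=1)[0][0])  # woman
```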

As you can see, it is better to validate different approaches for casting text to a numeric format (vector space model) instead of simply using pre-trained vectors from different libraries. Each of the existing approaches has its drawbacks (e.g. the computation time of distributional semantics), so it is better to consider several options before choosing the one that best fits the situation.

Some more tools to facilitate text processing

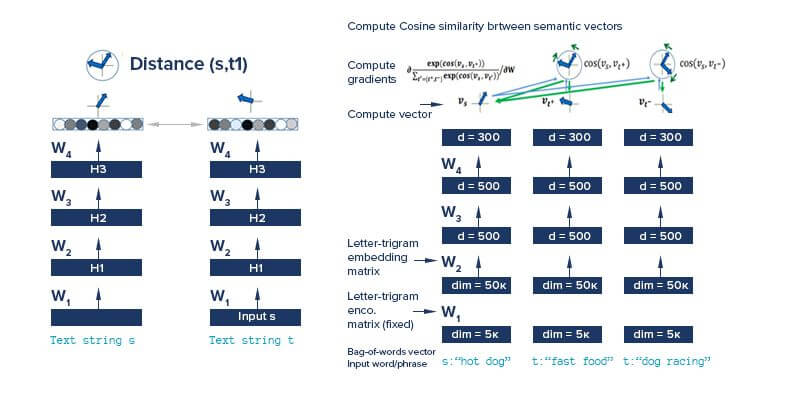

To perform multiple NLU tasks, we can use deep learning estimators with defined vector representations/embeddings. One of them is the Deep Semantic Similarity Model (DSSM):

This is a deep neural network that represents text strings as semantic vectors. The similarity between two texts is measured by a distance metric (here, cosine similarity) between their vectors, and during training these similarities are passed through a softmax to propagate relevance. As a result, the trained model can represent questions, paragraphs, and documents in the same semantic space.
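The scoring step can be sketched in plain Python. In the original DSSM formulation, the posterior probability of a document given a query is a softmax over the cosine similarities between their semantic vectors, scaled by a smoothing factor gamma. The vectors below are hypothetical stand-ins for the outputs of a trained network, and gamma = 10 is an assumed value; the network layers themselves are not shown.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def dssm_posterior(query_vec, doc_vecs, gamma=10.0):
    """P(document | query): softmax over cosine similarities scaled by gamma."""
    scores = [gamma * cosine(query_vec, d) for d in doc_vecs]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical semantic vectors standing in for the outputs of a trained DSSM
query = [0.9, 0.1, 0.0]
documents = [[0.8, 0.2, 0.1], [0.0, 0.3, 0.9], [0.1, 0.9, 0.2]]
probs = dssm_posterior(query, documents)
print(max(range(len(documents)), key=probs.__getitem__))  # 0
```

During training, the network weights are adjusted to maximise this probability for the relevant (e.g. clicked) document.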

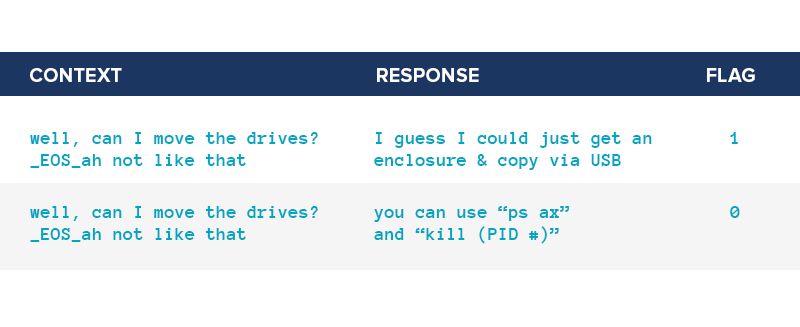

This model has broad applications: it can answer questions formulated in different ways, perform web search, etc. Various datasets can be used to train such models (dialogue agents). The most commonly used are the Ubuntu Dialogue Corpus (about 1M dialogues) and the Twitter Triple corpus (29M dialogues). Below, you can find a typical example.

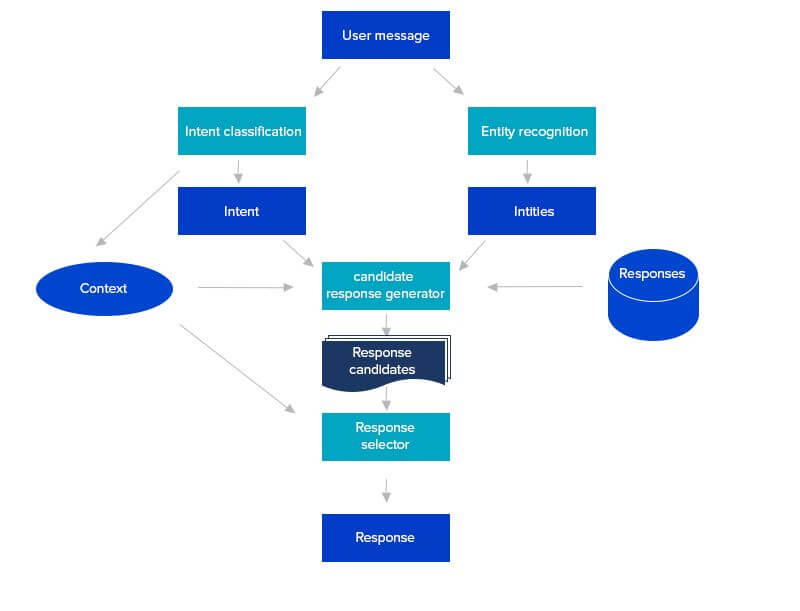

Many tools combine specific text-processing steps (SVO, NER, and level extraction) with pre-defined word embeddings. They have no real cognitive skills; they simply combine different well-known methods. The following diagram shows the NLU process flow inside such pattern-based heuristic engines:

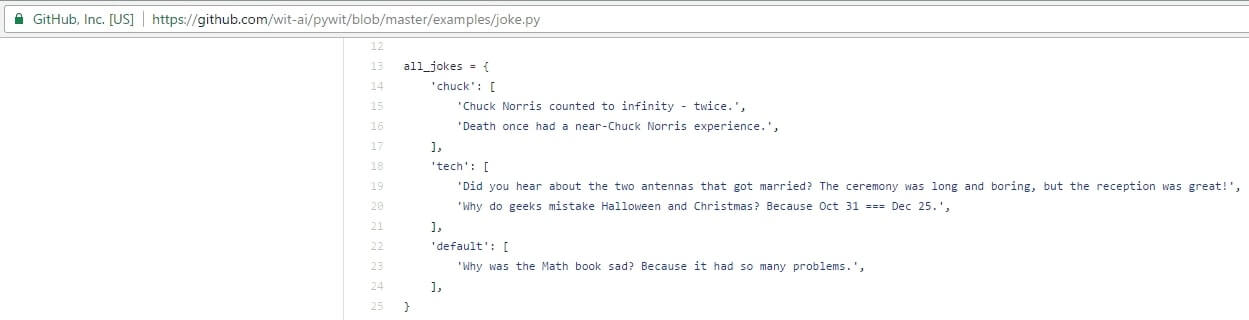

However, such pattern-based engines usually need an extensive amount of information, even for simple examples:

As you can see, the user needs to label entities with NER types such as PERSON. The other image shows how a user can generate an answer (a random choice within the topic) when asked to tell a joke:
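A pattern-based engine of this kind can be sketched with regular expressions. In the Python sketch below, every intent, pattern, answer, and PERSON name is hypothetical and written by hand, which is exactly the labelling burden described above. Several patterns map to the same intent (many inputs, one meaning), and the joke intent answers with a random choice within the topic.

```python
import random
import re

# Hypothetical hand-written intents: several patterns can express the same
# intent, and each intent has canned answers.
INTENTS = {
    "greet": {
        "patterns": [r"\b(hi|hello|hey)\b"],
        "answers": ["Hello! How can I help you?"],
    },
    "joke": {
        "patterns": [r"\btell (me )?a joke\b", r"\bsay something funny\b"],
        "answers": [
            "I would tell you a UDP joke, but you might not get it.",
            "There are 10 kinds of people: those who understand binary and those who don't.",
        ],
    },
}
# Entities have to be labelled by hand as well (hypothetical PERSON list)
PERSON_NAMES = {"alice", "bob"}


def match_intent(text):
    for intent, spec in INTENTS.items():
        if any(re.search(pattern, text, re.IGNORECASE) for pattern in spec["patterns"]):
            return intent
    return None


def extract_persons(text):
    return [word for word in re.findall(r"[a-z]+", text.lower()) if word in PERSON_NAMES]


def answer(text, rng=random):
    intent = match_intent(text)
    if intent is None:
        return "Sorry, I did not get that."
    return rng.choice(INTENTS[intent]["answers"])  # random choice within the topic


print(match_intent("Could you tell me a joke?"))  # joke
print(extract_persons("Say hi to Alice"))         # ['alice']
```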

Such tools can facilitate the development of NLU engines. However, to reflect the requirements of a specific business or domain, the analyst will have to develop their own rules.

In most cases, the training dataset is structured and labeled, so we use a known ontology and known entities for information retrieval. We may also create complex data structures or objects with annotations (standardised intents).
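One way to hold such annotations is a small typed structure per training example. The Python dataclasses below are a hypothetical schema, not the format of any particular tool: each example stores the utterance, a standardised intent name, and entity spans as character offsets with a label.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Entity:
    start: int  # character offset where the entity starts (inclusive)
    end: int    # character offset where the entity ends (exclusive)
    label: str  # entity type, e.g. "PERSON" or "CITY"

    def text_in(self, utterance: str) -> str:
        return utterance[self.start:self.end]


@dataclass
class AnnotatedExample:
    text: str
    intent: str  # standardised intent name
    entities: List[Entity] = field(default_factory=list)


example = AnnotatedExample(
    text="Book a flight to Paris for Anna",
    intent="book_flight",
    entities=[Entity(17, 22, "CITY"), Entity(27, 31, "PERSON")],
)
print([(e.label, e.text_in(example.text)) for e in example.entities])
# [('CITY', 'Paris'), ('PERSON', 'Anna')]
```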

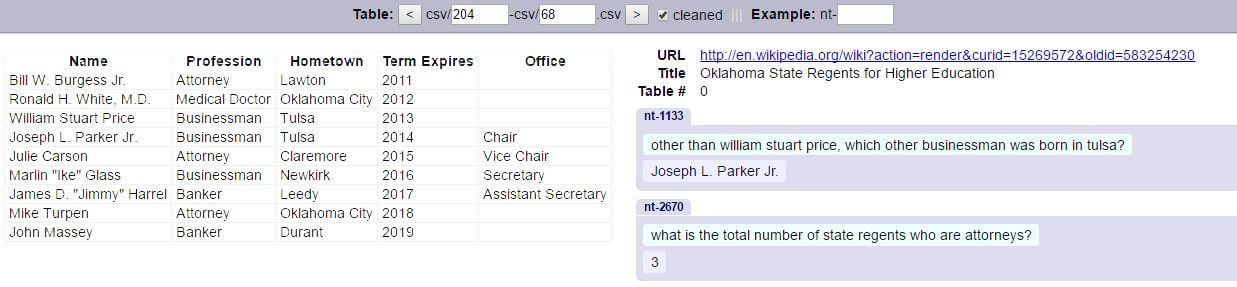

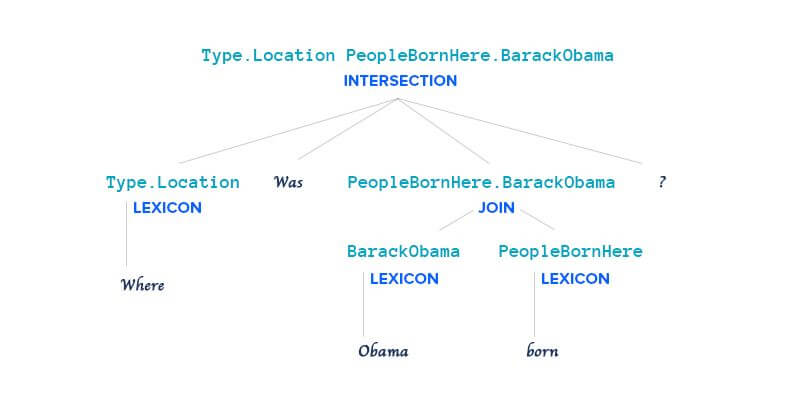

Another toolkit worth mentioning is SEMPRE, which stands for Semantic Parsing with Execution. SEMPRE allows you to categorise entities and group them into different intents (classes) based on existing structured or semi-structured data (https://nlp.stanford.edu/software/sempre/wikitable/):

On the right side, you can see examples of queries and responses that you can use to add ML approaches alongside annotation-based ones. Below is an example of what an ontology can look like when annotated and extracted from a description or a table.

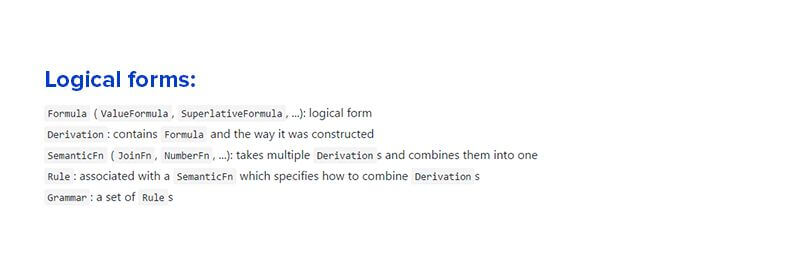

Also, SEMPRE provides different logical forms to evaluate the response:

For example, the city with the largest land area can be found as:
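To make the idea of executing a logical form concrete, here is a toy Python evaluator loosely modelled on the argmax operator of lambda DCS, the logical language SEMPRE works with. It is not SEMPRE's syntax or API; the operator names are hypothetical and the table contents are placeholders rather than real data.

```python
# Placeholder table: names and numbers are illustrative, not real data
TABLE = [
    {"type": "city", "name": "City A", "land_area": 120.0},
    {"type": "city", "name": "City B", "land_area": 310.5},
    {"type": "river", "name": "River C", "land_area": 0.0},
]


def execute(form, rows):
    """Evaluate a nested-tuple logical form against a list of table rows."""
    op = form[0]
    if op == "type":    # ("type", t): all rows of type t
        return [r for r in rows if r["type"] == form[1]]
    if op == "argmax":  # ("argmax", subform, attribute): row with the largest attribute
        candidates = execute(form[1], rows)
        return [max(candidates, key=lambda r: r[form[2]])] if candidates else []
    if op == "name":    # ("name", subform): project the name column
        return [r["name"] for r in execute(form[1], rows)]
    raise ValueError(f"unknown operator: {op}")


# "The city with the largest land area"
FORM = ("name", ("argmax", ("type", "city"), "land_area"))
print(execute(FORM, TABLE))  # ['City B']
```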

As you can see, efficient text processing can be achieved even without complex ML techniques.

Your key takeaways for successful NLP engine development

From our experience, the most efficient way to start developing NLP engines is as follows:

- Perform a descriptive analysis of the existing corpora, and consider adding external information relevant to the domain. This can reveal possible intents (classes, categories, domain keywords, groups) and their variants/members (entities).
- Build the NER engine and calculate embeddings for the extracted entities according to the domain.
- Find or build an engine for dependency parsing (finding the relationships between the extracted entities).
- As the last preprocessing step, extract the levels/values that vector representations cannot handle the same way as other words.
- Use the extracted information as input to create estimators (linear regression models as well as ensemble algorithms and deep-learning techniques) or to generate rules (when your data is not labeled).
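The value-extraction step can be sketched with regular expressions: values such as dates, amounts, and percentages are pulled out and replaced with typed placeholders before the remaining words are embedded. The placeholder scheme and the patterns below are hypothetical and cover only a few simple formats; a production system would need locale-aware parsing.

```python
import re

# Hypothetical placeholder scheme; the patterns cover only a few simple formats.
# Order matters: more specific patterns run before the generic NUMBER pattern.
VALUE_PATTERNS = [
    ("DATE", re.compile(r"\b\d{4}-\d{2}-\d{2}\b")),
    ("MONEY", re.compile(r"[$€£]\d+(?:\.\d+)?")),
    ("PERCENT", re.compile(r"\b\d+(?:\.\d+)?%")),
    ("NUMBER", re.compile(r"\b\d+(?:\.\d+)?\b")),
]


def extract_values(text):
    """Replace values with typed placeholders and return them separately."""
    values = []
    for label, pattern in VALUE_PATTERNS:
        values.extend((label, match.group()) for match in pattern.finditer(text))
        text = pattern.sub(f"<{label}>", text)
    return text, values


normalised, values = extract_values("Transfer $250 on 2024-05-01 if the rate drops 3%")
print(normalised)  # Transfer <MONEY> on <DATE> if the rate drops <PERCENT>
print(values)      # [('DATE', '2024-05-01'), ('MONEY', '$250'), ('PERCENT', '3%')]
```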

We hope that the methods described in this post will help NLP professionals organise their knowledge better and foster further research in AI. Businesses can use this article to understand the challenges that accompany AI adoption.

While chatbots can help you bring customer service to the next level, make sure you have a team of specialists to launch and deliver your AI project smoothly.

The ELEKS’ team can provide you with in-depth consulting and hands-on expertise in the field of Data Science, Machine Learning and Artificial Intelligence. Get in touch with us!
