Every experienced developer knows how important testing is. Software testing and quality assurance become even more crucial as a project moves from a monolith to a microservice architecture with multiple databases, services, and communication channels.

In this article, Yehor Khilchenko, Senior Software Developer at ELEKS, shares how integration tests boosted confidence in our codebase and reduced debugging time on staging and production.

What is integration testing?

Integration testing is an essential part of the software development lifecycle. In this type of testing, different parts of an application are tested together as a group to make sure they work correctly when combined.

For example, integration testing for an online travel booking website should ensure that its parts, such as flight and hotel booking and payment processing, work together smoothly. When a user books a flight and a hotel, the system needs to correctly retrieve flight and hotel details, calculate the total cost, and send the information to the payment gateway. If any of these steps fails, the system should mark the booking as incomplete.
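The booking example can be made concrete with a minimal TypeScript sketch. The interfaces `FlightService`, `HotelService`, and `PaymentGateway`, and the `BookingService` that combines them, are hypothetical names chosen for illustration. The in-memory implementations stand in for real services. An integration test exercises the combined flow rather than each part in isolation.

```typescript
// Hypothetical component contracts for the travel booking example.
interface FlightService {
  getFlight(id: string): Promise<{ id: string; price: number }>;
}
interface HotelService {
  getHotel(id: string): Promise<{ id: string; price: number }>;
}
interface PaymentGateway {
  charge(amount: number): Promise<boolean>;
}

type BookingStatus = "confirmed" | "incomplete";

// Combines the three components; a failure anywhere marks the booking incomplete.
class BookingService {
  private flights: FlightService;
  private hotels: HotelService;
  private payments: PaymentGateway;

  constructor(flights: FlightService, hotels: HotelService, payments: PaymentGateway) {
    this.flights = flights;
    this.hotels = hotels;
    this.payments = payments;
  }

  async book(flightId: string, hotelId: string): Promise<{ status: BookingStatus; total?: number }> {
    try {
      const flight = await this.flights.getFlight(flightId);
      const hotel = await this.hotels.getHotel(hotelId);
      const total = flight.price + hotel.price;
      const paid = await this.payments.charge(total);
      return paid ? { status: "confirmed", total } : { status: "incomplete" };
    } catch {
      // Any component throwing leaves the booking incomplete.
      return { status: "incomplete" };
    }
  }
}

// Simple in-memory implementations wired together for the test.
const flights: FlightService = {
  getFlight: async (id) => ({ id, price: 300 }),
};
const hotels: HotelService = {
  getHotel: async (id) => {
    if (id === "missing") throw new Error("hotel not found");
    return { id, price: 200 };
  },
};
const charged: number[] = [];
const payments: PaymentGateway = {
  charge: async (amount) => {
    charged.push(amount);
    return true;
  },
};

const booking = new BookingService(flights, hotels, payments);
```

In a real integration test, the in-memory implementations would be replaced by the actual services. Only third-party systems, such as the payment provider, would be mocked.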

Four types of integration testing

- Big-bang integration testing combines and tests all components at once. It is simple, but it makes isolating errors difficult.

- Top-down integration testing evaluates higher-level components first, using stubs in place of lower-level components. This allows essential business logic to be tested early.

- Bottom-up integration testing starts with lower-level components, using drivers to call them, and progressively integrates upward.

- Sandwich (hybrid) integration testing combines the two approaches, testing both ends of the system at once with stubs and drivers. It offers more flexibility but requires more complex test management.
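The difference between stubs (top-down) and drivers (bottom-up) can be shown with a small TypeScript sketch. `TaxCalculator` is a lower-level component and `CheckoutController` is a higher-level one. Both are hypothetical names used only for illustration, as is the flat 20% tax rate.

```typescript
// Lower-level component contract: computes tax for an amount.
interface TaxCalculator {
  taxFor(amount: number): number;
}

// Real lower-level implementation (hypothetical flat 20% rate, rounded to cents).
class FlatTaxCalculator implements TaxCalculator {
  taxFor(amount: number): number {
    return Math.round(amount * 0.2 * 100) / 100;
  }
}

// Higher-level component that depends on the lower-level one.
class CheckoutController {
  private tax: TaxCalculator;

  constructor(tax: TaxCalculator) {
    this.tax = tax;
  }

  total(amount: number): number {
    return amount + this.tax.taxFor(amount);
  }
}

// Top-down: test the controller first, with a stub standing in for
// a lower-level TaxCalculator that may not be ready yet.
const taxStub: TaxCalculator = { taxFor: () => 10 };
const topDownResult = new CheckoutController(taxStub).total(100);

// Bottom-up: test the real TaxCalculator first, using a small driver
// that plays the role of the not-yet-integrated higher-level caller.
function taxDriver(calc: TaxCalculator, inputs: number[]): number[] {
  return inputs.map((amount) => calc.taxFor(amount));
}
const bottomUpResults = taxDriver(new FlatTaxCalculator(), [100, 50]);
```

A sandwich approach would run both kinds of tests at the same time and meet in the middle once the real components are ready.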

You can choose one of these testing strategies based on your project's specific needs, such as team size, application complexity, and available resources.

Integration testing vs unit testing

A typical application consists of multiple units or components. When tested separately during unit testing, these elements may work well, yet errors can appear once they are combined.

That's why integration tests come after unit tests. Once you are sure that individual functions and classes work as expected, it is time to check how your code behaves when its parts work together.


ELEKS’ approach to integration testing

The main difficulty in integration testing is reproducing the environment. If the app uses databases and message brokers, these can easily be spun up in containers. But what about an API from a third-party provider: how can we make sure the app receives the expected responses to its calls? If you've never heard of MockServer, this can be a blocker.

MockServer is a mocking framework that responds to incoming requests with predefined responses. Below is an example expectation in JSON:

{
  "httpRequest": {
    "method": "GET",
    "path": "/view/cart"
  },
  "httpResponse": {
    "body": "some_response_body"
  }
}

Business logic example

To illustrate the approach, we developed a service with multiple external dependencies and business logic kept as close to production as possible.

The service, called "Purchase service", performs the following steps (source code):

- Validates whether the operation can be performed by checking related DB entities;

- Generates a unique code that the customer pays for;

- Makes an external API call to confirm that the code can be used;

- Returns a response to the user;

- To make things more interesting, saves data to Redis and sends messages to RabbitMQ only after the HTTP response has been returned.
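The steps above can be sketched in TypeScript. This is not the service's actual source code. The dependency interfaces (`PurchaseRepository`, `ConfirmationApi`, `KeyValueStore`, `EventPublisher`), the injected code generator, the 400/409 status codes, and the in-memory fakes are all hypothetical. The post-response work is returned as a separate promise so that an HTTP layer can send the response before that work finishes.

```typescript
// Hypothetical dependency contracts for a Purchase service sketch.
interface PurchaseRepository {
  canPurchase(userId: number, product: string): Promise<boolean>;
}
interface ConfirmationApi {
  // External API call (replaced by MockServer in the integration tests).
  confirm(code: string): Promise<boolean>;
}
interface KeyValueStore {
  set(key: string, value: string): Promise<void>;
}
interface EventPublisher {
  publish(message: string): Promise<void>;
}

interface PurchaseResult {
  response: { statusCode: number; code?: string };
  // Settles once the post-response work (Redis write, RabbitMQ message) is done.
  afterResponse: Promise<void>;
}

class PurchaseService {
  private repo: PurchaseRepository;
  private api: ConfirmationApi;
  private store: KeyValueStore;
  private events: EventPublisher;
  private generateCode: () => string;

  constructor(repo: PurchaseRepository, api: ConfirmationApi, store: KeyValueStore,
              events: EventPublisher, generateCode: () => string) {
    this.repo = repo;
    this.api = api;
    this.store = store;
    this.events = events;
    this.generateCode = generateCode;
  }

  async purchase(userId: number, product: string): Promise<PurchaseResult> {
    // 1. Validate against related DB entities (hypothetical 400 on failure).
    if (!(await this.repo.canPurchase(userId, product))) {
      return { response: { statusCode: 400 }, afterResponse: Promise.resolve() };
    }
    // 2. Generate a unique code that the customer pays for.
    const code = this.generateCode();
    // 3. Ask the external API to confirm the code (hypothetical 409 on rejection).
    if (!(await this.api.confirm(code))) {
      return { response: { statusCode: 409 }, afterResponse: Promise.resolve() };
    }
    // 5. Deferred to a later microtask so the caller can send the response first.
    const afterResponse = Promise.resolve()
      .then(() => Promise.all([
        this.store.set(String(userId), product),
        this.events.publish(JSON.stringify({ userId, product })),
      ]))
      .then(() => undefined);
    // 4. Response for the user.
    return { response: { statusCode: 200, code }, afterResponse };
  }
}

// In-memory fakes standing in for the database, external API, Redis and RabbitMQ.
const redis = new Map<string, string>();
const queue: string[] = [];
const service = new PurchaseService(
  { canPurchase: async (userId) => userId === 1 },
  { confirm: async (code) => code.length > 0 },
  { set: async (key, value) => { redis.set(key, value); } },
  { publish: async (message) => { queue.push(message); } },
  () => "CODE-1",
);
```

Because the Redis write and the message are deliberately delayed, a test has to wait for them instead of checking right after the response. That is exactly what makes this service a good integration testing target.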

This means the tests need a running database, Redis, RabbitMQ, and MockServer in place of the external API. Docker Compose is sufficient for this (docker-compose source code).

Setting up Docker containers

For the tests to be meaningful, they need to interact with the Docker containers and verify whether data is read from or written to the database or message brokers in the given scenarios.

This can be achieved in two ways:

- directly, by calling the Docker CLI with Node's `child_process.exec`;

- by using an existing npm library, such as `testcontainers`.

No libs

In this approach, the tests interact with Docker Compose directly, without third-party tools. First, define docker-compose.test. Apart from the added MockServer container, it shouldn't differ much from a production-like one:

mockserver:
  image: "mockserver/mockserver:5.15.0"
  container_name: "mockserver_container"
  restart: always
  ports:
    - "1080:1080"
  networks:
    - network
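Without a library, the containers can be started before the test run and removed afterwards by shelling out to the Docker CLI. Below is a minimal sketch. It assumes Docker Compose v2 (`docker compose`) and a compose file named `docker-compose.test.yml`. The file name, `composeArgs`, `globalSetup`, and `globalTeardown` are hypothetical. It uses Node's `child_process.execFile`, a close relative of `exec` that avoids going through a shell.

```typescript
import { execFile } from "node:child_process";
import { promisify } from "node:util";

const run = promisify(execFile);

// Hypothetical name of the test compose file.
const COMPOSE_FILE = "docker-compose.test.yml";

// Builds the `docker compose` arguments; kept pure so it can be checked without Docker.
export function composeArgs(file: string, action: "up" | "down"): string[] {
  if (action === "up") {
    // -d runs detached; --wait blocks until services are running/healthy (Compose v2).
    return ["compose", "-f", file, "up", "-d", "--wait"];
  }
  // -v also removes volumes so every run starts from a clean state.
  return ["compose", "-f", file, "down", "-v"];
}

// Start all containers before any test file runs.
export async function globalSetup(): Promise<void> {
  await run("docker", composeArgs(COMPOSE_FILE, "up"));
}

// Stop and remove the containers after the whole run.
export async function globalTeardown(): Promise<void> {
  await run("docker", composeArgs(COMPOSE_FILE, "down"));
}
```

With Jest, these two functions could be default-exported from the modules referenced by the `globalSetup` and `globalTeardown` configuration options. This starts the environment once per test run rather than once per test file.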

The next step is to define drivers. Each driver serves as a link between a container and the tests. Under the hood, drivers communicate with their containers over the network.

In this code, the MockserverDriver class acts as an intermediary that communicates with the MockServer instance running in a Docker container. The driver lets Jest tests interact with MockServer, simulating external API responses and managing the mock server's behaviour. Here's the MockserverDriver definition:

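import request from 'supertest';

// Minimal subset of MockServer's expectation format used in these tests
export interface Expectation {
  httpRequest: { method?: string; path?: string };
  httpResponse: { statusCode?: number; body?: string };
}

export class MockserverDriver {
  // MockServer's port as published in docker-compose.test
  protected url: string = 'http://localhost:1080';

  // Registers an expectation: matching requests get the predefined response
  public async mockResponse(expectation: Expectation): Promise<void> {
    await request(this.url).put('/mockserver/expectation').send(expectation);
  }

  // Clears all expectations and recorded requests on MockServer
  public async gracefulShutdown(): Promise<void> {
    await request(this.url).put('/mockserver/reset').send();
  }
}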

The last step is the test body. Usually, mocks or stubs are defined at the beginning of a test. Here, however, the application code is not mocked at all, so the test only prepares the state of the containers it depends on:

Explanation:

- The test defines a scenario for the "pay method".

- Instead of mocking application code, the test relies on real services running in containers.

- drivers.mockServerDriver.mockResponse simulates a response from an external service (in this case, via MockServer).

- The test configures MockServer to answer PUT requests to the /confirm endpoint with a 200 OK status.

describe('pay method', () => {
  it('should pass successfully', async () => {
    // Set up a mock response from MockServer
    await drivers.mockServerDriver.mockResponse({
      httpRequest: {
        method: 'PUT', // Simulate a PUT request
        path: '/confirm', // Target the '/confirm' endpoint
      },
      httpResponse: {
        statusCode: 200, // Respond with a 200 OK status
      },
    });
  });
});

Next, inside the same test, we send our request and check two things: that the event was published to RabbitMQ and that our data was saved to Redis:

const { body, statusCode } = await request(API_URL)
  .put('/payment')
  .send(purchaseDto);

// ...

const event = await drivers.rabbitMqDriver.waitForEvent();
const redisValue = await drivers.redisDriver.get(
  userEntity.id.toString(),
);

expect(event).toEqual(
  JSON.stringify({ userId: 1, product: 'BasicSoftware' }),
);
expect(redisValue).toEqual('BasicSoftware');

Now we can be confident that the app behaves as expected, and the same code will run in staging and production.
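Because the Redis write and the RabbitMQ message happen after the HTTP response is returned, an assertion made immediately after the request can race the application. One way to handle this inside drivers such as `waitForEvent` is a small polling helper. The `waitFor` function below and its default timeout and interval are assumptions for illustration, not part of the original drivers.

```typescript
// Polls `probe` until it yields a value other than undefined/null,
// or rejects once `timeoutMs` has elapsed.
export async function waitFor<T>(
  probe: () => Promise<T | undefined | null>,
  timeoutMs = 5000,
  intervalMs = 100,
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const value = await probe();
    if (value !== undefined && value !== null) return value;
    if (Date.now() >= deadline) {
      throw new Error(`waitFor: no value within ${timeoutMs} ms`);
    }
    // Sleep before the next attempt.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

For example, the Redis check could become `await waitFor(() => drivers.redisDriver.get(userEntity.id.toString()))`. This assumes the driver resolves to `null` while the key is still missing. A bounded timeout keeps a broken flow from hanging the whole suite.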

Testcontainers library

The second method is similar. However, if your application is complex and you would rather use a well-known solution than manually define drivers for interacting with containers, we suggest looking at the testcontainers npm library.

Here's an example of how it can look in your code:

import { Wait } from 'testcontainers';
import { DataSource } from 'typeorm';
// AbstractContainer and Containers are project-specific helpers (not shown)

export class PostgresContainer extends AbstractContainer {
  protected userName: string = 'root';
  protected password: string = 'password';
  protected dbName: string = 'database';
  protected dataSource: DataSource;

  constructor(network: string) {
    super('postgres', Containers.PostgresContainer, network);

    // Configure credentials, port binding and readiness check for Postgres
    this.withEnvironment({
      POSTGRES_USER: this.userName,
      POSTGRES_PASSWORD: this.password,
      POSTGRES_DB: this.dbName,
    })
      .withExposedPorts({ container: 5432, host: 5432 })
      .withWaitStrategy(Wait.forListeningPorts());
  }
}

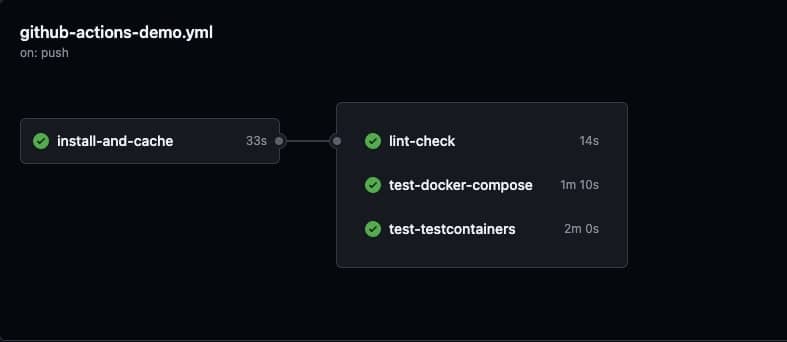

CI/CD

Integration tests can be configured as an automated step in your CI/CD pipeline. It is important to keep them there so that changes that break integrations don't get deployed. When run in GitHub Actions, these tests are fast and add minimal overhead. This makes them a cost-effective way to significantly improve the system's reliability.

Summary

The integration testing approach runs your actual application code instead of mocking it, closely mimicking production behaviour. The aim is to mock only the surrounding services and check that communication with them happens as expected. Integration testing excels at catching complex bugs and covering different scenarios. While E2E testing provides the best coverage, integration testing works well for many apps and much business logic.

This approach reduces debugging time in staging and production environments, improves confidence in microservice interactions, and detects integration issues early. Combining Docker containers and MockServer provides a robust framework for testing complex distributed systems without compromising test coverage or reliability.

FAQs

Integration testing verifies how your application’s components work as a whole in a production-like setting, as opposed to unit tests, which examine individual components separately.

One example of integration testing is testing a user registration flow. The test confirms that the registration API successfully stores user data in the database, creates the required user records in related services, and sends a confirmation email.

The four main types are big-bang integration testing (testing all components together at once), top-down integration testing (starting with higher-level components and gradually adding lower-level ones), bottom-up testing (beginning with lower-level components and progressively integrating higher-level ones), and sandwich, or hybrid, integration testing (combining the top-down and bottom-up approaches).

API testing confirms how software modules and components communicate with one another via their APIs, so it is a type of integration testing. More precisely, it is a subset: integration testing also covers other areas, such as database interactions, message queues, and third-party service integrations.

While integration testing verifies how different parts of your application work together, regression testing focuses on ensuring that new code changes haven't broken existing functionality. For example, after adding a new feature, regression tests check if all the old features still work correctly.
