Supervised learning

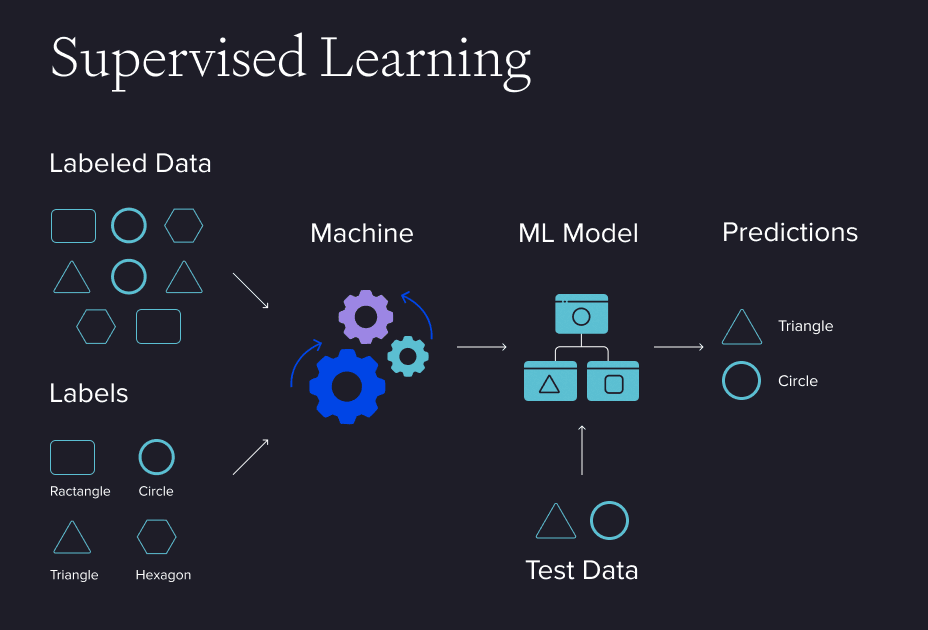

Supervised learning (Figure 1) is a type of machine learning where algorithms are trained using labelled data. The model learns to associate inputs with the correct outputs by analysing examples in the dataset. These examples consist of input-output pairs, where the input is a set of features (such as variables or attributes), and the output is the corresponding label or value. The main objective of supervised machine learning is to enable the model to generalise from these examples and make accurate predictions on new, unseen data.

Figure 1

Types of supervised learning

Classification involves using algorithms to categorise data into distinct groups. It identifies elements within a dataset and assigns them appropriate labels or categories. Classical classification algorithms include linear classifiers, support vector machines (SVM), decision trees, k-nearest neighbours, random forests, and boosting.
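As a rough illustration of one of the classifiers listed above, the sketch below implements k-nearest neighbours in plain Python. The function name `knn_predict`, the toy points, and the labels are assumptions made up for this example; a production system would normally rely on an established library instead.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points nearest to query."""
    # Sort training examples by Euclidean distance to the query point.
    by_distance = sorted(
        zip(train_points, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Toy data (made up): two features per example, two classes.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["small", "small", "small", "large", "large", "large"]

print(knn_predict(points, labels, (1.1, 1.0)))  # query close to the first group
print(knn_predict(points, labels, (5.1, 5.0)))  # query close to the second group
```

The model here is simply the stored training set: a new point receives the label that most of its nearest labelled neighbours carry.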

Neural networks, which are loosely modelled on the human brain, can solve complex tasks such as classification, regression, segmentation, localisation, and generation.

These deep learning models consist of connected layers. Each layer processes input data, applies weights, and considers a bias (or threshold) to generate an output. The layer activates and sends the information to the next layer when the output exceeds a certain threshold. The input layer processes inputs, the output layer produces predictions, and activation functions are applied between layers.
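The layer computation described above can be sketched as a weighted sum plus a bias, passed through an activation function. This is a minimal sketch: the weights, biases, and input values are arbitrary numbers chosen for illustration, and ReLU and sigmoid are just two common activation choices (ReLU passes a value on only when it exceeds zero, which mirrors the threshold idea).

```python
import math

def dense_layer(inputs, weights, biases, activation):
    """Compute activation(w . x + b) for every neuron in one fully connected layer."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def relu(z):
    # Passes the value through only when it is above zero.
    return max(0.0, z)

def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

x = [0.5, -1.0]  # input features (made up)
hidden = dense_layer(x, weights=[[1.0, 2.0], [-1.0, -0.5]], biases=[0.0, 1.0], activation=relu)
output = dense_layer(hidden, weights=[[1.0, -1.0]], biases=[0.0], activation=sigmoid)

print(hidden)  # first neuron is inactive (0.0), second one fires (1.0)
print(output)
```

Training would adjust the weights and biases so that the output moves closer to the labelled target; that step is not shown here.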

Regression predicts continuous outcomes by analysing the relationship between dependent and independent variables. Regression models are used for tasks like forecasting sales or financial planning. Classical regression techniques include linear regression and polynomial regression, as well as Support Vector Regression (SVR), decision tree regressors, random forests (RF), and boosting methods; the last four are regression counterparts of estimators that are also used for classification. Logistic regression, despite its name, predicts class probabilities and is normally used for classification rather than regression. Neural networks can also be applied to regression, just as they are to classification.
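To make the idea of fitting a relationship between variables concrete, here is a minimal sketch of ordinary least squares for a single feature. The function name `fit_line` and the data points are assumptions chosen so that the exact relationship (y = 2x + 1) is easy to check by hand.

```python
def fit_line(xs, ys):
    """Ordinary least squares with one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up data that follows y = 2x + 1 exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
prediction = slope * 5.0 + intercept  # predict the target for an unseen input

print(slope, intercept, prediction)
```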

Evaluating supervised learning models

For regression tasks, the most commonly used metrics are:

- Mean Squared Error (MSE) calculates the average of the squared differences between the predicted and actual values. The lower the MSE, the better the model accuracy.

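- Root Mean Squared Error (RMSE) is the square root of the MSE, so it expresses the typical magnitude of prediction errors in the same units as the target variable. Lower values indicate better performance. While RMSE focuses on prediction errors, the standard deviation of a metric across validation runs can be used to assess model robustness.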

- Mean Absolute Error (MAE) measures the average of the absolute differences between predicted and actual values.

- The coefficient of determination, or R-squared, measures the proportion of the variance in the target variable that is explained by the model. Higher R-squared values suggest a better model fit.
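The four regression metrics above follow directly from their definitions. The sketch below computes them in plain Python; the function name `regression_metrics` and the sample values are assumptions for illustration only.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE and R-squared for paired actual/predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # unexplained variation
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total variation
    r2 = 1.0 - ss_res / ss_tot
    return {"mse": mse, "rmse": rmse, "mae": mae, "r2": r2}

# Made-up actual and predicted values.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 9.0]

metrics = regression_metrics(y_true, y_pred)
print(metrics)  # mse 0.5, mae 0.5, r2 0.9 for these values
```

Because MSE squares the errors, a few large mistakes raise it much more than they raise MAE, which is one reason to report both.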

The most commonly used metrics for classification tasks are:

- Accuracy is the proportion of correct predictions made by the model. It is calculated by dividing the number of correct predictions by the total number of predictions.

- Precision focuses on the percentage of positive predictions that were correct.

- Recall measures the percentage of actual positive cases that the model correctly identified.

- The F1 score combines precision and recall into a single metric by calculating their harmonic mean, balancing false positives and false negatives. It is especially useful for imbalanced datasets (skewed classes), where accuracy alone can be misleading.

A confusion matrix is a table that shows the number of correct and incorrect predictions for each class. It helps to identify areas where the model may be misclassifying data.
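For a binary problem, all of the classification metrics above can be derived from the four cells of the confusion matrix. The following is a minimal sketch; the function name `binary_classification_report`, the label encoding (1 = positive), and the sample predictions are assumptions.

```python
def binary_classification_report(y_true, y_pred, positive=1):
    """Compute the confusion matrix cells and the metrics derived from them."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        # Rows are actual classes, columns are predicted classes.
        "confusion_matrix": [[tn, fp], [fn, tp]],
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Made-up labels: 3 actual positives, 5 actual negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

report = binary_classification_report(y_true, y_pred)
print(report)
```

In this example accuracy is 0.75 while precision, recall, and F1 are all 2/3, which shows how accuracy can look better than the model's handling of the positive class.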

Unsupervised learning

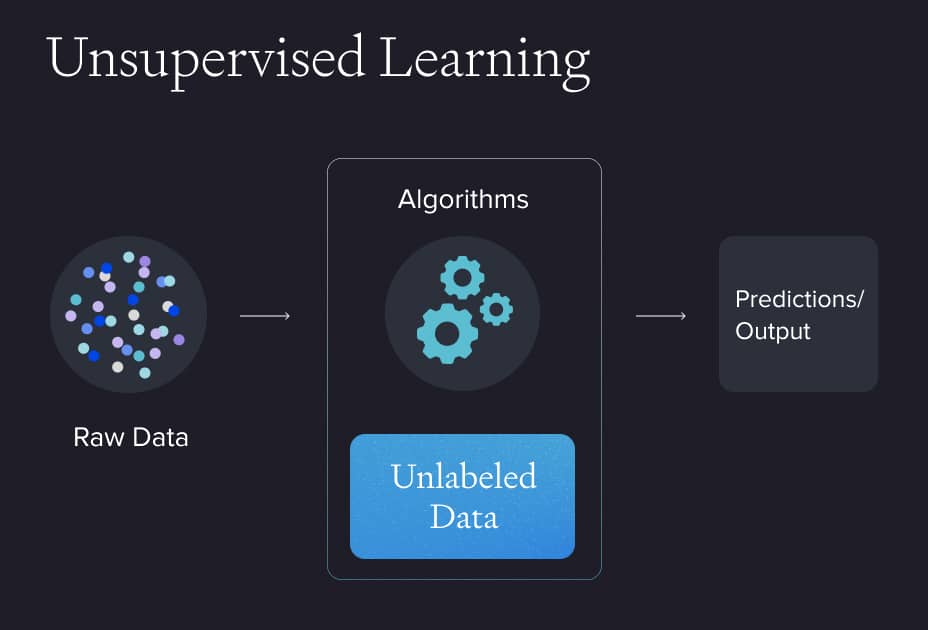

Unsupervised machine learning (Figure 2) is when the model is trained on data without labelled outcomes or target variables. In this approach, the algorithm identifies patterns, structures, or relationships within the data. The goal is to discover hidden insights, such as grouping similar data points (clustering), reducing data dimensionality (dimensionality reduction), or detecting anomalies.

Since there are no predefined labels or outcomes, unsupervised learning models learn from the intrinsic properties of the data, making it useful for exploratory data analysis and tasks where labelled data is unavailable. Common unsupervised machine learning techniques include k-means clustering, hierarchical clustering, principal component analysis (PCA), and autoencoders.

Figure 2

Types of unsupervised learning

Clustering is a methodology where unlabelled elements/objects are grouped to construct well-established clusters whose elements are classified according to similarity. This process provides valuable aid to the researcher in identifying patterns in the data. When working with large datasets, detecting such patterns may be challenging without the use of a clustering algorithm.

Association rule learning focuses on discovering interesting relationships, patterns, or associations between variables in large datasets. It is beneficial for transactional data, identifying how items or events are related.
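Associations in transactional data are commonly quantified with support (how often items appear together) and confidence (how often the rule holds when its left-hand side occurs). Neither measure is named in this article, so treat the following as a minimal sketch; the function names and the shopping baskets are made up.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= set(t))
    return hits / len(transactions)

def confidence(transactions, antecedent, consequent):
    """Estimate P(consequent | antecedent) from the transactions."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)

# Made-up shopping baskets.
baskets = [
    {"bread", "butter"},
    {"bread", "butter", "milk"},
    {"bread", "milk"},
    {"milk"},
]

print(support(baskets, {"bread", "butter"}))       # 2 of 4 baskets
print(confidence(baskets, {"bread"}, {"butter"}))  # 2 of the 3 bread baskets
```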

Dimensionality reduction is a technique that reduces the number of features or variables in a dataset while preserving its essential structure and meaningful information. It helps improve computational efficiency, reduce storage requirements, and mitigate issues like overfitting in machine learning models by simplifying the data. It is beneficial for high-dimensional data, such as images or genetic information, where visualising or analysing the data in its original form can be challenging. Standard methods include Principal Component Analysis (PCA), which transforms the data into uncorrelated components, and t-distributed Stochastic Neighbour Embedding (t-SNE), which visualises high-dimensional data in two or three dimensions.
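The core of PCA can be sketched without any libraries: centre the data, build the covariance matrix, and find its dominant direction. The sketch below uses power iteration to find only the first principal component, which is a simplification of full PCA. The function names, the fixed starting vector, and the sample rows are assumptions.

```python
import math

def first_principal_component(rows, iterations=100):
    """Return (feature means, unit vector of the direction with the largest variance)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centred = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix of the centred data.
    cov = [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)] for i in range(d)]
    # Power iteration: repeatedly multiply by the covariance matrix and renormalise.
    # The starting vector is arbitrary; it must not be orthogonal to the answer.
    v = [1.0 / (j + 1) for j in range(d)]
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v

def project(rows, means, direction):
    """Reduce each row to a single coordinate along the given direction."""
    return [sum((r[j] - means[j]) * direction[j] for j in range(len(direction))) for r in rows]

# Made-up 2-D data lying roughly along the line y = x.
rows = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8)]
means, direction = first_principal_component(rows)
reduced = project(rows, means, direction)

print(direction)  # roughly equal weight on both features
print(reduced)    # one number per row instead of two
```

Here two correlated features collapse into one coordinate with little loss of information, which is the effect dimensionality reduction aims for on a larger scale.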

An autoencoder is a type of neural network that learns to compress data into a smaller, more efficient representation and then reconstruct the original data from this compressed version. It has two main parts: the encoder, which reduces the data to a simpler form, and the decoder, which tries to rebuild the original data from that simpler form. Autoencoders are often used for tasks like reducing the size of data, removing noise, or finding unusual patterns in the data.

Evaluating unsupervised learning models

As unsupervised learning does not have predefined labels, the evaluation relies on specialised metrics and qualitative methods like visual inspection.

Elbow rule

The elbow rule is a method used to choose the number of clusters in unsupervised learning. It involves plotting the within-cluster variance (for k-means, the sum of squared distances from each point to its cluster centre) against the number of clusters and looking for the point where the decrease in variance slows down sharply. This point, the "elbow", is taken as a reasonable number of clusters.
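The elbow rule is usually applied to k-means by recording the within-cluster sum of squares (often called inertia) for several values of k. Below is a minimal sketch of k-means with that calculation; the function name `kmeans`, the random initialisation scheme, the seed, and the sample points are all assumptions, and real implementations add smarter initialisation and convergence checks.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Basic Lloyd's algorithm: returns (centroids, inertia)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    # Inertia: sum of squared distances from each point to its nearest centroid.
    inertia = sum(min(math.dist(p, c) ** 2 for c in centroids) for p in points)
    return centroids, inertia

# Made-up data with three visible groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8), (1, 8), (2, 9), (1, 9)]

inertias = {k: kmeans(points, k)[1] for k in range(1, 6)}
for k, inertia in inertias.items():
    print(k, round(inertia, 2))
```

Plotting these values against k and looking for the bend gives the elbow; because the initial centroids are random, results can differ between seeds.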

Silhouette score

The silhouette score measures how close an object (based on its features) is to its own cluster compared to other clusters, using a chosen distance metric. It ranges from -1 to 1, with higher values indicating well-separated clusters and correctly assigned data points. A silhouette score near 1 signifies clear cluster boundaries, scores close to 0 suggest overlapping clusters, and negative scores suggest that points may have been assigned to the wrong cluster.
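The silhouette score can be computed directly from pairwise distances: for each point, compare the mean distance to its own cluster (a) with the mean distance to the nearest other cluster (b). This is a minimal sketch using Euclidean distance; the function name and sample points are assumptions, and it expects at least two clusters.

```python
import math

def silhouette_score(points, labels):
    """Mean of (b - a) / max(a, b) over all points."""
    scores = []
    for i, p in enumerate(points):
        # a: mean distance to the other members of the point's own cluster.
        same = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not same:
            scores.append(0.0)  # common convention for single-member clusters
            continue
        a = sum(same) / len(same)
        # b: smallest mean distance to the members of any other cluster.
        other_means = []
        for c in set(labels):
            if c == labels[i]:
                continue
            dists = [math.dist(p, q) for q, l in zip(points, labels) if l == c]
            other_means.append(sum(dists) / len(dists))
        b = min(other_means)
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

# Made-up points forming two tight, distant pairs.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]

good = silhouette_score(points, [0, 0, 1, 1])  # sensible grouping
bad = silhouette_score(points, [0, 1, 0, 1])   # deliberately wrong grouping
print(good, bad)
```

The sensible grouping scores close to 1 and the deliberately wrong one scores below 0.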

Calinski-Harabasz index

Also known as the Variance Ratio Criterion, it compares the dispersion between clusters to the dispersion of points within clusters. The higher the Calinski-Harabasz index, the better the clustering quality.
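As a sketch of how this ratio is formed, the code below divides the between-cluster dispersion (scaled by k - 1) by the within-cluster dispersion (scaled by n - k), where k is the number of clusters and n the number of points. The function name and the sample points are assumptions.

```python
def calinski_harabasz(points, labels):
    """Variance ratio: between-cluster dispersion over within-cluster dispersion."""
    n = len(points)
    dim = len(points[0])
    clusters = sorted(set(labels))
    k = len(clusters)
    overall = [sum(p[d] for p in points) / n for d in range(dim)]

    between = 0.0
    within = 0.0
    for c in clusters:
        members = [p for p, l in zip(points, labels) if l == c]
        centroid = [sum(p[d] for p in members) / len(members) for d in range(dim)]
        # Squared distance of the cluster centre from the overall centre, weighted by size.
        between += len(members) * sum((centroid[d] - overall[d]) ** 2 for d in range(dim))
        # Squared distances of the members from their own cluster centre.
        within += sum(sum((p[d] - centroid[d]) ** 2 for d in range(dim)) for p in members)

    return (between / (k - 1)) / (within / (n - k))

# Made-up points forming two tight, distant pairs.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]

good = calinski_harabasz(points, [0, 0, 1, 1])  # sensible grouping
bad = calinski_harabasz(points, [0, 1, 0, 1])   # deliberately wrong grouping
print(good, bad)
```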

Davies-Bouldin index

This metric assesses clustering quality by evaluating the average similarity ratio between each cluster and its most similar cluster. A lower Davies-Bouldin index value signifies better clustering.
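A minimal sketch of this calculation follows. It assumes Euclidean distance and measures each cluster's scatter as the mean distance of its members to the centroid; the function name and sample points are made up.

```python
import math

def davies_bouldin(points, labels):
    """Average, over clusters, of the worst (scatter_i + scatter_j) / centroid distance."""
    clusters = sorted(set(labels))
    centroids, scatters = [], []
    for c in clusters:
        members = [p for p, l in zip(points, labels) if l == c]
        centroid = tuple(sum(coord) / len(members) for coord in zip(*members))
        centroids.append(centroid)
        # Scatter: mean distance from the members to their centroid.
        scatters.append(sum(math.dist(p, centroid) for p in members) / len(members))

    worst_ratios = []
    for i in range(len(clusters)):
        ratios = [
            (scatters[i] + scatters[j]) / math.dist(centroids[i], centroids[j])
            for j in range(len(clusters))
            if j != i
        ]
        worst_ratios.append(max(ratios))  # the most similar (hardest to separate) cluster
    return sum(worst_ratios) / len(worst_ratios)

# Made-up points forming two tight, distant pairs.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]

good = davies_bouldin(points, [0, 0, 1, 1])  # sensible grouping
bad = davies_bouldin(points, [0, 1, 0, 1])   # deliberately wrong grouping
print(good, bad)
```

Unlike the silhouette score and the Calinski-Harabasz index, lower is better here, so the sensible grouping produces the smaller value.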

Visual inspection

Since there are no labels, data scientists use methods like PCA or t-SNE to reduce complex data into 2D or 3D plots. These plots help to see how the data points group together and whether the clusters are clear and separate. This method gives a clearer understanding of the data that might not be obvious from just numbers.

Key differences between supervised and unsupervised learning

| Aspect | Supervised Learning | Unsupervised Learning |
|---|---|---|
| Data type | Uses labelled data, where each input has a corresponding output label. | Uses unlabelled data, where no explicit output labels are provided. |
| Supervision | Involves external supervision, where the algorithm learns from the labelled data. | No external supervision or labels; the model identifies patterns from the input data alone. The only supervision is during validation. |
| Objective | Aims to learn a mapping from inputs to outputs in order to accurately estimate future outputs. | Aims to discover underlying patterns, groupings, or structures in the data. |
| Use case | Used for tasks like classification (categorising data) and regression (predicting continuous values). | Used for tasks like clustering (grouping similar data), dimensionality reduction (simplifying data), or data restoration/representation (autoencoders). |
| Learning process | The algorithm learns from the relationships between the input data and known outputs. | The algorithm explores the input data and tries to find patterns or groupings based on similarities. |

The supervised machine learning process

Data preparation

- Data cleaning: Handle missing values by imputing or removing them, remove duplicates, and fix errors in the data. (Note: In some cases, data cleaning is considered part of data preprocessing.)

- Preprocessing: Scale numerical features, encode categorical variables, and prepare the data for the model.

- Data splitting: The data is divided into training, validation, and test sets to train, optimise, and evaluate the model.
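The splitting and scaling steps above can be sketched as follows. The 60/20/20 proportions, the seed, and the function names are assumptions; the one design point worth noting is that the scaling statistics are computed on the training set only and then reused for the other sets, so no information leaks from validation or test data.

```python
import random
import statistics

def train_val_test_split(rows, val_fraction=0.2, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and cut it into train, validation and test parts."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def fit_scaler(values):
    """Learn the mean and standard deviation used for standardisation."""
    return statistics.mean(values), statistics.pstdev(values)

def scale(values, mean, std):
    """Standardise values with previously learned statistics."""
    return [(v - mean) / std for v in values]

data = list(range(100))  # stand-in for 100 values of one numerical feature
train, val, test = train_val_test_split(data)

mean, std = fit_scaler(train)  # statistics come from the training set only
train_scaled = scale(train, mean, std)
val_scaled = scale(val, mean, std)
test_scaled = scale(test, mean, std)

print(len(train), len(val), len(test))
```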

Feature engineering

- Feature selection: This step identifies and retains the most relevant features that significantly contribute to the model’s predictive capabilities.

- Feature transformation: Features are transformed to enhance the relationship between the input data and the target variable, thereby improving model performance.

- Feature creation: New, predictive features are derived from existing data to provide deeper insights and improve the model’s accuracy.

Model training

- Training: During this phase, the model learns the patterns and relationships between input features and the corresponding target labels.

- Optimisation: The model’s parameters are fine-tuned using optimisation algorithms to maximise performance and minimise error.

- Cross-validation: It is used to estimate how well supervised learning models perform on unseen data by repeatedly training on one part of the data and validating on the held-out part. It is beneficial when data is scarce or when you want to reduce the risk of overfitting to a particular training set. It is used in the vast majority of cases as part of ML estimator validation.
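One common form of cross-validation is k-fold: the data is cut into k parts, and each part serves once as the validation set while the rest is used for training. The sketch below shows the mechanics; the function names, the trivial "predict the mean" model, and the data are assumptions, and in practice the data is usually shuffled before folding.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, and average the scores."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores)

def fit_mean(xs, ys):
    # Baseline "model": always predict the mean of the training targets.
    return sum(ys) / len(ys)

def mean_absolute_error(model, xs, ys):
    return sum(abs(y - model) for y in ys) / len(ys)

xs = list(range(10))
ys = [2.0 * x for x in xs]  # made-up targets
cv_score = cross_validate(xs, ys, k=5, fit=fit_mean, score=mean_absolute_error)
print(cv_score)
```

Any model can be plugged in through the `fit` and `score` arguments; the averaged score is less dependent on one lucky or unlucky split than a single validation set.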

Model selection

- Performance metrics: The model’s effectiveness is evaluated using metrics such as accuracy, precision, recall, or mean squared error, depending on whether the task is classification or regression.

- Model comparison: A variety of models—such as decision trees, support vector machines (SVMs), and neural networks—are tested to identify the best-performing model for the task at hand.

- Hyperparameter tuning: The model’s hyperparameters (e.g., learning rate, regularisation) are adjusted to optimise performance.

Model deployment

- Integration: Once trained and selected, the model is deployed in a production environment, where it can make real-time or batch predictions based on incoming data.

- Monitoring: The model's performance is tracked to check that it remains consistent, accurate, and reliable as it processes new data.

Model maintenance

- Updating: Over time, the model may require retraining with new data to maintain its accuracy and relevance.

- Refinement: If issues such as concept drift (when data patterns change over time) arise, the model may need to be refined or restructured to address these challenges.

- Retraining: As new data becomes available, the model is retrained to adapt to evolving trends and ensure its continued predictive power.

Application of supervised learning

- Image classification and object detection. A model is trained to recognise and classify images into set categories, like identifying animals in photos. In object detection, supervised learning is used to detect and locate objects within images, such as recognising pedestrians or vehicles in autonomous driving.

- Customer churn prediction and recommendation tasks. In customer churn prediction, it predicts whether a customer is likely to leave a service based on historical data. Supervised learning is also crucial for building recommendation systems that suggest products or services to customers based on past behaviours and preferences.

- Predictive modelling and regression analysis. In predictive modelling, supervised learning algorithms are trained to predict future outcomes on historical data. It can predict sales figures, stock prices, or customer behaviour. In regression analysis, supervised learning models are used to understand relationships between variables and predict continuous outcomes, such as predicting house prices based on features like size and location.

Application of unsupervised learning

- Image segmentation and anomaly detection. Unsupervised learning can be used for anomaly detection, such as finding unusual patterns or outliers in data. For instance, in fraud detection, unsupervised learning can spot unusual financial transactions that might indicate fraud. In network security, it helps identify abnormal activities, such as potential hacking attempts.

- Customer segmentation and clustering. It allows businesses to identify distinct customer groups based on purchasing behaviour, demographics, or other features. This helps in targeted marketing and personalised customer experiences. For example, retailers use unsupervised learning to group customers with similar shopping habits, enabling them to tailor promotions effectively.

- Exploratory data analysis and dimensionality reduction. In exploratory data analysis (EDA), unsupervised learning tries to identify unusual patterns and structures in data. Additionally, dimensionality reduction techniques, such as PCA, help reduce the complexity of high-dimensional data while retaining valuable information. This is especially useful for visualising data or improving the performance of other machine learning models.

Characteristics of supervised and unsupervised learning

Supervised learning

- High accuracy: Supervised learning models can make highly accurate predictions on new data. For example, image classification models can often achieve over 90% accuracy in identifying objects in images.

- Less risk of overfitting: The model is less likely to simply memorise the training data. Instead, it learns patterns that can apply to new data, making it better at handling different situations.

- Wide variety of algorithms: Supervised learning offers a wide range of well-established algorithms, such as linear regression, random forests, support vector machines (SVM), and neural networks, which provide flexibility and effectiveness for various tasks.

- Requires a large amount of labelled data: One significant drawback is that obtaining labelled data can be expensive and time-consuming. In many cases, gathering thousands of labelled examples may not be practical.

- Costly to apply to unstructured data: Unstructured data, such as text, audio, and video, is more challenging and costly to label, which makes supervised models harder to train on it.

Unsupervised learning

- Can work with unlabelled data: Unsupervised learning algorithms are particularly valuable because they do not require labelled data to find patterns or structures within it.

- Uncovers hidden insights: Techniques like clustering and association rule mining are excellent for discovering interesting groupings and patterns in large, unlabelled datasets.

- Subjective results: Without labels to compare against, the outcomes must be interpreted by a person, so conclusions depend on human judgement and intervention.

- Prone to overfitting: Without the guidance of labelled data, unsupervised models may fit too closely to spurious or irrelevant patterns in the data.

Supervised learning is ideal for making precise predictions but relies heavily on labelled data, which can be difficult and expensive to obtain. On the other hand, unsupervised learning is well-suited for discovering patterns in unlabelled data but may lack clear accuracy metrics and is more prone to subjective interpretation.

The choice between these methods depends on the specific application and data availability.

Real-life examples of supervised learning

Medical diagnosis in healthcare

Supervised learning is used to develop predictive models that help doctors diagnose diseases based on medical images or patient data. For example, algorithms are trained on labelled datasets of X-rays or MRIs. Each image is tagged with a diagnosis (e.g., cancerous or non-cancerous).

In the healthcare industry, this approach improves diagnostic accuracy, reduces human error, and speeds up diagnosis and treatment. By integrating supervised learning into healthcare software, organisations can harness advanced predictive models to support more efficient medical decision-making.

Credit scoring in finance

In the fintech industry, banks and financial institutions apply supervised learning to predict whether a person will likely repay a loan. Historical data, such as income, credit history, and employment status, labelled with default or on-time repayment outcomes, is used to train these models. Once trained, the models predict the likelihood of default for new applicants. This approach, powered by fintech solutions, helps assess creditworthiness, mitigate risk for lenders, and streamline loan approval processes.

Real-life examples of unsupervised learning

Customer segmentation in retail

Retailers analyse customer data and purchasing behaviour (e.g., frequency of purchases, types of products bought) without predefined labels. Clustering algorithms like k-means can group customers with similar buying behaviours into distinct segments, which can be targeted with personalised marketing strategies.

This approach helps businesses that use retail software understand customer preferences, create tailored marketing campaigns, and optimise inventory management based on different customer needs.

Anomaly detection in cybersecurity

Unsupervised learning is used to detect unusual activity in networks that may indicate a cybersecurity threat, such as a potential data breach or a new type of malware. Without prior knowledge of specific attacks, anomaly detection algorithms analyse network traffic or user behaviour to identify patterns that deviate from the norm, signalling a possible threat.
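A very simple way to flag values that deviate from the norm is the z-score: measure how many standard deviations each observation lies from the mean and flag the extreme ones. This is a minimal sketch, not a production detector; the function name, the threshold of 2.5, and the traffic figures are assumptions. With only a handful of samples a single outlier inflates the standard deviation, so a lower threshold than the usual 3 is used here.

```python
import statistics

def zscore_anomalies(values, threshold=2.5):
    """Return the indices of values lying more than threshold standard deviations from the mean."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]

# Made-up requests per minute, with one obvious spike.
traffic = [100, 102, 98, 101, 99, 103, 97, 100, 500, 101]

anomalies = zscore_anomalies(traffic)
print(anomalies)  # index of the spike
```

No labels are involved: the detector learns what "normal" looks like from the data itself, which is what allows it to flag previously unseen kinds of events.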

With the help of cyber security services, companies can apply unsupervised learning techniques to identify novel or unknown attacks, strengthening their security infrastructure and proactively detecting threats before they cause significant damage.

Semi-supervised learning

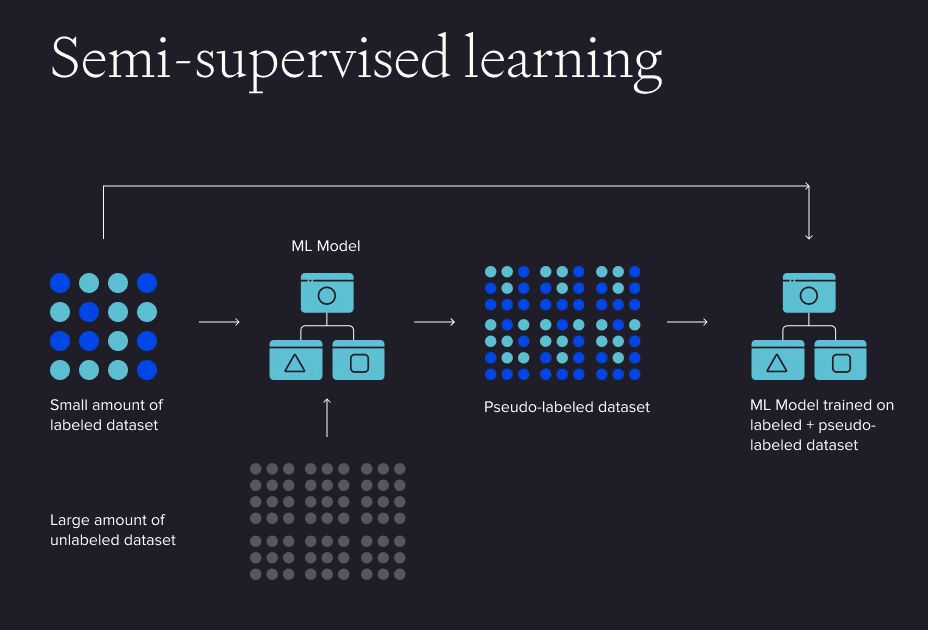

When discussing supervised and unsupervised models, it is also worth mentioning semi-supervised learning. Semi-supervised learning (Figure 3) is a type of machine learning that trains models on a small amount of labelled data combined with a large amount of unlabelled data. It merges elements of supervised learning, which uses labelled training data, and unsupervised learning, which relies on unlabelled training data.

Figure 3

How semi-supervised learning relates to supervised and unsupervised learning

Semi-supervised learning brings together the strengths of supervised and unsupervised learning. In many practical situations, having a fully labelled dataset is not possible, and relying only on unsupervised learning may not produce accurate results. By using unlabelled data, semi-supervised learning can enhance the model's performance without a large labelled dataset.

Challenges of semi-supervised learning

Although semi-supervised learning offers many advantages, it also comes with its own set of challenges:

- Choosing the right architecture and tuning parameters: Selecting the right model and fine-tuning its parameters can take a lot of time and resources. This process often involves trial and error to find the best combination.

- Data noise: Unlabelled data may contain noise or irrelevant information, which can negatively affect the model’s performance. If the model learns from such noisy data, it may take the noise as the factual labels and be less accurate on further usage.

- Consistency assumption errors: Semi-supervised learning assumes that similar data points in the feature space should have the same label. However, this assumption may not always hold true in real-world data, due to representation (embedding) errors.

- High computational demands: Training semi-supervised models requires significant computational resources, especially with large datasets and complex algorithms.

- Performance evaluation challenges: Evaluating semi-supervised learning models is not always straightforward, as the quality of the unlabelled data and how the model uses it heavily influence the results.

Future directions and emerging trends in machine learning

Machine learning (ML) is growing and impacting different industries. Technologies such as deep learning, reinforcement learning, and transfer learning have improved ML's capabilities to solve complex business problems. These advances are pushing the boundaries of what machines can do, making them more efficient and accurate in applications ranging from natural language processing to driverless cars.

The rapid growth of the ML market reflects the growing demand for these advanced solutions. The industry is expected to experience an impressive compound annual growth rate (CAGR) of 34.80% from 2025 to 2030, and the market is expected to reach $503.40 billion by 2030. This growth shows how powerful machine learning has become across industries.

Other types used in machine learning

- Reinforcement learning: A learning paradigm where agents interact with an environment to learn optimal actions by maximising rewards over time. It is widely used in robotics, gaming, and autonomous systems.

- Self-learning: A method where systems can learn patterns and improve performance without explicit supervision, relying on raw data and iterative self-correction.

- Online learning: A technique where models are updated continuously as new data becomes available, making it ideal for real-time applications like stock trading or personalized recommendations.

- Federated learning: A decentralised approach to training models across multiple devices or servers while maintaining data privacy. This is especially relevant in industries like healthcare and finance.

- Transfer learning: A method where knowledge gained from one task is reused to solve a different but related task, reducing the data and computation needed for new models.

- Metric learning: Focuses on learning distance metrics to better represent similarities or differences between data points, enabling improvements in facial recognition and recommendation systems.

Machine learning plays a significant role in shaping the future of technology, with new applications and use cases constantly emerging. The journey has just begun, and the possibilities for innovation are endless.

What is MLOps and how does it streamline the ML development process

MLOps (Machine Learning Operations) incorporates the principles of DevOps with methodologies, practices and tools from Data Science and Data Engineering to optimise the end-to-end lifecycle of machine learning models. This method minimises manual intervention, enabling rapid experimentation and faster iterations.

FAQs

The main difference between supervised and unsupervised learning lies in the presence of labelled data. Supervised learning uses labelled input and output data to train models for tasks like classification and regression, where accurate predictions are required. In contrast, unsupervised learning works with unlabelled data to uncover hidden patterns, such as in market basket analysis or dimensionality reduction.

ChatGPT is trained using a combination of self-supervised and supervised learning techniques, followed by reinforcement learning from human feedback. Self-supervised learning leverages the structure of raw text, for example by predicting the next token, so no manual labels are needed. Supervised fine-tuning then uses labelled examples of desired responses.

Clustering and market basket analysis are popular examples of unsupervised learning. These techniques group similar items or uncover associations without the need for labelled data. Another example is dimensionality reduction, which simplifies complex datasets while retaining critical information.

Regression is a supervised learning task. It predicts continuous outcomes based on labelled data and is widely used in forecasting tasks such as predicting sales or house prices.

Use unsupervised learning when you lack labelled data or want to explore hidden structures in your dataset. Examples include clustering, market basket analysis, and dimensionality reduction. It’s also ideal for pre-processing steps or for exploratory analysis to guide downstream supervised learning tasks.

Common unsupervised learning techniques include clustering (e.g., K-means, hierarchical clustering), dimensionality reduction (e.g., PCA, t-SNE), and association rule learning (e.g., market basket analysis). These techniques help uncover patterns and structures in unlabelled data.

Common supervised learning algorithms include decision trees, support vector machines (SVM), k-nearest neighbours (KNN), and linear regression. These algorithms rely on labeled training data to learn the relationship between input features and the correct output labels, allowing them to make accurate predictions or classifications on new, unseen data. These machine learning algorithms are foundational for tasks such as classification and regression.
