Microsoft is celebrating its 50th anniversary by launching Agent Mode with MCP support for all VS Code users worldwide. Our experts at ELEKS have worked with the tool for some time and tested its capabilities across multiple projects.

As it becomes available to the broader development community, we've asked Ostap Elyashevskyy, ELEKS' Test Automation Competence Manager, to share his insights.

How effective has Copilot been at catching bugs or improving your code?

GitHub Copilot’s code review agent is moderately effective at catching bugs and suggesting improvements, especially when used alongside traditional review processes. It is helpful for identifying common anti-patterns and unused variables, and for suggesting more readable alternatives to existing code.

Strengths of GitHub Copilot review

1. Syntax check. It can suggest more readable constructions and check for typos in the names of variables, functions, classes, and other entities.

2. Logic suggestions. The review agent can detect and flag missing checks and inconsistent logic, especially in simple functions.

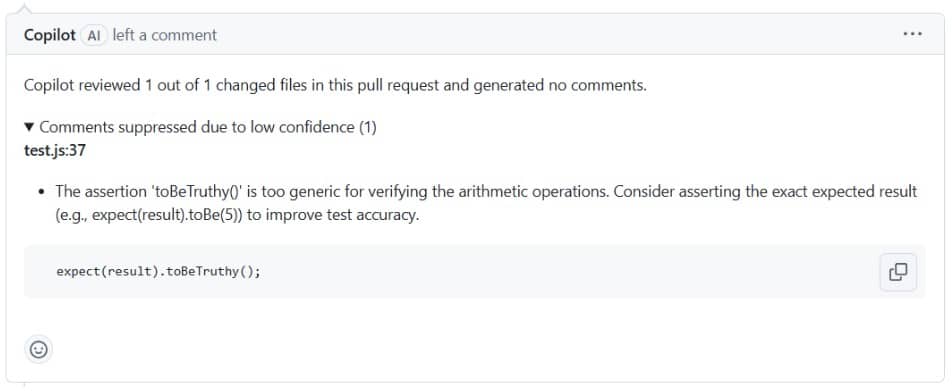

Let's look at the first example. GitHub review recommends asserting a specific value instead of using a generic check. Without this change, the test does not bring much value, because it would still pass if the value were wrong.

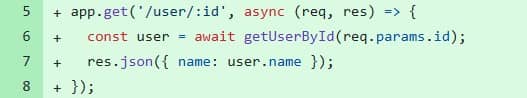

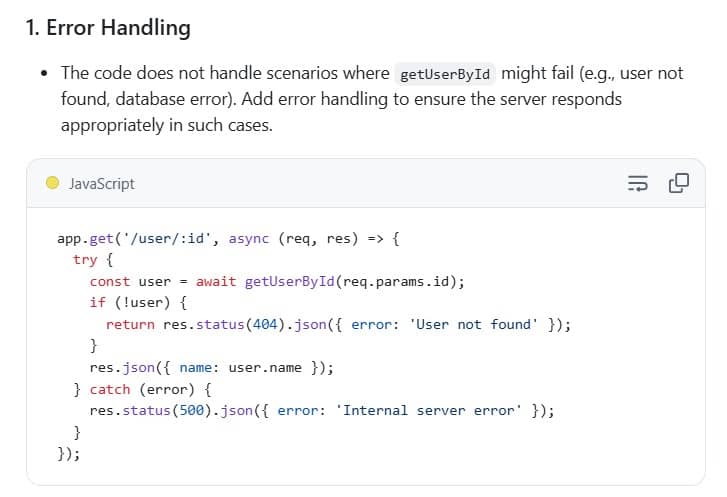

The second example shows an edge-case bug in a REST API endpoint, something a GitHub code review could catch early.

If getUserById() returns null or undefined, accessing user.name throws an exception (“Cannot read property 'name' of null”), which could crash the server. GitHub review recommends wrapping the code in error handling, as shown in the Copilot suggestion screenshot.
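Here is a minimal sketch of the endpoint problem, assuming an Express-style handler (`req.params`, `res.status().json()`). The `getUserById` implementation, the in-memory `users` map and the `mockResponse` helper are hypothetical stand-ins, so the code runs without a framework or database. The try/catch version is one plausible form of the suggested error handling, not Copilot's exact output.

```javascript
// Hypothetical in-memory store standing in for a database.
const users = new Map([[1, { id: 1, name: "Alice" }]]);

// Hypothetical lookup: returns undefined when the user does not exist.
function getUserById(id) {
  return users.get(id);
}

// Original handler: throws a TypeError for an unknown id,
// because it reads `.name` from undefined.
function getUserNameUnsafe(req, res) {
  const user = getUserById(Number(req.params.id));
  res.status(200).json({ name: user.name });
}

// Handler wrapped in error handling: the TypeError is caught
// and turned into a 500 response instead of escaping the handler.
function getUserNameWithTryCatch(req, res) {
  try {
    const user = getUserById(Number(req.params.id));
    res.status(200).json({ name: user.name });
  } catch (err) {
    console.error("Failed to load user:", err.message);
    res.status(500).json({ error: "Internal server error" });
  }
}

// Minimal mock of an Express-style response object, for running the sketch.
function mockResponse() {
  return {
    statusCode: null,
    body: null,
    status(code) {
      this.statusCode = code;
      return this;
    },
    json(payload) {
      this.body = payload;
      return this;
    },
  };
}

try {
  getUserNameUnsafe({ params: { id: "42" } }, mockResponse());
} catch (err) {
  console.log(err instanceof TypeError); // true
}

const res = mockResponse();
getUserNameWithTryCatch({ params: { id: "42" } }, res);
console.log(res.statusCode, JSON.stringify(res.body)); // 500 {"error":"Internal server error"}
```

The try/catch keeps the process alive, but it reports a missing user as a server error (500) rather than as a missing resource.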

3. Best practices and guidelines check. It evaluates whether the code follows best practices and coding standards.

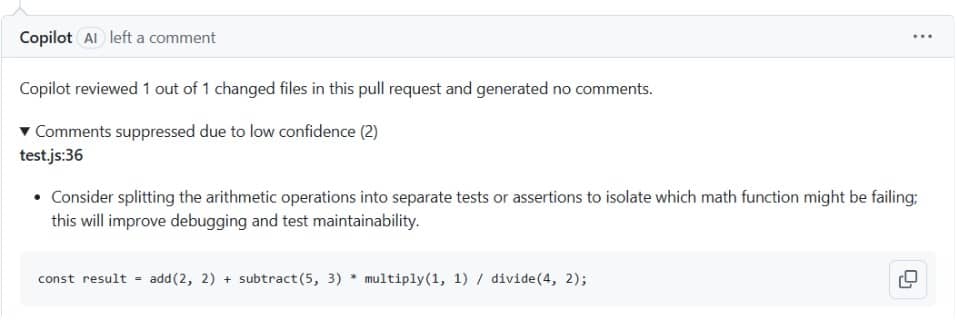

For example, code can be fine from a functional point of view but still not ideal from a maintenance and debugging perspective.
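The screenshot's code is not reproduced in the text. As a hypothetical illustration of this category, both functions below accept up to three retries and throw above that. The second is easier to maintain and debug, for three reasons:

- It replaces the magic number with a named constant.
- It uses a descriptive function name.
- It throws an error that states what went wrong.

All names here are invented for the sketch.

```javascript
// Before: works, but the meaning of 3 and the cause of the error are unclear.
function check(n) {
  if (n > 3) throw new Error("Invalid");
  return n;
}

// After: same accept/reject behavior, clearer intent and a more useful error message.
const MAX_RETRIES = 3;

function validateRetryCount(retryCount) {
  if (retryCount > MAX_RETRIES) {
    throw new RangeError(`retryCount must be at most ${MAX_RETRIES}, got ${retryCount}`);
  }
  return retryCount;
}

console.log(check(2), validateRetryCount(2)); // 2 2

try {
  validateRetryCount(5);
} catch (err) {
  console.log(err.message); // retryCount must be at most 3, got 5
}
```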

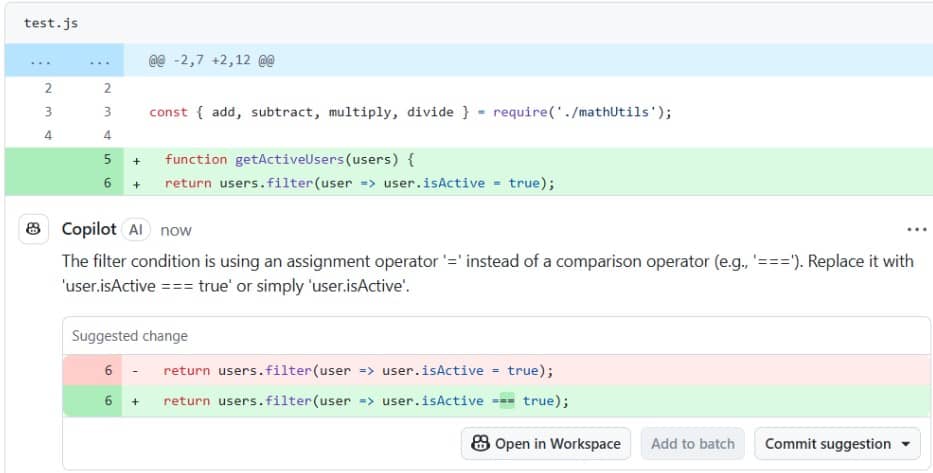

4. Catching bugs early. Let's look at an example involving the assignment operator.

The condition uses = (assignment) instead of === (comparison). An assignment expression evaluates to the assigned value. When that value is truthy, the condition is always true, and the data is modified unintentionally.

Even a human reviewer can miss this kind of bug, especially in short lambda functions.
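Here is a hypothetical reproduction of this bug; the data and function names are invented for illustration. Inside an arrow function passed to `Array.prototype.filter`, a single `=` is easy to overlook.

```javascript
// Hypothetical data: a fresh list of users with roles on every call.
function makeUsers() {
  return [
    { name: "Alice", role: "admin" },
    { name: "Bob", role: "viewer" },
  ];
}

// Buggy filter: `=` assigns "admin" to every user's role. The assignment
// evaluates to "admin", a truthy string, so every user passes the filter
// and the original objects are modified in place.
function findAdminsBuggy(users) {
  return users.filter((user) => user.role = "admin");
}

// Fixed filter: `===` compares without modifying anything.
function findAdmins(users) {
  return users.filter((user) => user.role === "admin");
}

const buggyUsers = makeUsers();
console.log(findAdminsBuggy(buggyUsers).length); // 2 (Bob is wrongly included)
console.log(buggyUsers[1].role);                 // admin (Bob's role was overwritten)

const users = makeUsers();
console.log(findAdmins(users).length); // 1
console.log(users[1].role);            // viewer
```

`filter` only checks whether the callback's return value is truthy, so the buggy version raises no error. The only symptoms are the wrong result and the silently modified data.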

Limitations of GitHub code review

Copilot review sometimes misses architectural issues or logic bugs. In such cases, another GitHub Copilot feature can help: “Ask Copilot about this file”. Alternatively, you can simply ask Copilot in your IDE to suggest code improvements. Either way, Copilot generates a more comprehensive report, including recommendations that the code review comments may miss.

For example, let’s return to the REST API endpoint from the second example.

The Copilot code review proposed only error handling, as shown above in the Copilot suggestion screenshot. But if we use “Ask Copilot” with the prompt “What else can be improved in this code?”, the result is more comprehensive, with changes proposed by category: Error Handling, Input Validation, Security, Response Data, Logging, Asynchronous Function Optimization, Use Constants for Status Codes, Documentation, and Unit Tests. All of these suggestions are reasonable (see the attached screenshots for details of the recommended code changes).
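This is a minimal sketch of what several of these categories can look like when applied to a user-lookup endpoint like the one in the second example. It covers input validation, an explicit check for a missing user, limited response data, logging, and constants for status codes. It assumes an Express-style `req`/`res` interface. `HTTP_STATUS`, the in-memory `users` map, the `passwordHash` field and `mockResponse` are hypothetical. The code is kept synchronous for brevity, so it does not address the asynchronous-optimization suggestion.

```javascript
// Named status codes instead of magic numbers.
const HTTP_STATUS = Object.freeze({
  OK: 200,
  BAD_REQUEST: 400,
  NOT_FOUND: 404,
  INTERNAL_ERROR: 500,
});

// Hypothetical store; each record has a field that must never be returned.
const users = new Map([
  [1, { id: 1, name: "Alice", email: "alice@example.com", passwordHash: "not-a-real-hash" }],
]);

// Hypothetical lookup returning undefined for unknown ids.
function getUserById(id) {
  return users.get(id);
}

function getUser(req, res) {
  // Input validation: reject ids that are not positive integers.
  const id = Number(req.params.id);
  if (!Number.isInteger(id) || id <= 0) {
    return res.status(HTTP_STATUS.BAD_REQUEST).json({ error: "User id must be a positive integer" });
  }
  try {
    const user = getUserById(id);
    // Explicit check for a missing user instead of relying on an exception.
    if (!user) {
      return res.status(HTTP_STATUS.NOT_FOUND).json({ error: "User not found" });
    }
    // Response data: return only public fields, never passwordHash.
    return res.status(HTTP_STATUS.OK).json({ id: user.id, name: user.name });
  } catch (err) {
    // In a real service the lookup could fail (e.g. a database error).
    // Log the cause server-side and return a generic message to the client.
    console.error("getUser failed:", err);
    return res.status(HTTP_STATUS.INTERNAL_ERROR).json({ error: "Internal server error" });
  }
}

// Minimal mock of an Express-style response object, for running the sketch.
function mockResponse() {
  return {
    statusCode: null,
    body: null,
    status(code) {
      this.statusCode = code;
      return this;
    },
    json(payload) {
      this.body = payload;
      return this;
    },
  };
}

for (const id of ["1", "42", "abc"]) {
  const res = mockResponse();
  getUser({ params: { id } }, res);
  console.log(id, res.statusCode, JSON.stringify(res.body));
}
// 1 200 {"id":1,"name":"Alice"}
// 42 404 {"error":"User not found"}
// abc 400 {"error":"User id must be a positive integer"}
```

Checking for a missing user explicitly lets the endpoint return 404 for an unknown id. The catch block then handles only unexpected failures.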

Does Copilot offer deep code review insights or just surface-level feedback?

GitHub Copilot (as of now) tends to focus on surface-level suggestions, such as syntax, naming, formatting, and common best practices, rather than deep code review feedback. It cannot replace human code review. GitHub review is good at suggesting better names for variables and functions, pointing out unused imports, offering minor changes, and recommending well-known best practices. It struggles with business logic, complex security and performance issues, architectural design, and some types of testing, such as edge-case testing.

What's your experience with managing PRs after AI reviews?

After the AI review, we still need to review the code and the suggestions the AI generated. This does not mean that AI is not helpful, but we cannot rely on AI alone. It can suggest chunks of code that work in isolation but don’t fit well together, so you need to modify and merge them. AI doesn’t always see the bigger picture: sometimes we have business constraints, or legacy systems with their own coding approaches. Sometimes you spend more time reverse-engineering the AI's logic than you would have spent writing the code from scratch.

How do you integrate Copilot reviews with human ones in your workflow?

Copilot is usually added as an additional code reviewer, not the sole one. We follow several practices that make code review more effective:

- Engineers ask for Copilot feedback before opening a PR.

- Engineers keep PRs small to simplify the review process.

- A human reviewer always follows up.

- When engineers accept an AI suggestion, they comment on why, confirming that they reviewed and understood the change. This helps build team trust around AI use.

Since GitHub Copilot’s code review feature is still relatively new, we are in the process of defining best practices for working with it.

What's your experience with false positives in Copilot's reviews?

Copilot might flag code that is correct or already well optimised, because it draws on common code patterns rather than your specific intent. Copilot is good at boilerplate, repetitive code, and common patterns, but not at business logic. The opposite also happens: the review can miss a real issue. For example, in the Copilot suggestion screenshot above, the review suggested exception handling. However, it did not suggest verifying the user variable, which can be null or undefined and cause a runtime error. If we ask Copilot directly to improve the code in the IDE, or use “Ask Copilot” in GitHub, it suggests the relevant error-handling code.

As for the time spent validating suggestions, it depends on the size and complexity of the PR. It usually ranges from 1-2 minutes for small, simple changes to 2-10 minutes for more complex suggestions. The suggested code should also be tested and verified, especially for edge cases.

What adoption challenges did you face with Copilot code review?

Copilot doesn’t currently let you type custom prompts directly into the PR interface (as of early 2025). You can still guide it using comments in your code, or use Copilot Chat (for example, in VS Code) to ask targeted review questions. GitHub Copilot Review is currently supported only in GitHub-hosted repositories. Unfortunately, this means we can’t use its code review capabilities in GitLab, Bitbucket, Azure DevOps, etc., which limits AI-assisted feedback outside the GitHub ecosystem.

If you want similar functionality elsewhere, you might need to explore alternatives like DeepSource, Amazon CodeGuru Reviewer, Sider, Codacy or others.

FAQs

Yes, GitHub Copilot can analyse code to suggest improvements, detect potential bugs, and verify adherence to best practices. For a more comprehensive analysis, users can use the "Ask Copilot about this file" feature rather than relying solely on automated review comments.

Yes, GitHub Copilot can improve code by suggesting more readable constructions, identifying missing error handling, catching logic bugs like using assignment operators instead of comparison operators, and recommending adherence to best practices. However, all suggestions should be validated by developers to ensure they align with the specific project requirements.
