Artificial intelligence (AI) and large language models (LLMs) are hot topics nowadays, and for good reason. LLMs are a powerful class of AI models, but it's important to remember they're just one piece of the puzzle. While true "general" AI might be further down the road, AI as we know it now and LLMs are already making waves in software development and testing. Let's explore how this technology is transforming the way we test software.

How is AI transforming software testing and test automation?

Software testing has undergone significant transformations over the last decades. Its evolution started with manual testing, progressed to automated testing, and now it's moving to AI-powered testing strategies. AI is already being used in some areas of quality assurance (QA); now, let's delve into the key AI applications within the realm of QA.

Key AI tools and their applications in software testing:

- GitHub Copilot helps generate and autocomplete code and comments. This tool has already been used on some test automation projects in our company. It is one of the top AI-based services worth considering.

- ChatGPT is a well-known AI system. For test engineers, ChatGPT can help generate test cases based on requirements, traceability matrices, test plans and strategies, test data, SQL queries, API clients, XPath locators for elements, regular expressions and more.

From the test automation perspective, ChatGPT can be used to implement utilities and helpers, prepare test data, generate code and much more. There is also a paid version, ChatGPT Plus, with extra features that allow users to create and use custom GPTs.

ChatGPT Plus allows you to use custom GPTs configured with your own data, such as documents (guidelines, best practices, policies), images or other files. It helps with preparing a comprehensive test plan, strategy and other testing documentation, and it can provide feedback on mockups during development. Those are only the most popular ways of using ChatGPT.

Let’s delve into the hands-on experience of the ELEKS quality assurance office. First, we will explore test automation tools and the generation of automated scripts based on requirements. Then, we will review how AI processes images and provides feedback based on visual information. Lastly, we will describe the system we implemented as a PoC for mobile applications using the OpenAI API.

It is worth mentioning this research took place in 2023 and the first quarter of 2024. While the prices mentioned in this article can be a bit different now, the main approaches and results are still conceptually valid.

Overview of AI in end-to-end test automation

The purpose of integrating AI into the test automation process is to automatically generate code and execute tests based on requirements or test cases. This concept is not new—before 2023, similar frameworks were already available on the market. These frameworks claimed to be able to automatically heal locators (such as Selenium Reinforcement Learning, Healenium, Testim, and others) or even generate code for automated tests, based on test cases.
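To make the idea of locator healing more concrete, here is a toy sketch in Python. It is not how Healenium, Testim or any other tool mentioned above works internally; the attribute snapshot, the scoring rule and the 0.5 threshold are all assumptions made for illustration. The general idea is that when the original locator stops matching, the test falls back to the element whose attributes most resemble the last known good element.

```python
from typing import Optional


def similarity(known: dict, candidate: dict) -> float:
    """Share of the known element's attributes that the candidate still has unchanged."""
    if not known:
        return 0.0
    same = sum(1 for key, value in known.items() if candidate.get(key) == value)
    return same / len(known)


def heal_locator(known: dict, candidates: list, threshold: float = 0.5) -> Optional[dict]:
    """Return the most similar candidate, or None if nothing is close enough."""
    best = max(candidates, key=lambda c: similarity(known, c), default=None)
    if best is None or similarity(known, best) < threshold:
        return None
    return best


# Hypothetical snapshot of a button saved during the last passing run.
known_button = {"tag": "button", "id": "submit", "text": "Sign in", "class": "btn primary"}

# Hypothetical elements found on the page after a UI update renamed the id.
page_elements = [
    {"tag": "a", "id": "help", "text": "Help", "class": "link"},
    {"tag": "button", "id": "login-submit", "text": "Sign in", "class": "btn primary"},
]

healed = heal_locator(known_button, page_elements)
print(healed["id"] if healed else "no match")
```

A real tool would need a much richer signal (DOM position, visual features, history of changes), which is part of why such healing cannot be guaranteed to pick the right element.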

The main disadvantages of test automation solutions based on AI

The main goal of AI-powered frameworks is to make tests more stable, auto-fix them when they fail, and even write them instead of engineers. The idea is great, but if it worked as promised, nobody would still be using Selenium and other test frameworks that require coding and skilled engineers.

Main disadvantages of AI-powered test automation frameworks:

- Slowness. Data should be shared with and processed by an AI-powered backend and then returned to the client.

- Stability. There is no guarantee that the generated code will still work after the UI is updated (in many cases, generated code requires review and fixing), so we cannot fully rely on such tests or trust the generated code.

- Dependency on additional wrappers. During our research, we found that popular AI tools use libraries to access AI services like OpenAI API or similar, so additional dependencies are introduced.

- Cost. AI-powered tools typically require a monthly or yearly subscription payment.

Because of these disadvantages, established test automation tools such as Selenium, Cypress, Playwright and WebdriverIO remain more popular than AI-powered UI test automation tools.

The fact remains that even when we use AI-driven frameworks, we still need to wait for code generation and then fix and debug the autogenerated code, which is exactly the overhead we were trying to eliminate by using AI. Still, let’s review the AI test automation frameworks available today.

We have investigated frameworks based on Playwright powered with AI. Playwright is one of the most popular and powerful E2E testing frameworks on the market, so it is reasonable to look at the different modifications which extend it with AI features.

Auto Playwright

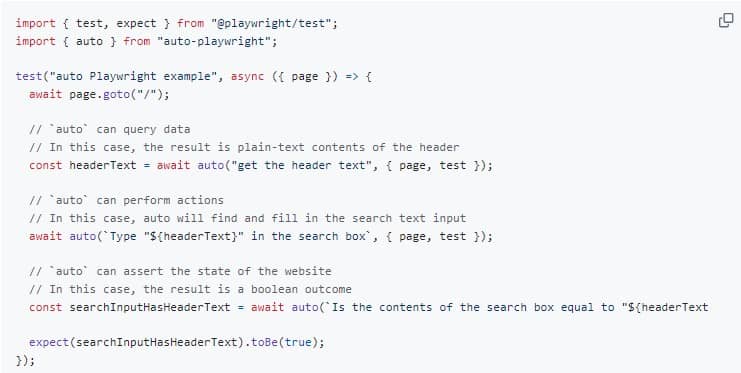

This framework is a wrapper over Playwright that uses the OpenAI API under the hood to generate code and locators based on the DOM of the web page. You need your own OpenAI API token to generate and run tests. While testing it, we found some limitations. Auto Playwright works slower than Playwright, which is expected. It sends the whole page to OpenAI, so the cost depends on the number of tokens sent; in our case, it was around $0.10 to $0.50 per 10 API calls (steps), although prices have decreased since then. To reduce costs, Auto Playwright uses an HTML sanitiser library to decrease the size of the HTML document.
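The effect of sanitising a page before sending it to a model can be illustrated with a small Python sketch. This is not Auto Playwright's code: the list of dropped tags, the list of kept attributes and the rough "four characters per token" estimate are all assumptions chosen for the example.

```python
from html import escape
from html.parser import HTMLParser

# Assumed to carry no value for locating elements.
DROPPED_TAGS = {"script", "style", "svg", "noscript"}
# Assumed to be the attributes most useful for building locators.
KEPT_ATTRS = {"id", "name", "type", "href", "role", "aria-label", "placeholder"}
VOID_TAGS = {"input", "img", "br", "hr", "meta", "link"}


class Sanitiser(HTMLParser):
    """Rebuilds the page without dropped tags, comments and noisy attributes."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.parts = []
        self.skip_depth = 0  # > 0 while inside a dropped tag

    def handle_starttag(self, tag, attrs):
        if tag in DROPPED_TAGS:
            self.skip_depth += 1
            return
        if self.skip_depth:
            return
        kept = "".join(
            f' {name}="{escape(value, quote=True)}"'
            for name, value in attrs
            if name in KEPT_ATTRS and value is not None
        )
        self.parts.append(f"<{tag}{kept}>")

    def handle_endtag(self, tag):
        if tag in DROPPED_TAGS:
            self.skip_depth = max(0, self.skip_depth - 1)
            return
        if not self.skip_depth and tag not in VOID_TAGS:
            self.parts.append(f"</{tag}>")

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.parts.append(escape(data.strip(), quote=False))


def sanitise(page_html: str) -> str:
    parser = Sanitiser()
    parser.feed(page_html)
    parser.close()
    return "".join(parser.parts)


def estimate_tokens(text: str) -> int:
    # Rough rule of thumb (about 4 characters per token); not a real tokenizer.
    return max(1, len(text) // 4)


page = """
<html><head><style>.btn { color: red; }</style>
<script>window.analytics = {};</script></head>
<body><!-- tracking pixel -->
<form class="login-form" data-reactid="42">
  <input type="text" name="email" class="form-control" placeholder="Email">
  <button id="submit" class="btn btn-primary" style="margin: 4px">Sign in</button>
</form></body></html>
"""

clean = sanitise(page)
print(clean)
print(estimate_tokens(page), "->", estimate_tokens(clean))
```

Since the cost scales with the number of tokens sent, every script, style block and decorative attribute removed this way lowers the price of each AI step.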

As for the quality of the generated tests, they were runnable, but only in simple cases on web apps in English. In more complex scenarios, tests failed because of missing locators or timeouts.

Test implemented on Auto Playwright

In the example above, you can see that there are no locators in the code; they are dynamically generated by the AI layer just before execution. The author of this framework recommends looking at a more robust solution—ZeroStep.

According to the author, ZeroStep offers a similar API but a more robust implementation through its proprietary backend. Auto Playwright was created to explore the underlying technology of ZeroStep and establish a basis for an open-source version of that software. For production environments, the author suggests opting for ZeroStep.

ZeroStep

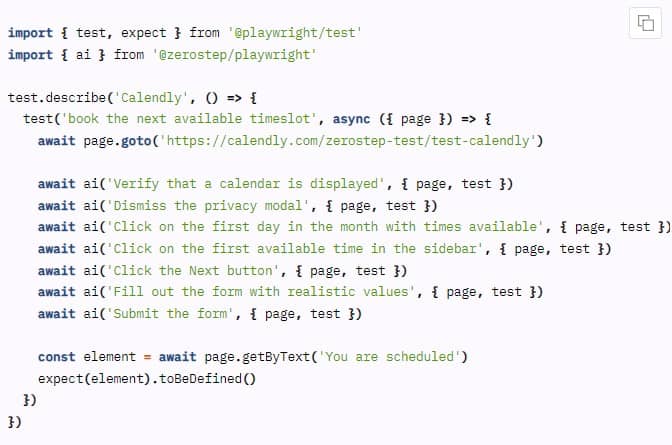

After a few experiments with ZeroStep, we found that it works better than Auto Playwright. ZeroStep relies on its own backend, but OpenAI is also used under the hood. To use it, register on the ZeroStep website and obtain a token for your tests. The free version is limited to 500 AI function calls per month, and the $20 per month plan raises the limit to 2,000 calls (again, pricing may have changed by the time you read this). This framework works faster than Auto Playwright but slower than the original Playwright framework. While testing it, we encountered some issues with non-English websites and found that not all controls were recognised in English web applications either.
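A quick back-of-the-envelope calculation shows what these quotas mean in practice. The quotas are the ones quoted above; the suite size and the number of AI steps per test are hypothetical values picked for illustration.

```python
def full_runs_per_month(monthly_calls: int, tests: int, ai_steps_per_test: int) -> int:
    """How many complete suite runs fit into a monthly quota of AI function calls."""
    calls_per_run = tests * ai_steps_per_test
    if calls_per_run <= 0:
        raise ValueError("a suite run must contain at least one AI step")
    return monthly_calls // calls_per_run


# Quotas quoted in the article.
FREE_CALLS, PAID_CALLS = 500, 2000
# Hypothetical suite: 20 tests with 5 AI steps each, i.e. 100 calls per run.
TESTS, STEPS = 20, 5

print(full_runs_per_month(FREE_CALLS, TESTS, STEPS))  # 5
print(full_runs_per_month(PAID_CALLS, TESTS, STEPS))  # 20
```

Under these assumptions, even the paid plan covers only about one full run per working day, far fewer than a suite triggered on every commit would need.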

Code written in ZeroStep

The test above contains human-readable instructions that are translated into Playwright commands at runtime. It is a more advanced example. If you try to write similar code for your own application, you may run into issues with element identification, timeouts and so on, so you will need to experiment with the step descriptions and find the wording that produces meaningful, runnable tests.

Comparison of Auto Playwright vs. ZeroStep frameworks

| Comparison criteria | Auto Playwright (PoC) | ZeroStep (commercial) |
|---|---|---|
| Uses OpenAI API | Yes | Yes (ZeroStep proprietary API + OpenAI) |
| Uses HTML sanitization | Yes | No |
| Uses Playwright API | Yes | No (uses some Playwright API, but predominantly relies on Chrome DevTools Protocol, CDP) |
| Snapshots / caching | No | Claimed, but no information found in the documentation |
| Implements parallelism | No | Yes |
| Allows scrolling | No | Yes |
| License | MIT | MIT |

Auto Playwright is more of a proof of concept than a ready-to-use commercial tool. ZeroStep is a commercial tool that has some potential, but considering its slowness, issues with control identification, and other challenges, it is hard to use it as a replacement for the original Playwright framework.

The idea of generating test scripts from requirements is interesting and looks great in demos. However, it is still far from commercial usage due to the high cost of code generation and execution, slow code execution, incorrect processing of more complex web applications, and the inability to work with dynamic elements that appear in the DOM model only after a user performs some actions on the UI. We believe that it will significantly improve over time, and ultimately, we will use it for at least some types of testing, such as simple Smoke tests.

How AI-powered visual analysis enhances application testing

AI-powered visual analysis services and tools also assist in testing by taking images as input, processing them, and generating output. This approach has plenty of applications, such as generating test cases, providing quality feedback and reports, comparing images, performing OCR, working with complex PDF documents, and others.

The public introduction of models like GPT-4 Turbo with Vision has opened up opportunities for thousands of startups and products.

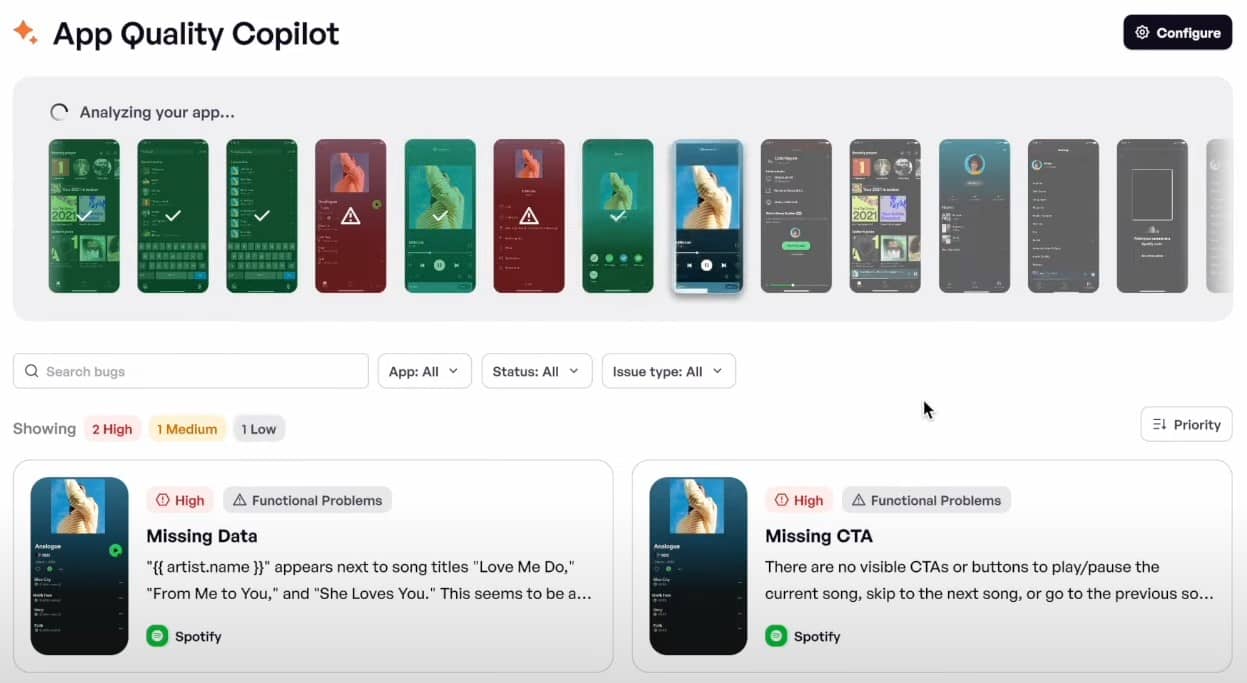

One of the services that offer quality feedback based on screenshots is App Quality Copilot. It is a new service on the market, with its first public version appearing at the beginning of 2024. It provides three main functionalities:

- Generation and execution of automated mobile tests (UI end-to-end) from requirements.

- Providing bug reports for screenshots taken from mobile applications, desktop and mobile browsers. This feedback contains suggestions from different perspectives and areas: functional, translation issues, UI/UX insights, missing data, broken images, and others.

- Generation of test cases from requirements.

In the demo video, we can explore the functionality of this service: the user selects a mobile application, which is then divided into multiple screenshots, each of which is analysed with AI. After the analysis, feedback and an overview of the issues found are provided for each screenshot. These issues can be functional or non-functional, and they are grouped by priority: High, Medium or Low.

Our team did not have an opportunity to work with App Quality Copilot, but it is worthy of attention, and we may return to it at some time.

ELEKS UI Copilot: implementing AI-powered proof of concept

In the scope of the ELEKS R&D project, we have developed and implemented our own AI assistant, ELEKS UI Copilot, which helps us to provide quality feedback or generate test cases by taking screenshots of mobile applications or any image attached.

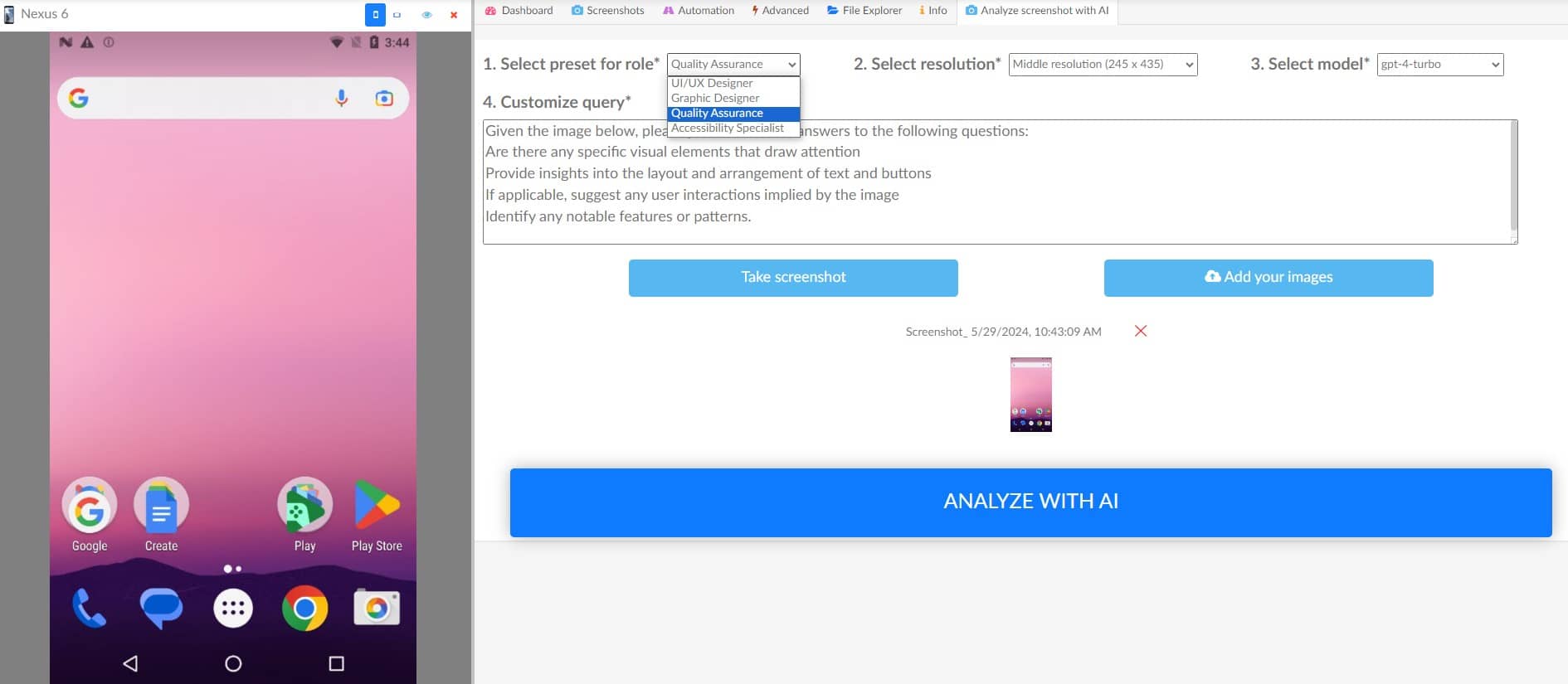

To test mobile applications, we use Device Farmer, an open-source web-based application that allows us to interact with Android mobile devices, run mobile applications and test them. We extended the Device Farmer functionality with an AI analyser feature, so the whole testing process looks as follows:

- The user logs in using their credentials;

- Connects to a mobile device and starts testing some scenarios;

- Captures screenshots of the application or uploads existing screenshots;

- Selects a prompt, screen resolution and model;

- Sends the screenshot for AI analysis;

- Receives feedback based on the screenshot.

The feedback that the user receives is comprehensive and depends on the prompt selected on the UI. The prompt can also be customised to get more specific recommendations. For example, users can request UI/UX improvements based on the guidelines or generate the most critical test cases for a given screen.
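The steps above (screenshot, prompt, model) map naturally onto a single chat completions request with an image attached. The sketch below only builds the request body and sends nothing over the network. It is not the ELEKS UI Copilot implementation: the `ROLE_PROMPTS` dictionary, the function name and the `max_tokens` value are hypothetical, while the message layout (a `text` part plus an `image_url` part holding a base64 data URL and a `detail` setting) follows the format OpenAI documents for vision-capable models.

```python
import base64
import json

# Hypothetical preconfigured prompts per role; the real tool's prompts are not published.
ROLE_PROMPTS = {
    "qa": "Act as QA engineer and suggest the most critical test cases for this screen.",
    "ux": "Review this screen and suggest UI/UX improvements based on platform guidelines.",
}


def build_analysis_request(image_bytes: bytes, role: str,
                           model: str = "gpt-4-turbo", detail: str = "low") -> dict:
    """Build the JSON body for a chat completions call with one screenshot attached."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": 800,  # assumed cap on the length of the feedback
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": ROLE_PROMPTS[role]},
                {"type": "image_url",
                 "image_url": {"url": f"data:image/png;base64,{encoded}",
                               "detail": detail}},
            ],
        }],
    }


# Placeholder bytes stand in for a real PNG screenshot captured from the device.
fake_screenshot = b"\x89PNG\r\n\x1a\n" + b"\x00" * 16
request_body = build_analysis_request(fake_screenshot, role="qa")
print(json.dumps(request_body, indent=2)[:300])
```

In a real integration the body would be sent to the chat completions endpoint together with an API key, and the `detail` setting is one lever for trading analysis accuracy against cost.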

Prompts are preconfigured for each role, but they can be customised as well. We have also added the ability to change the resolution of the screenshot to be analysed, which helps to reduce the costs associated with the data sent to OpenAI. Please see the screenshot below:

ELEKS UI Copilot

ELEKS UI Copilot: Evaluation of AI-powered visual analysis feedback

The quality of the feedback exceeded our expectations, as it provided a lot of valuable information from the testing perspective. We used the GPT-4 Turbo model, which works well with OCR and images, ensuring accurate handling of images with average screen resolutions. Just before the publication of this article, a new model, GPT-4o, appeared, which is cheaper and faster. Now, let’s review some examples of requests we can handle with our AI assistant.

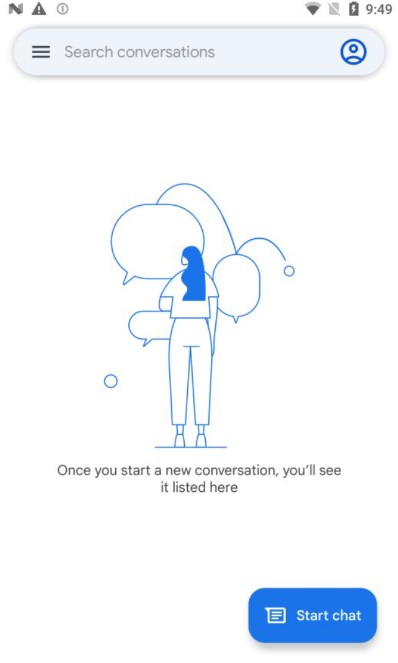

Example 1. Default messaging application

Prompt: “Act as QA engineer and suggest 5 of the most critical test cases for mobile application.”

Screenshot to be analysed:

The feedback was quite comprehensive, as shown in the table below with generated Test Cases:

| Test case title | Description |
|---|---|
| Functionality test: Sending and receiving messages | Test case: Verify that a user can send a message and the intended recipient receives it. Steps: User A sends a message to User B. User B checks for the message’s arrival. Expected result: User B receives the message as intended within an acceptable amount of time. |
| Performance test: Load handling | Test case: Assess the app's performance under high-traffic conditions. Steps: Simulate multiple users sending messages simultaneously. Expected result: The app should handle the load without significant performance degradation or crashes. |
| Security test: Data encryption and spam protection | Test case: Ensure messages are encrypted and spam protection works as intended. Steps: Monitor and analyse network traffic when messages are sent and received to check for encryption. Try sending messages with spam-like characteristics to see if the app identifies and filters them. Expected result: Messages should be encrypted in transit. The spam protection feature should warn or block spam messages according to the app's defined behaviour. |
| Usability test: User interface consistency and responsiveness | Test case: Examine the ease of navigation and responsiveness of UI elements. Steps: Interact with all UI elements like menus, buttons, and links to ensure they respond correctly on different devices and screen resolutions. Expected result: All UI elements should be consistently usable across various devices, and the app should display and function properly on different screen sizes and orientations. |
| Disruption test: Handling interruptions | Test case: Determine the app's ability to handle interruptions like incoming calls or switching apps. Steps: While the app is in use, simulate an incoming call or switch to another app, then return to the messaging app. Expected result: The app should pause and resume appropriately, without losing data or crashing, and should maintain the user's state within the app before and after the interruption. |

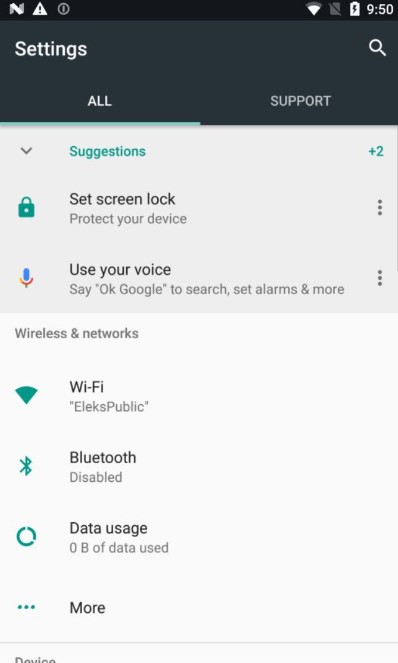

Example 2. Setting page analysis

Prompt: “Given the image below, please provide detailed answers to the following question: are there any specific visual elements that draw attention. Provide insights into the layout and arrangement of text and buttons. If applicable, suggest any improvements according to UI/UX guidelines”

Screenshot to be analysed:

The prompt for this example is more complex and contains more instructions, and was tested on a typical Settings page.

After selecting screen resolution and clicking the button “Analyse with AI”, we got quite comprehensive feedback about this screenshot, which was divided into three areas: “Visual elements that draw attention”, “Layout and arrangement”, and “Suggestions for improvement”. The most interesting part, of course, is suggestions for improvements.

| Visual elements that draw attention | Layout and arrangement | Suggestions for improvement |
|---|---|---|
| The "Suggestions" heading, with its green plus icon and blue accent, draws attention due to its position at the top of the list and the use of colour. | The interface uses a clean and organized layout following Material Design principles, with a clear hierarchy that separates the settings into different sections like "Wireless & networks" and "Device". | The "+2" next to "Suggestions" isn't self-explanatory. It could be improved with a tooltip on tap or a better indication of what "+2" signifies. |
| The icons accompanying each setting, such as the lock icon for "Set screen lock" and the microphone icon for "Use your voice," help users quickly identify the functions associated with each setting. | The use of dividers and whitespace between the sections helps to visually separate different groups of settings, aiding in navigation and readability. | The contrast between the text and the background meets legibility standards, but accessibility improvements could include options for larger text or high-contrast modes for better visibility. |
| The Wi-Fi setting indicating "EleksPublic" is also noticeable as it includes more detail than other settings, specifically showing that a Wi-Fi network is connected, which provides immediate feedback about the device’s connectivity. | The use of a hamburger menu icon in the top-left corner and a magnifying glass icon in the top-right corner implies a menu can be accessed from the left and a search function is available. | While the tabs at the top indicate "ALL" and "SUPPORT," it is not clear whether "ALL" is the currently selected tab. Improving the visual distinction between selected and unselected tabs would enhance user understanding. |
| Three-dot menu icons are placed next to "Set screen lock" and "Use your voice," suggesting there are additional options or actions that can be performed related to these settings. | | The "More" button under "Wireless & networks" could provide an indicator (like an arrow or more descriptive text) to make it more explicit that there are additional items in the list. |

ELEKS UI Copilot: possible use cases

Our proof of concept showed that we can use it to receive quick feedback on any visual or text data we have, from Android mobile applications connected to Device Farmer to any screenshot uploaded through the ELEKS UI Copilot interface.

It can assist QA teams and developers in their daily testing activities by identifying test cases, issues and defects, and by drawing attention to areas that might be overlooked during regular testing. For example, a test engineer who receives mockups of an application at an early stage of development can upload the images to ELEKS UI Copilot, get feedback immediately, and check whether the design follows Google or Apple guidelines.

Additionally, ELEKS UI Copilot can validate applications against UI/UX best practices. It can provide suggestions on element visibility, layouts, and font choices, including colour gradients.

Key advantages of ELEKS UI Copilot:

- Ability to decrease screen resolution to save costs. The cost of 1 processed image is from $0.002 to $0.01, and it depends on the screen resolution and number of tokens transferred.

- Ability to store custom prompts and reuse them from a library of predefined prompts for each role.

- Integrated screen capturing feature.

- Integration with Active Directory. Users inside the company can use the system without creating a personal ChatGPT account for each user.
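The link between screen resolution and cost can be sketched in Python. The tile-based formula below follows the calculation OpenAI documented for GPT-4 Turbo with Vision at the time (a flat 85 tokens per image plus 170 tokens per 512×512 tile in high-detail mode); the price of $0.01 per 1,000 input tokens and the choice not to upscale small images are assumptions, and both the formula and the pricing may have changed since. Tokens for the prompt text and the model's answer are not included.

```python
import math

BASE_TOKENS = 85            # flat cost per image
TILE_TOKENS = 170           # cost per 512x512 tile in high-detail mode
PRICE_PER_1K_INPUT = 0.01   # USD, assumed GPT-4 Turbo input price; check current pricing


def image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Estimate the input tokens charged for one image."""
    if detail == "low":
        return BASE_TOKENS
    # Step 1: fit the image inside a 2048x2048 square.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # Step 2: shrink so the shortest side is at most 768 px (no upscaling assumed).
    scale = min(1.0, 768 / min(w, h))
    w, h = round(w * scale), round(h * scale)
    # Step 3: count the 512 px tiles needed to cover the image.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return BASE_TOKENS + TILE_TOKENS * tiles


def image_cost_usd(width: int, height: int, detail: str = "high") -> float:
    return image_tokens(width, height, detail) * PRICE_PER_1K_INPUT / 1000


for size in [(1080, 1920), (540, 960), (512, 512)]:
    print(size, image_tokens(*size), round(image_cost_usd(*size), 5))
```

Under these assumptions, a full-resolution phone screenshot costs about one cent, while a 512×512 image costs about a quarter of that, which is roughly in line with the range quoted above.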

ChatGPT Plus vs. ELEKS UI Copilot: pros and cons

| Comparison criteria | ChatGPT Plus (model GPT-4 Turbo) | ELEKS UI Copilot |
|---|---|---|
| Ability to change screen resolution | No | Yes |
| Pre-defined list of prompts | No (only 4 in custom GPT) | Yes |
| Integrated screenshot tool | No | Yes |
| Limits | GPT-4 has a rate limit of 40 messages every 3 hours | 500 RPM, 300,000 TPM (API) |
| Cost | $20/month | 1 processed image costs from $0.002 to $0.01 depending on resolution and number of tokens |
| Sharing of the license between users | Need to share user/password or buy personal account for each user | User is logged in using corporate account, password is not exposed |
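The cost row can be turned into a simple break-even estimate. The sketch below uses only the figures from the table and deliberately ignores everything else (prompt and answer tokens, infrastructure, the number of users sharing the tool), so treat it as a rough orientation rather than a pricing model.

```python
SUBSCRIPTION_USD_PER_MONTH = 20

# Per-image API cost range from the table, in thousandths of a dollar
# (integers avoid floating-point rounding in the division below).
IMAGE_COST_MILLI_USD = {"low resolution": 2, "high resolution": 10}


def break_even_images(cost_milli_usd: int) -> int:
    """Images per month at which API spend equals one subscription fee."""
    return SUBSCRIPTION_USD_PER_MONTH * 1000 // cost_milli_usd


for label, cost in IMAGE_COST_MILLI_USD.items():
    print(label, break_even_images(cost))
# low resolution 10000
# high resolution 2000
```

In other words, a single user would need to analyse thousands of screenshots per month before pay-per-image API usage costs more than one subscription.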

Ensuring security in AI-based projects

Security is very important for all projects, especially those that use AI features. Data is transferred over the Internet, so we must ensure its safety. In ELEKS UI Copilot, we worked only with visual information and public applications, so the risk of exposing sensitive information is much lower than when working inside a code repository. However, we still need to be sure that no data leakage occurs. We use the OpenAI API, so its security policies apply to our project as well.

We do not train on your business data (data from ChatGPT Team, ChatGPT Enterprise, or our API Platform).

— OpenAI

OpenAI retains API data for a limited period, primarily for monitoring and abuse detection. The specifics of data retention policies can be found in OpenAI's official documentation and terms of service. It is also worth mentioning that security should be considered at all levels of SDLC. To prevent risks of data leakage, we need to build our applications according to security best practices—use special secure fields for passwords, avoid exposing sensitive data on the application's UI, do not store passwords in log files, and follow other security rules.
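One of the practices listed above, keeping passwords out of log files, can be sketched with Python's standard `logging` module. The regular expression and the set of key names it looks for are assumptions; a real project would adapt them to whatever its own code actually logs.

```python
import io
import logging
import re

# Assumed key names for secrets; extend to match what your application logs.
SECRET_PATTERN = re.compile(
    r"(?i)\b(password|passwd|token|api[_-]?key)\b(\s*[=:]\s*)(\S+)"
)


class RedactSecrets(logging.Filter):
    """Mask secret values in log records before a handler writes them."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()          # message with arguments applied
        record.msg = SECRET_PATTERN.sub(r"\1\2***", message)
        record.args = None                     # arguments are already merged in
        return True


# An in-memory stream stands in for a log file in this example.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(RedactSecrets())

logger = logging.getLogger("copilot-demo")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("login attempt user=%s password=%s", "alice", "s3cr3t!")
print(stream.getvalue().strip())
```

Attaching the filter to the handler means every record is masked right before it is written, regardless of which part of the code produced it.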

Challenges in incorporating AI tools for test automation and testing

The AI world is changing every day. There are not hundreds but thousands of AI-powered tools on the market. Most of them are wrappers over the leading AI engines such as OpenAI, Gemini, Anthropic Claude, and others.

The main challenges we face when incorporating AI tools for automated testing are:

- The changeable and fast-growing market of AI products, where the leaders are continuously competing, releasing new features, lowering prices and improving model performance.

- Thousands of AI tools and wrappers are on the market, but only some are interesting and worth considering.

- Security-related questions and sensitive data should be the number one priority. A good point is that AI vendors understand risks and provide some means of security. Security policies should be analysed carefully, and additional agreements should be considered with customers before any usage of AI tools with sensitive data.

Conclusions

AI is a powerful technology, and using it can greatly boost performance. As AI technology continues to evolve, its potential to enhance software testing processes will only grow. However, as with any other technology, it's important to ensure that it is used properly and that there is no data leakage.

Based on our experience with OpenAI, we can say that their API enables the extension of the functionality of any product. For example, we have developed ELEKS UI Copilot, an assistant that can help a lot with testing mobile and other types of applications by providing valuable suggestions. AI will not replace engineers in the future, but engineers who use AI will replace those who do not use AI, so we must follow the updates and news in this area and make sure we know when and how to use this technology.

FAQs

What is AI automation testing?

AI automation testing refers to the application of artificial intelligence technologies to enhance and streamline the software testing process. This approach leverages AI to generate, execute, and analyse tests, aiming to improve efficiency, accuracy, and coverage in software testing. AI automation testing can automatically generate test scripts based on requirements, adapt to changes in the application, and provide more insightful analysis and feedback compared to traditional methods.

How is AI used in software testing?

AI tools like GitHub Copilot and ChatGPT assist in generating code, comments, and test cases. ChatGPT can create test cases based on requirements and generate test plans, SQL queries, API clients, and more. These AI systems help implement utilities, generate test data, and produce code. Advanced AI models can also process images to provide feedback on visual elements, aiding in tasks like OCR operations, image comparison, and generating quality reports from screenshots.

Can AI replace automation testing?

AI is not yet advanced enough to fully replace automation testing. AI-powered frameworks have challenges such as slowness due to data processing, stability issues with updated UI, and reliance on additional wrappers and libraries. Traditional automation tools like Selenium, Cypress, and Playwright remain popular and necessary due to the limitations of AI-powered tools. AI can enhance testing, but human oversight and traditional tools are still essential.
